Cloud Native DevOps with Kubernetes: The Ultimate Guide

Master cloud native DevOps with Kubernetes through this comprehensive guide, covering essential concepts, best practices, and tools for efficient application deployment.

Cloud native DevOps with Kubernetes helps organizations ship software faster and more reliably. But what does "cloud native" actually mean, and how does Kubernetes fit in? This guide explains cloud native DevOps with Kubernetes, covering core principles, benefits, and practical implementation. We'll explore key concepts, from pods and deployments to service meshes and GitOps. We'll also discuss the challenges of Kubernetes adoption and offer solutions for managing complexity, optimizing resources, and ensuring security. Whether you're a developer, operations engineer, or platform architect, this guide will help you build and manage robust, scalable cloud native applications with Kubernetes.

Cloud native DevOps changes how you deploy, scale, and manage applications. Kubernetes is more than a buzzword: it is the orchestration engine behind many modern applications. This guide cuts through the complexity to explain how Kubernetes works, from the building blocks behind your first pod to the service meshes and GitOps workflows used in enterprise-scale operations.

Learning Cloud Native DevOps with Kubernetes

Kubernetes has become essential for modern software development, but navigating the cloud-native landscape can be challenging. A solid understanding of Kubernetes is key to unlocking the potential of agile, scalable, and resilient applications. So, where should you start?

Recommended Resource: "Cloud Native DevOps with Kubernetes" Book

One excellent starting point is the book *Cloud Native DevOps with Kubernetes* by John Arundel and Justin Domingus. This comprehensive guide provides a practical approach to learning Kubernetes, making it accessible for all skill levels. For those looking to streamline their Kubernetes operations, especially across multiple clusters, Plural offers a robust platform for management and deployment. You can explore its capabilities and book a demo to see how it can simplify your workflow.

What is "Cloud Native DevOps with Kubernetes"?

This book demystifies the world of cloud-native development and its relationship with Kubernetes. As the authors explain, "Kubernetes is the operating system of the cloud native world, providing a reliable and scalable platform for running containerized workloads." It serves as a practical guide to implementing Kubernetes, breaking down complex concepts into digestible chunks. For teams managing multiple Kubernetes clusters, a platform like Plural can significantly reduce the operational overhead. See how Plural simplifies Kubernetes management.

Why Choose "Cloud Native DevOps with Kubernetes"?

In today’s fast-paced world, efficiency is paramount. This book emphasizes leveraging managed Kubernetes services like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS). This approach minimizes the complexities of self-hosting and allows you to focus on building and deploying applications. The second edition underscores this further, highlighting how managed services streamline cloud-native development. Plural integrates with these managed services, providing a unified control plane for your entire Kubernetes fleet. Learn more about Plural's architecture and how it can enhance your existing workflow.

What You'll Learn

From fundamental building blocks like pods and deployments to more advanced concepts such as service meshes and GitOps, this book covers a wide range of topics. You'll gain practical insights into building cloud-native applications and their supporting infrastructure, including creating a robust continuous deployment pipeline. More details on the specific learning outcomes can be found on the O'Reilly page. As you delve deeper into Kubernetes, consider how a platform like Plural can automate many of these processes, freeing up your team to focus on development. Explore Plural's pricing plans to see how it fits your needs.

Who Should Read This Book?

Whether you're a beginner taking your first steps into the world of Kubernetes or an experienced engineer seeking to optimize existing deployments, this book offers valuable knowledge. It's designed to be a helpful resource for anyone looking to deepen their understanding of Kubernetes and cloud-native best practices, as explained in the second edition.

About the Authors

John Arundel and Justin Domingus, the authors of *Cloud Native DevOps with Kubernetes*, are recognized experts in the field. Their combined expertise provides a wealth of practical knowledge and real-world experience, making the book an invaluable resource. You can find more about the authors on Amazon.

Where to Buy

The book is readily available for purchase on platforms like Amazon, offering various formats to suit your preferences. You can find new and used copies, often at discounted prices.

Key Takeaways

- Kubernetes is essential for modern cloud native DevOps: It automates deployments, scales applications, and manages infrastructure, enabling faster releases and improved reliability. Understanding core concepts like Pods, Deployments, and Services is crucial.

- A robust cloud native DevOps pipeline requires a comprehensive strategy: Implement CI/CD, leverage GitOps, and embrace Infrastructure as Code (IaC). Choose the right tools, such as Helm for packaging and a service mesh like Istio or Linkerd.

- Address Kubernetes challenges with planning and the right approach: Manage complexity, allocate resources effectively, handle stateful applications, and implement robust security. Continuous learning and best practices are essential. Managed services and platforms like Plural simplify adoption and management.

Understanding Cloud Native DevOps and Kubernetes

This section clarifies Cloud Native DevOps and the role of Kubernetes.

What is Cloud Native DevOps?

Cloud native development is more than just using the cloud; it's about building and running applications designed for dynamic cloud environments. This approach leverages characteristics like elasticity, scalability, and resilience. Cloud native development relies on technologies like microservices, containers, and immutable infrastructure. DevOps practices, with their emphasis on automation and collaboration, are essential for managing the rapid pace of this development style.

Kubernetes Explained

Kubernetes is an open-source container orchestration platform. It automates deploying, scaling, and managing containerized applications. Consider it the operating system for your cloud native applications, providing a consistent platform across different cloud providers and on-premises environments. Kubernetes has become the industry standard for container orchestration, offering a robust and scalable solution for managing complex application deployments. It simplifies operational tasks, including rolling updates and rollbacks, automatic scaling, and self-healing.

How Kubernetes Enables Cloud Native DevOps

Kubernetes is fundamental to cloud native DevOps. It provides the platform for automating application deployments and scaling, enabling faster and more reliable software releases. Features like declarative configuration and automated rollouts streamline deployments. Kubernetes also pairs well with Infrastructure-as-Code (IaC) tools like Terraform, which can provision clusters and their surrounding infrastructure, further enhancing automation and consistency. By abstracting away the underlying infrastructure, Kubernetes lets development teams focus on building and deploying applications, not managing servers and networks. This separation of concerns lets organizations scale cloud native adoption efficiently.
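Declarative configuration works because Kubernetes controllers run reconciliation loops: each one compares the desired state you declared with what is actually running and acts to close the gap. The Python sketch below is a toy illustration of that idea for a single replica count. `LiveState` and `reconcile` are hypothetical names; real controllers watch the Kubernetes API server instead of mutating a local list.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class LiveState:
    """Hypothetical stand-in for what is actually running in the cluster."""
    pods: list[str] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count)


def reconcile(desired_replicas: int, live: LiveState) -> list[str]:
    """One pass of a control loop: observe, compare with desired state, act."""
    actions = []
    while len(live.pods) < desired_replicas:  # too few pods: create more
        name = f"web-{next(live._ids)}"
        live.pods.append(name)
        actions.append(f"create {name}")
    while len(live.pods) > desired_replicas:  # too many pods: delete extras
        name = live.pods.pop()
        actions.append(f"delete {name}")
    return actions


live = LiveState()
print(reconcile(3, live))  # ['create web-0', 'create web-1', 'create web-2']
live.pods.remove("web-1")  # simulate a pod crashing
print(reconcile(3, live))  # ['create web-3']
print(reconcile(3, live))  # [] -- already converged, nothing to do
```

Running `reconcile` repeatedly is safe: once the live state matches the declaration, it does nothing. That idempotence is what lets Kubernetes replace a failed pod without anyone issuing a command.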

Kubernetes and Plural: A Powerful Combination

Kubernetes acts as the operating system for cloud-native applications, providing a consistent platform across diverse environments, from various cloud providers to on-premises infrastructure (O'Reilly, "Cloud Native DevOps with Kubernetes"). This consistency helps organizations streamline application deployment and boost operational efficiency, while features such as rolling updates, automatic scaling, and self-healing free teams to focus on building and shipping high-quality software.

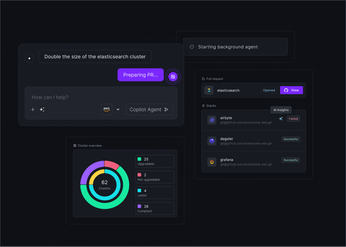

Managing Kubernetes at scale presents challenges. Plural simplifies Kubernetes adoption and management, providing an enterprise-ready platform that streamlines complex operations (Plural). Plural helps teams address the challenges of cloud-native DevOps, implementing best practices and optimizing cloud-native strategies. Features like GitOps-based continuous deployment, secure dashboards, and robust IaC management enhance Kubernetes at scale. This combination of Kubernetes and Plural empowers organizations to fully realize the potential of cloud-native DevOps.

Why Use Kubernetes in Cloud Native Environments?

Kubernetes is essential for cloud native environments, offering a robust platform for managing containerized applications. Its features streamline operations, boost efficiency, and improve the overall performance of your applications.

Automated Scaling with Kubernetes

Kubernetes excels at orchestrating containers and automating their deployment, scaling, and management. This automation frees developers from infrastructure concerns, allowing them to concentrate on code. Need to scale your application to handle increased traffic? With a HorizontalPodAutoscaler configured, Kubernetes adjusts the number of running pod replicas based on observed metrics such as CPU utilization or custom application metrics. This keeps performance steady and resource use efficient without manual intervention.
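The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below approximates that rule, including clamping to minimum and maximum replica counts. The function name and the `min_replicas`/`max_replicas` defaults are illustrative assumptions, and the real controller also applies stabilization windows and scaling policies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Approximate the HPA rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Never go below or above the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```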

Self-Healing and Service Discovery with Kubernetes

Kubernetes offers built-in self-healing capabilities, constantly monitoring the health of your application. If a container fails, Kubernetes automatically restarts it. If a node goes down, Kubernetes reschedules the affected containers on healthy nodes. This automated recovery process ensures application resilience and minimizes downtime. Kubernetes also simplifies service discovery, allowing services to locate and communicate with each other seamlessly, even as they scale and move across the cluster.
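When a container keeps failing, the kubelet does not restart it in a tight loop. According to the Kubernetes documentation, it waits with an exponential back-off (10s, 20s, 40s, and so on) capped at five minutes, which is what the `CrashLoopBackOff` status reflects. The Python sketch below only computes that schedule; `restart_delays` is a hypothetical helper, and its defaults are the documented values at the time of writing, which may differ in your Kubernetes version.

```python
def restart_delays(restarts: int, initial: float = 10.0, cap: float = 300.0) -> list[float]:
    """Delay (seconds) before each successive restart: doubles each time, capped."""
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)  # exponential growth, but never beyond the cap
    return delays


print(restart_delays(7))  # [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```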

Optimizing Resource Utilization with Kubernetes

Kubernetes optimizes resource allocation, ensuring efficient use of computing resources. The scheduler places pods on nodes according to their declared resource requests, and limits cap what each container can consume, so workloads can be packed onto fewer machines with less waste and lower infrastructure costs. Combined with autoscaling, this also ensures that applications have the resources they need when they need them, preventing performance bottlenecks. For example, if your application experiences a surge in traffic, the Horizontal Pod Autoscaler can add pods and a cluster autoscaler can add nodes to handle the increased load, then scale back down when traffic subsides.
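Scheduling decisions are driven by resource *requests*: a node is a candidate for a pod only if the pod's requests, added to the requests of pods already on the node, fit within the node's allocatable capacity. The Python sketch below shows that filter in simplified form. The `Node` fields and units (millicores and MiB) are illustrative assumptions; the real scheduler checks more resource types and constraints, then scores the feasible nodes to pick one.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int    # millicores available to pods
    allocatable_mem_mi: int   # MiB available to pods
    requested_cpu_m: int = 0  # sum of requests of pods already placed here
    requested_mem_mi: int = 0


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """A pod fits if its requests plus existing requests stay within allocatable."""
    return (node.requested_cpu_m + cpu_m <= node.allocatable_cpu_m
            and node.requested_mem_mi + mem_mi <= node.allocatable_mem_mi)


def feasible_nodes(nodes: list[Node], cpu_m: int, mem_mi: int) -> list[str]:
    return [n.name for n in nodes if fits(n, cpu_m, mem_mi)]


nodes = [
    Node("node-a", 4000, 8192, requested_cpu_m=3500, requested_mem_mi=2048),
    Node("node-b", 4000, 8192, requested_cpu_m=1000, requested_mem_mi=7680),
    Node("node-c", 2000, 4096),
]
print(feasible_nodes(nodes, cpu_m=500, mem_mi=1024))  # ['node-a', 'node-c']
```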

Faster and More Reliable Deployments with Kubernetes

Kubernetes enables faster and more reliable deployments, allowing organizations to release updates and new features more frequently. This increased agility translates to faster innovation cycles and improved responsiveness to market demands. Built-in rolling updates and rollbacks automate the deployment process, and Kubernetes supports progressive patterns such as canary deployments, reducing the risk of deployment errors and ensuring a smooth transition between versions. Canary deployments, for instance, gradually roll out a new version to a subset of users, minimizing the impact of potential bugs. This improved deployment speed is a key advantage in today's fast-paced software development landscape.
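A plain Kubernetes Service spreads traffic across all matching pods, so the simplest canary is a small canary Deployment behind the same Service, giving a split roughly proportional to replica counts. Finer-grained, percentage-based routing usually comes from an ingress controller or service mesh. The Python sketch below shows the idea behind such routing under that assumption: hash each user ID into one of 100 buckets and send the lowest buckets to the canary. `route` is a hypothetical function, not part of any Kubernetes API.

```python
import hashlib


def route(user_id: str, canary_percent: int) -> str:
    """Return "canary" or "stable" for a user, keeping each user on one version.

    Hashing the user ID (rather than choosing randomly per request) means the
    same user always lands in the same bucket, so their experience is consistent.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u, 10) == "canary" for u in users) / len(users)
print(f"canary share at 10%: {share:.1%}")  # close to 10%, not exact
```

Raising `canary_percent` step by step only ever moves users from stable to canary, never back, because each user's bucket is fixed.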

Core Kubernetes Concepts for DevOps

This section covers core Kubernetes concepts DevOps teams need to effectively manage and deploy applications in a cloud native environment.

Understanding Pods, Containers, and Deployments

Kubernetes orchestrates containerized applications using a series of abstractions, starting with Pods. A Pod is the smallest deployable unit, encapsulating one or more containers. Think of a pod as a logical host for your containers, sharing resources like network and storage. DevOps teams typically use Deployments to manage pods, enabling declarative updates and rollouts. Deployments automate the creation and updating of pods, ensuring smooth transitions between versions and minimizing downtime. This declarative approach lets teams focus on the desired state of their application, not the complexities of deployment.
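To make the Pod and Deployment relationship concrete, the Python sketch below builds a minimal `apps/v1` Deployment manifest as a dictionary and prints it as JSON, which `kubectl apply -f -` accepts as well as YAML. The image `registry.example.com/web:1.0.0` and the helper `deployment` are hypothetical placeholders. The key detail is that `spec.selector.matchLabels` must match the labels in the Pod template, which is how the Deployment knows which pods it manages.

```python
import json


def deployment(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a Python dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }


manifest = deployment("web", "registry.example.com/web:1.0.0")
# Pipe this output to `kubectl apply -f -` to create or update the Deployment.
print(json.dumps(manifest, indent=2))
```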

Kubernetes Networking: Services and Ingress

Networking in a Kubernetes cluster can be complex. Services provide a stable endpoint for accessing a group of pods, selected by their labels, abstracting away the dynamic nature of individual pod IPs. This enables seamless communication between application components, even as pods are created and destroyed. Ingress exposes HTTP and HTTPS routes from outside the cluster to your services. An Ingress controller implements those rules, acting as a reverse proxy and load balancer that routes external traffic to the appropriate services.
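A Service finds its pods through a label selector: a pod becomes an endpoint when its labels contain every key/value pair in the selector, and by default only pods that pass their readiness checks receive traffic. The Python sketch below models that matching with plain dictionaries. The pod records and `select_endpoints` are hypothetical; in a real cluster the control plane maintains this list as EndpointSlice objects.

```python
def select_endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return IPs of ready pods whose labels include every selector key/value."""
    return [
        p["ip"] for p in pods
        if p["ready"] and all(p["labels"].get(k) == v for k, v in selector.items())
    ]


pods = [
    {"ip": "10.0.1.5", "labels": {"app": "web", "tier": "frontend"}, "ready": True},
    {"ip": "10.0.1.6", "labels": {"app": "web", "tier": "frontend"}, "ready": False},
    {"ip": "10.0.2.9", "labels": {"app": "api"}, "ready": True},
]
print(select_endpoints({"app": "web"}, pods))  # ['10.0.1.5']
```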

Managing Configuration and Storage in Kubernetes

Managing application configuration and sensitive data is essential. ConfigMaps store non-sensitive configuration data separately from your application code, so you can update configuration without rebuilding your application. Secrets hold sensitive information like passwords and API keys. By default, Kubernetes stores Secrets unencrypted in etcd, so restrict access to them with RBAC and enable encryption at rest to protect them from unauthorized access. Kubernetes also offers various persistent storage options, allowing you to store data that must persist across pod restarts or failures.
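The `data` field of a Secret holds base64-encoded values, and it is worth seeing that this is an encoding, not encryption. The Python sketch below builds a minimal `Opaque` Secret manifest and decodes a value back. `secret_manifest` and the sample password are hypothetical; in practice you would create Secrets with `kubectl create secret` or a secrets-management tool rather than committing values to Git.

```python
import base64


def secret_manifest(name: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret whose `data` values are base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in values.items()},
    }


s = secret_manifest("db-credentials", {"password": "s3cret"})
print(s["data"]["password"])  # czNjcmV0
# Anyone who can read the object can reverse the encoding.
print(base64.b64decode(s["data"]["password"]).decode())  # s3cret
```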

RBAC and Security in Kubernetes

Security is paramount in Kubernetes. Role-Based Access Control (RBAC) lets you define granular permissions for users and service accounts within your cluster. This ensures that only authorized entities can access specific resources, limiting the impact of potential security breaches. Implementing RBAC, along with other security best practices, is crucial for a secure and compliant Kubernetes environment.
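Kubernetes RBAC is purely additive: a request is allowed if any rule bound to the user or service account grants the requested verb on the resource in its API group, and there are no deny rules. The Python sketch below models that check for a read-only developer role. The `Rule` class and `is_allowed` are hypothetical simplifications that ignore namespaces, resource names, and how Roles are attached through RoleBindings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(values: tuple[str, ...], wanted: str) -> bool:
    return "*" in values or wanted in values


def is_allowed(rules: list[Rule], api_group: str, resource: str, verb: str) -> bool:
    """Allowed if any rule grants the request; nothing can subtract a permission."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


# Read-only access to Deployments and Pods ("" is the core API group).
developer = [
    Rule(("apps",), ("deployments",), ("get", "list", "watch")),
    Rule(("",), ("pods", "pods/log"), ("get", "list", "watch")),
]
print(is_allowed(developer, "apps", "deployments", "list"))    # True
print(is_allowed(developer, "apps", "deployments", "delete"))  # False
```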

Streamlining RBAC with Plural

Poorly managed RBAC creates risk in both directions. Overly permissive access opens security vulnerabilities and compliance issues, while overly restrictive access hinders productivity.

The Plural Console embedded Kubernetes dashboard ships with closed permissions by default. This requires users to gradually add RBAC rules to users or groups as they expand access. This measured approach allows organizations to carefully manage permissions and ensure that access is granted only as needed, enhancing security and facilitating team collaboration. For example, you might grant developers read access to a namespace with their application resources, while giving site reliability engineers (SREs) full access to the same namespace for troubleshooting. The Plural documentation provides further details on configuring RBAC for the dashboard.

Plural simplifies RBAC management through a unified platform that integrates with existing identity providers. This integration allows for seamless authentication and authorization, resolving all RBAC to the console user's email and groups connected to the identity provider. This centralized approach streamlines access control by eliminating the need for separate user accounts and permissions within Kubernetes. This is particularly helpful for organizations with numerous users and clusters, simplifying user management and reducing inconsistencies. The GitHub documentation offers a more technical look at Plural's integration with Kubernetes dashboards.

For organizations scaling their Kubernetes adoption, Plural streamlines RBAC management, making it easier to implement best practices and maintain a secure environment. This not only enhances security but also empowers teams to work more efficiently. By simplifying RBAC, Plural frees up DevOps teams to focus on other critical tasks, like application development and deployment. This Medium article offers additional insights into simplifying Kubernetes RBAC management, discussing various tools and strategies.

Building a Cloud Native DevOps Pipeline with Kubernetes

This section outlines how to build a robust, cloud-native DevOps pipeline using Kubernetes. We'll cover continuous integration (CI), continuous deployment (CD), GitOps, infrastructure as code (IaC), and implementing a microservices architecture.

Effective CI Strategies for Kubernetes

Effective CI is crucial for managing the complexities of Kubernetes. Your CI pipeline should automate the building, testing, and packaging of your application into container images. Popular CI tools like Jenkins, GitLab CI, and CircleCI integrate with Kubernetes, allowing you to deploy your application to a development or staging environment for further testing. Because deploying to Kubernetes involves many moving parts, a well-defined CI process is essential. Choose a CI tool that supports your preferred workflows and integrates with your existing toolchain.
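One common CI convention is to tag each image with the Git commit it was built from instead of reusing a mutable tag like `latest`, so every deployment traces back to exact source. The Python sketch below builds such a reference. The registry and repository names are hypothetical, and it assumes 40-character SHA-1 commit IDs (repositories using SHA-256 object IDs have 64-character hashes).

```python
import re

# Assumption: full SHA-1 commit IDs, 40 lowercase hex characters.
SHA_PATTERN = re.compile(r"[0-9a-f]{40}")


def image_ref(registry: str, repo: str, commit_sha: str, short_len: int = 12) -> str:
    """Tag an image with the commit it was built from: registry/repo:<short sha>."""
    if not SHA_PATTERN.fullmatch(commit_sha):
        raise ValueError(f"not a full 40-character commit SHA: {commit_sha!r}")
    return f"{registry}/{repo}:{commit_sha[:short_len]}"


print(image_ref("registry.example.com", "team/web",
                "3f9c2a7e1b4d5c6f7a8b9c0d1e2f3a4b5c6d7e8f"))
# registry.example.com/team/web:3f9c2a7e1b4d
```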

CD and GitOps Workflows for Kubernetes

Kubernetes is the foundation for modern Continuous Deployment (CD) and GitOps workflows. CD automates the release and deployment of your application to production, while GitOps uses Git as the source of truth for your infrastructure and application configurations. Tools like Argo CD and FluxCD simplify GitOps implementation, enabling declarative configuration management and automated synchronization between your Git repository and your Kubernetes cluster. This approach streamlines deployments and rollbacks, making your infrastructure more reliable and easier to manage.
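At the heart of a GitOps controller is drift detection: compare the manifests in Git with the live objects in the cluster and report fields that differ. The Python sketch below does a recursive comparison that only checks fields declared in Git, so server-populated fields such as `status` are ignored. It is a simplified illustration under that assumption; Argo CD and FluxCD use more sophisticated comparisons, and `diff` is a hypothetical helper.

```python
from collections.abc import Iterator


def diff(desired: object, live: object, path: str = "") -> Iterator[tuple[str, object, object]]:
    """Yield (path, desired, live) for each field where the cluster differs from Git.

    Only keys present in the desired manifest are compared, so fields the
    cluster adds on its own (such as status) are not reported as drift.
    """
    if isinstance(desired, dict) and isinstance(live, dict):
        for key, value in desired.items():
            child = f"{path}.{key}" if path else str(key)
            yield from diff(value, live.get(key), child)
    elif desired != live:
        yield (path, desired, live)


desired = {"spec": {"replicas": 3, "template": {"image": "registry.example.com/web:1.2.0"}}}
live = {
    "spec": {"replicas": 5, "template": {"image": "registry.example.com/web:1.2.0"}},
    "status": {"readyReplicas": 5},
}
print(list(diff(desired, live)))  # [('spec.replicas', 3, 5)]
```

Imagine someone ran `kubectl scale` by hand: the replica count no longer matches Git, and a GitOps controller would either flag that drift or revert it by re-applying the manifest from the repository.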

Simplifying GitOps with Plural

Managing GitOps workflows across a large fleet of Kubernetes clusters can be complex. Plural simplifies this by providing a unified platform for managing and deploying applications across all your clusters. With Plural, you can:

- Deploy and manage Argo CD and FluxCD instances across your entire fleet.

- Centralize configuration management for all your GitOps repositories.

- Monitor the health and status of your GitOps deployments.

- Automate common GitOps tasks, such as deployments and rollbacks.

Plural's agent-based architecture ensures secure and efficient communication between your management cluster and workload clusters, simplifying the management of even the most complex GitOps deployments. This allows teams to focus on development rather than infrastructure management. To learn more about how Plural can simplify your GitOps workflows, book a demo today.

Infrastructure as Code and Immutable Infrastructure with Kubernetes

IaC is fundamental to managing Kubernetes environments at scale. Tools like Terraform and Pulumi allow you to define your infrastructure in code, enabling version control, reproducibility, and automation. This approach promotes immutable infrastructure, where infrastructure changes are applied by creating new resources rather than modifying existing ones. IaC simplifies infrastructure management, reduces errors, and improves the overall reliability of your Kubernetes deployments. Platform engineering, combined with IaC, allows enterprises to scale cloud-native adoption without overwhelming developers with Kubernetes' complexities.
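The idea of immutable infrastructure can be sketched as a plan step: compare the declared resources with the current ones, create what is missing, destroy what is no longer declared, and replace, rather than modify, anything that changed. The Python sketch below is a toy model under that assumption. Real tools such as Terraform update many attributes in place and force replacement only where a change requires it; `plan` and the resource names are hypothetical.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Compute actions that move `current` to `desired`, immutable style.

    A changed resource is replaced (new one created, old one destroyed)
    instead of being modified in place.
    """
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("replace", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("destroy", name))
    return actions


desired = {"vpc": {"cidr": "10.0.0.0/16"}, "node-pool": {"size": 3, "image": "v2"}}
current = {"vpc": {"cidr": "10.0.0.0/16"}, "node-pool": {"size": 3, "image": "v1"},
           "old-bucket": {}}
print(plan(desired, current))  # [('replace', 'node-pool'), ('destroy', 'old-bucket')]
```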

Plural Stacks for Infrastructure as Code

Plural Stacks provides a scalable framework to manage IaC tools like Terraform directly within your Kubernetes workflows. You declaratively define a stack, specifying its type (e.g., Terraform), the source code repository, and the target Kubernetes cluster for execution. This Kubernetes-native approach offers several advantages:

- Automated IaC Runs: Every commit to your IaC repository triggers a new run, automatically executed by Plural on the designated cluster. This automation streamlines infrastructure updates and ensures consistency across environments. You can even manage sensitive values like secrets securely within Plural.

- Granular Control and Security: Plural allows fine-grained control over permissions and network locations for IaC runs, enhancing security and compliance. This is particularly important for organizations with strict security requirements.

- Enhanced Visibility and Collaboration: Plural provides detailed information about each run, including inputs/outputs and Terraform state diagrams. This enhanced visibility simplifies debugging and promotes collaboration among team members.

- Integrated Plan and Apply: For pull requests to your IaC repository, Plural executes "plan" runs and posts the results as comments directly on the PR. This integration provides early feedback and facilitates code review, catching potential issues before they reach production. This streamlined workflow improves collaboration and reduces the risk of errors. For example, imagine updating your AWS VPC configuration. Plural will run `terraform plan` on your changes and comment directly on the PR with the planned changes, allowing for review and discussion before applying the changes to your infrastructure.

By integrating IaC management directly into the Kubernetes ecosystem, Plural Stacks simplifies infrastructure automation, improves visibility, and strengthens security. This approach empowers platform engineering teams to manage infrastructure effectively at scale, enabling faster and more reliable deployments.

Implementing a Microservices Architecture with Kubernetes

Kubernetes is ideal for running microservices. Its container orchestration capabilities simplify deploying, scaling, and managing individual microservices independently. Kubernetes provides features like service discovery, load balancing, and automated rollouts, which are essential for building resilient and scalable microservices architectures. While Kubernetes itself can be complex, it effectively addresses many of the challenges associated with microservices application delivery.
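Service discovery between microservices usually goes through cluster DNS: each Service gets a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is `cluster.local` unless the cluster is configured otherwise. The Python helper below, a hypothetical `service_dns` function, simply builds that name; `payments` and `checkout` are placeholder names.

```python
def service_dns(service: str, namespace: str = "default",
                cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A client in another namespace needs at least "<service>.<namespace>";
# a client in the same namespace can use the bare service name.
print(service_dns("payments", "checkout"))  # payments.checkout.svc.cluster.local
```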

Extending Kubernetes with DevOps Tools

A well-functioning Kubernetes cluster often relies on additional tools and integrations to streamline operations and enhance capabilities. Let's explore some essential additions for a robust DevOps workflow.

Managing Packages with Helm

Deploying and managing applications on Kubernetes can be complex due to the numerous resources involved. Helm simplifies this by packaging Kubernetes resources into charts, allowing you to define, install, and upgrade even the most intricate applications with ease. Think of it as a package manager for Kubernetes, similar to apt or yum for Linux systems. This simplifies deployments and reduces the chance of errors, a common challenge when manually managing Kubernetes manifests. Helm charts provide a templating mechanism, enabling you to customize deployments for different environments without rewriting large amounts of YAML.
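Helm renders Go templates against a set of values, where environment-specific values files override the chart's defaults and nested maps are merged key by key. The Python sketch below imitates that override-and-render flow with `string.Template`. It is not Helm's actual engine or syntax, and the `merge_values` helper, template, and values are hypothetical.

```python
import copy
from string import Template


def merge_values(base: dict, override: dict) -> dict:
    """Deep-merge `override` onto `base` without mutating either input."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)  # merge nested maps
        else:
            result[key] = copy.deepcopy(value)  # scalars and lists are replaced
    return result


MANIFEST = Template("replicas: $replicas\nimage: $repo:$tag\n")

defaults = {"replicas": 1, "image": {"repo": "registry.example.com/web", "tag": "1.0.0"}}
production = {"replicas": 5, "image": {"tag": "1.0.1"}}

values = merge_values(defaults, production)
print(MANIFEST.substitute(replicas=values["replicas"], **values["image"]))
```

Only the fields that differ per environment live in the override, so the production file stays short and the shared defaults remain in one place.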

Leveraging Service Mesh Solutions (Istio, Linkerd)

As your application grows, managing communication between microservices becomes increasingly challenging. Service meshes like Istio and Linkerd provide a dedicated infrastructure layer for service-to-service communication, offering features like traffic management, security, and observability. They run on top of the cluster's Container Network Interface (CNI) networking rather than replacing it; both meshes offer an optional CNI plugin that sets up traffic redirection to their proxies without privileged init containers. Istio offers advanced traffic management capabilities, enabling features like canary deployments and blue/green deployments. Linkerd focuses on simplicity and ease of use, making it a good choice for smaller teams or those new to service meshes.

Monitoring and Observability for Kubernetes

Gaining insights into your Kubernetes cluster's performance and health is crucial for maintaining optimal operation. Monitoring and observability tools provide the visibility you need to identify and troubleshoot issues effectively. These tools collect metrics, logs, and traces from your applications and infrastructure, offering a comprehensive view of your system's behavior. This addresses the growing complexity of Kubernetes and cloud-native technologies, providing end-to-end visibility and advanced analytics. Open-source options like Prometheus for monitoring and Grafana for visualization offer a flexible and cost-effective starting point. For more advanced features and managed services, consider commercial solutions like Datadog or Dynatrace.

Integrating Your CI/CD Pipeline with Kubernetes

Automating your deployment workflow is essential for faster release cycles and reduced manual intervention. Integrating your CI/CD pipeline with Kubernetes allows you to automate the building, testing, and deployment of your applications. Tools like Jenkins, GitLab CI, and CircleCI can be configured to interact with Kubernetes, enabling automated deployments triggered by code changes. This streamlines the process of deploying and managing Kubernetes services, a key challenge for many DevOps engineers. By automating deployments, you can ensure consistent and reliable releases, freeing up your team to focus on other critical tasks. For instance, you can configure your CI/CD pipeline to automatically deploy new versions of your application to a staging environment for testing before promoting them to production.
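The staging-then-production flow described above boils down to a gate: deploy to staging, run checks, and promote only on success. The Python sketch below captures that control flow with the deploy and test steps passed in as functions. `promote` and both callables are hypothetical stand-ins for whatever your CI/CD tool actually runs against each environment, such as `kubectl apply` or a Helm upgrade.

```python
from typing import Callable


def promote(image: str,
            deploy: Callable[[str, str], None],
            smoke_test: Callable[[str], bool]) -> str:
    """Deploy to staging first; promote to production only if staging checks pass."""
    deploy("staging", image)
    if not smoke_test("staging"):
        return f"stopped: {image} failed staging checks, production untouched"
    deploy("production", image)
    return f"promoted {image} to production"


# Hypothetical stand-ins: record deployments instead of calling a real cluster.
deployed: list[tuple[str, str]] = []
result = promote("registry.example.com/web:3f9c2a7e1b4d",
                 deploy=lambda env, img: deployed.append((env, img)),
                 smoke_test=lambda env: True)
print(result)
print(deployed)
```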

Comparing Managed Kubernetes Services

Choosing the right Kubernetes distribution can significantly impact your operational efficiency. This section compares popular managed Kubernetes services, highlighting their strengths and weaknesses to help you make an informed decision. For a deeper dive into choosing the right Kubernetes distribution for your needs, see our Kubernetes distribution comparison guide.

Google Kubernetes Engine (GKE)

GKE excels at container orchestration at scale. Features like Autopilot mode simplify cluster management by automating tasks such as node provisioning, scaling, and upgrades. This frees up your team to focus on application development. GKE's tight integration with other Google Cloud services makes it a compelling choice for organizations already within that ecosystem.

Amazon Elastic Kubernetes Service (EKS)

EKS simplifies running Kubernetes on AWS without managing the control plane. Its seamless integration with AWS services like IAM and VPC strengthens security and streamlines operations. EKS offers various operating models, including EKS Anywhere, which extends EKS to on-premises environments. If your organization uses AWS infrastructure, EKS provides a robust and integrated Kubernetes solution.

Azure Kubernetes Service (AKS)

AKS simplifies Kubernetes deployment and management on Azure. Integrated with Azure Active Directory (now Microsoft Entra ID), AKS offers enhanced security and access control. Automated upgrades and patching simplify maintenance, ensuring your cluster remains up-to-date. AKS integrates tightly with other Azure services, making it a natural fit for organizations already using the Microsoft Azure cloud.

DigitalOcean Kubernetes

DigitalOcean Kubernetes provides a user-friendly managed Kubernetes service ideal for smaller teams or projects. Its simplified interface and straightforward setup process make it accessible to those new to Kubernetes. While offering essential features like autoscaling and monitoring, DigitalOcean Kubernetes prioritizes ease of use and a lower barrier to entry.

IBM Cloud Kubernetes Service

IBM Cloud Kubernetes Service focuses on security and compliance. Features like vulnerability scanning and network policies help protect your workloads. This service caters to enterprises in regulated industries requiring robust security measures and compliance certifications.

Red Hat OpenShift

OpenShift builds upon Kubernetes, adding developer-centric tools and features. Its integrated CI/CD pipelines and enhanced security policies streamline application development and deployment. OpenShift targets organizations seeking a comprehensive platform for building, deploying, and managing containerized applications.

VMware Tanzu Kubernetes Grid

Tanzu Kubernetes Grid integrates with VMware's vSphere platform, providing a consistent Kubernetes experience across on-premises and cloud environments. This hybrid cloud approach allows organizations to leverage existing VMware investments while adopting Kubernetes. Tanzu focuses on operational efficiency and lifecycle management, simplifying Kubernetes operations for VMware users.

Self-Hosting Kubernetes: When and How

While managed Kubernetes services offer convenience, self-hosting provides granular control over your infrastructure—essential for organizations with specific security and compliance requirements. As highlighted in this guide to self-hosted Kubernetes, industries like finance and healthcare often prefer self-hosting to comply with stringent data regulations and maintain data sovereignty. This approach allows tailoring the Kubernetes environment to unique requirements, including specialized hardware or configurations not always supported by managed services. Self-hosting can also offer potential cost savings at scale, though it requires dedicated resources for maintenance and management. Ultimately, the decision between self-hosting and managed services hinges on your organization's specific needs, priorities, and internal resources.

Tools for Self-Hosting

Self-hosting Kubernetes successfully requires selecting the right tools for deployment and ongoing management. This article on self-hosted Kubernetes emphasizes using tools like Rancher for streamlined cluster management. Rancher simplifies Kubernetes operations across multiple environments, providing a centralized platform for managing clusters, deploying applications, and enforcing security policies. For application deployment, Helm offers robust package management. Using Helm charts, you can define, install, and upgrade applications easily. These charts act as templates for your Kubernetes resources, simplifying complex deployments and ensuring consistency across your environments. Helm’s templating engine also allows you to customize deployments for different environments without rewriting YAML manifests.
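
Helm's per-environment customization works by layering values. A chart ships defaults in its `values.yaml`, and files passed with `-f` (or `--values`) override them. Nested maps are merged key by key. The following is a minimal Python sketch of that layering idea, not Helm's implementation. `merge_values`, `defaults`, and `prod_overrides` are hypothetical names. The sketch assumes lists are replaced wholesale rather than merged, and it ignores Helm's `null` key-removal behavior.

```python
from copy import deepcopy
from typing import Any


def merge_values(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict with `override` layered on top of `base`.

    Nested dicts are merged key by key; any other value (scalars, lists)
    in `override` replaces the value in `base` outright.
    """
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


# Hypothetical chart defaults (what a chart's values.yaml might contain).
defaults = {
    "replicaCount": 1,
    "image": {"repository": "example/app", "tag": "1.0.0"},
    "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
}

# Hypothetical production overrides (e.g. a values-prod.yaml passed with -f).
prod_overrides = {
    "replicaCount": 3,
    "resources": {"requests": {"cpu": "500m"}},
}

prod_values = merge_values(defaults, prod_overrides)
print(prod_values["replicaCount"])           # 3
print(prod_values["image"]["tag"])           # 1.0.0 (inherited from defaults)
print(prod_values["resources"]["requests"])  # {'cpu': '500m', 'memory': '128Mi'}
```

Because only the differences live in the override file, the chart's templates and defaults stay shared across environments.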

Clusterless Alternatives

For teams seeking alternatives to managing a full Kubernetes cluster, clusterless architectures offer a simplified approach. This article on building a self-hosted Kubernetes cluster mentions clusterless options. These solutions abstract away the complexities of cluster management, allowing developers to focus on deploying and running applications without the overhead of maintaining the underlying infrastructure. This approach is particularly beneficial for smaller teams or projects with limited resources, or for applications that don't require the full capabilities of Kubernetes but still benefit from container orchestration. Consider a clusterless architecture if you need a more streamlined approach, especially if your team is new to container orchestration or your application has modest resource requirements. For more complex deployments and enterprise-grade management across multiple clusters, a platform like Plural can simplify operations and enhance control.

Overcoming Challenges in Cloud Native DevOps with Kubernetes

Kubernetes offers immense power for cloud native DevOps, but it also presents unique challenges. Successfully adopting Kubernetes requires careful planning and the right tooling.

Managing Kubernetes Complexity

Kubernetes introduces a new layer of abstraction and a wealth of concepts. Building internal expertise is crucial, but leveraging managed Kubernetes services or platforms like Plural can significantly reduce the initial learning curve and operational overhead. These services abstract away much of the underlying infrastructure management, allowing teams to focus on application deployment and management. As organizations mature in their Kubernetes journey, they often realize that a holistic approach to cloud native environments is essential. This includes robust tooling, continuous observability, integrated security, and a strong governance model.

Resource Allocation Best Practices

Efficient resource allocation is critical for cost optimization and application performance. Kubernetes lets you set resource requests and limits for each container. The request is the amount the scheduler reserves when it places the pod, and the limit is the most the container may consume. Setting these values accurately requires careful planning and monitoring. Over-provisioning wastes capacity you pay for, while under-provisioning causes instability: a container that exceeds its memory limit can be terminated (OOMKilled), and one that reaches its CPU limit is throttled. Tools that report actual resource usage and recommend configurations can be invaluable. Kubernetes' autoscaling capabilities also let applications adjust dynamically to changing demand, improving utilization and reducing costs.
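
Requests and limits are written as Kubernetes quantities. For example, `250m` means a quarter of a CPU core and `256Mi` means 256 mebibytes. The sketch below is a rough illustration that parses a subset of that notation. It then flags any resource whose request exceeds its limit, a combination the API server rejects. `parse_quantity` and `requests_exceeding_limits` are hypothetical helpers, and the dict shape only mimics a container's `resources` field. Neither is a Kubernetes client API.

```python
from decimal import Decimal

# Multipliers for a subset of Kubernetes quantity suffixes.
# Binary suffixes (Ki, Mi, Gi, Ti) are powers of 1024, decimal ones (k, M, G, T)
# are powers of 1000, and "m" means thousandths (as in "250m" of a CPU core).
SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30), "Ti": Decimal(2**40),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9), "T": Decimal(10**12),
    "m": Decimal("0.001"),
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity such as '250m', '2', or '256Mi' into a plain number."""
    for suffix, multiplier in SUFFIXES.items():
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * multiplier
    return Decimal(quantity)


def requests_exceeding_limits(resources: dict) -> list[str]:
    """List resource names whose request is larger than the matching limit."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return [
        name
        for name in requests
        if name in limits and parse_quantity(requests[name]) > parse_quantity(limits[name])
    ]


# Hypothetical container settings: the memory limit is below the memory request.
container = {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "128Mi"},
}
print(requests_exceeding_limits(container))  # ['memory']
```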

Running Stateful Applications in Kubernetes

Not all applications are stateless. Databases, message queues, and other stateful applications need persistent storage and careful handling of data during deployments and scaling events. Kubernetes provides PersistentVolumes and PersistentVolumeClaims for durable storage, but integrating them correctly with your applications can be complex. The StatefulSet workload API is designed for this case. It gives each pod a stable network identity and its own persistent volume claim, and it creates, updates, and removes pods in a predictable order, which helps keep data available during updates and scaling operations.
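
StatefulSet naming and ordering rules are predictable. Pods are named `<statefulset>-<ordinal>` starting at 0, and each claim from a volume claim template is named `<template>-<pod name>`. Under the default `OrderedReady` policy, pods are added lowest ordinal first and removed highest ordinal first. The sketch below models only those rules in plain Python and does not talk to a cluster. The names `web` and `www` are hypothetical, and it assumes the default ordinal start of 0.

```python
def pod_names(statefulset: str, replicas: int) -> list[str]:
    """Pods get stable names <statefulset>-<ordinal>, ordinals starting at 0."""
    return [f"{statefulset}-{i}" for i in range(replicas)]


def pvc_names(template: str, statefulset: str, replicas: int) -> list[str]:
    """Each pod gets its own claim named <template>-<pod name>."""
    return [f"{template}-{pod}" for pod in pod_names(statefulset, replicas)]


def scale_plan(statefulset: str, current: int, desired: int) -> list[str]:
    """Order of pod operations under the default OrderedReady management policy."""
    if desired > current:
        # Scale up: create the missing ordinals, lowest first.
        return [f"create {statefulset}-{i}" for i in range(current, desired)]
    # Scale down: remove the highest ordinals first.
    return [f"delete {statefulset}-{i}" for i in range(current - 1, desired - 1, -1)]


print(pod_names("web", 3))         # ['web-0', 'web-1', 'web-2']
print(pvc_names("www", "web", 2))  # ['www-web-0', 'www-web-1']
print(scale_plan("web", 3, 1))     # ['delete web-2', 'delete web-1']
```

By default, claims are retained when pods are deleted on scale-down. If the set later scales back up, `web-1` reattaches to `www-web-1` and finds its previous data.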

Addressing Kubernetes Security Concerns

Security is paramount in any cloud native environment. Kubernetes offers several security features, including Role-Based Access Control (RBAC), Network Policies, and Pod Security Admission. Correctly implementing these features is crucial for limiting access to your cluster and protecting your applications. Regular security audits and vulnerability scanning should be part of your ongoing Kubernetes operations. Consider using security-focused tools and platforms that provide end-to-end visibility, advanced analytics, and automated security workflows.
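
RBAC permissions are purely additive. A Role lists rules made of API groups, resources, and verbs, and there are no deny rules. A request is allowed only if some rule matches it. The sketch below is a simplified model of that evaluation. It ignores RoleBindings, subresources, non-resource URLs, and role aggregation. `PolicyRule` and `is_allowed` are hypothetical names, not the Kubernetes client API. The `pod-reader` rules follow the familiar read-only example, with `""` denoting the core API group.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyRule:
    """Simplified RBAC rule; '' is the core API group and '*' matches anything."""
    api_groups: list[str]
    resources: list[str]
    verbs: list[str]
    resource_names: list[str] = field(default_factory=list)  # empty means any name


def _matches(allowed: list[str], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list[PolicyRule], api_group: str, resource: str,
               verb: str, name: str = "") -> bool:
    """Allow if any rule matches; with no deny rules, everything else is denied."""
    for rule in rules:
        if (_matches(rule.api_groups, api_group)
                and _matches(rule.resources, resource)
                and _matches(rule.verbs, verb)
                and (not rule.resource_names or name in rule.resource_names)):
            return True
    return False


pod_reader = [PolicyRule(api_groups=[""], resources=["pods"], verbs=["get", "watch", "list"])]
print(is_allowed(pod_reader, "", "pods", "list"))    # True
print(is_allowed(pod_reader, "", "pods", "delete"))  # False
print(is_allowed(pod_reader, "", "secrets", "get"))  # False
```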

Best Practices for Cloud Native DevOps with Kubernetes

Successfully adopting cloud native DevOps practices with Kubernetes requires a commitment to best practices that address security, resource management, monitoring, and continuous learning. These practices are crucial for maintaining a healthy, efficient, and secure Kubernetes environment.

Implementing Robust Security for Kubernetes

Security in Kubernetes shouldn't be an afterthought; it's a continuous process that calls for a holistic approach: robust tooling, continuous observability, integrated security checks, and a strong governance model. Implement role-based access control (RBAC) to restrict access to Kubernetes resources, scan container images for vulnerabilities regularly, and use network policies to control traffic between pods in your cluster. Apply security best practices at every stage of your application lifecycle, from development to deployment. A platform like Plural can simplify implementing and managing these security measures across your entire Kubernetes fleet.
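
A common first step with network policies is a namespace-wide "default deny" policy. It uses an empty `podSelector` to select every pod and lists policy types without any allow rules, so no traffic in those directions is permitted. The sketch below builds that manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The policy name `default-deny` and namespace `payments` are hypothetical.

```python
import json


def default_deny_policy(namespace: str, egress: bool = False) -> dict:
    """Build a NetworkPolicy that selects every pod in `namespace` and allows no traffic.

    An empty podSelector matches all pods in the namespace. Because the spec
    contains no `ingress` or `egress` rules, nothing is allowed in the listed
    directions.
    """
    policy_types = ["Ingress", "Egress"] if egress else ["Ingress"]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": policy_types},
    }


if __name__ == "__main__":
    print(json.dumps(default_deny_policy("payments", egress=True), indent=2))
```

Policies are additive, so specific allow rules are then layered on in separate policies. Denying egress also blocks DNS lookups until you allow them explicitly. Network policies are only enforced when the cluster's network plugin supports them.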

Optimizing Kubernetes Resource Management

Efficient resource management is key to controlling cost and maintaining performance in Kubernetes. Right-size your pods and deployments so they have the resources they need without over-provisioning. Kubernetes' autoscaling capabilities adjust resources to demand, so your applications can absorb traffic spikes while costs fall during periods of low activity. The Horizontal Pod Autoscaler (HPA) automates this by adding or removing pod replicas based on observed metrics such as CPU utilization. The Vertical Pod Autoscaler (VPA), an add-on, adjusts the CPU and memory requests of individual containers. Keeping configuration simple and making good use of Kubernetes' networking capabilities also matter for a successful implementation. Plural's infrastructure-as-code management features can streamline these processes.
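
The HPA's documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The controller skips scaling when the ratio is within a tolerance, 10% by default. The sketch below applies that rule and clamps the result to the configured minimum and maximum. It deliberately omits stabilization windows, scaling policies, and the handling of missing or unready pods. The function name and default bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA calculation: ceil(current * currentMetric / targetMetric), clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 4 replicas at 20% against a 60% target -> scale in to 2.
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

In a real cluster, scale-in is additionally smoothed by a stabilization window (five minutes by default), which prevents replica counts from flapping when load briefly dips.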

Effective Monitoring and Logging for Kubernetes

Comprehensive monitoring and logging are essential for understanding the health and performance of your Kubernetes cluster and applications. Kubernetes doesn't ship a complete built-in monitoring or log-aggregation stack, so you need to assemble one. Common choices include Prometheus for collecting metrics, Elasticsearch for storing and searching logs, and Grafana for dashboards, plus a tracing backend if you need distributed traces. Set up alerts to notify you of potential issues, and use the collected data to troubleshoot problems and optimize performance. Centralized logging and monitoring solutions provide a single pane of glass for observability across your entire Kubernetes environment. Plural's integrated dashboard and monitoring tools can help you gain comprehensive visibility into your Kubernetes deployments.

Continuous Learning for Kubernetes and DevOps

The cloud native landscape is constantly evolving. Encourage continuous learning and team upskilling to stay ahead of the curve. DevOps teams should be proficient in Kubernetes concepts, tools, and best practices. Promote knowledge sharing through internal workshops, online courses, and participation in the Kubernetes community. Automation is a key trend in DevOps. Embrace automation tools for vulnerability scanning, code analysis, and other tasks to improve efficiency and security. By investing in continuous learning, your team can effectively leverage the power of Kubernetes and cloud native technologies. Platforms like Plural can simplify Kubernetes management, freeing up your team to focus on continuous improvement and innovation.

Choosing the Right Kubernetes Solution

Kubernetes has become the standard for container orchestration, but adopting it requires careful planning. Choosing the right Kubernetes solution depends on your organization's specific needs, resources, and long-term goals. This section outlines key considerations to help you make an informed decision.

Assessing Your Kubernetes Needs

Before looking at Kubernetes distributions, define your objectives. What problems are you trying to solve with Kubernetes? Are you aiming for faster deployments, improved scalability, or better resource utilization? Understanding your goals will guide your technology choices. Next, assess your in-house expertise. Do you have engineers experienced with Kubernetes, or will you need training and support? Consider your infrastructure requirements. Do you prefer on-premises, cloud, or a hybrid approach? Aligning your Kubernetes strategy with your resources and capabilities is crucial for success.

Managed vs. Self-Hosted Kubernetes

One of the first decisions you'll face is whether to use a managed Kubernetes service or self-host your own cluster. Managed services, like Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS), offer convenience and reduce operational overhead. The cloud provider handles infrastructure management, upgrades, and scaling, allowing your team to focus on application development. Self-hosting provides greater control and customization. You have full control over the underlying infrastructure and can tailor it to your specific needs. However, self-hosting requires significant expertise and ongoing maintenance. If you choose this route, use established tools like kOps, Kubespray, or kubeadm to simplify the process.

Vendor Lock-in and Kubernetes Portability

While Kubernetes itself is open source, managed Kubernetes services can introduce vendor lock-in. Workloads that depend on a provider's load balancers, storage classes, or identity integrations are harder to migrate to another platform. Consider your long-term portability requirements. If multi-cloud or hybrid cloud deployments are part of your strategy, prioritize solutions that offer greater flexibility.

Analyzing Kubernetes TCO

Finally, carefully analyze the total cost of ownership (TCO) for each Kubernetes solution. Managed services usually add provider fees on top of the underlying infrastructure, but they can save money in the long run by reducing operational overhead. Self-hosting avoids those fees and can look more cost-effective initially, but it requires ongoing investment in infrastructure, maintenance, and expertise. Factor in the cost of training, support, and potential downtime when calculating TCO.
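
A TCO comparison comes down to simple arithmetic: one-time costs plus recurring costs over a planning horizon, with staff time and downtime priced in alongside infrastructure and fees. The sketch below encodes that sum. `CostModel` and `total_cost_of_ownership` are hypothetical names. The input figures are placeholders invented only to exercise the calculation and say nothing about real managed or self-hosted costs.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Cost inputs for one Kubernetes option; every figure is a placeholder you supply."""
    upfront: float         # one-time costs: setup, migration, initial training
    infrastructure: float  # per year: compute, storage, networking
    fees: float            # per year: managed-service fees or support contracts
    engineer_fte: float    # full-time engineers needed to operate the platform
    downtime_hours: float  # expected outage hours per year


def total_cost_of_ownership(model: CostModel, years: int,
                            cost_per_fte: float, cost_per_downtime_hour: float) -> float:
    """Upfront costs plus `years` of recurring costs, including staff and downtime."""
    yearly = (model.infrastructure
              + model.fees
              + model.engineer_fte * cost_per_fte
              + model.downtime_hours * cost_per_downtime_hour)
    return model.upfront + years * yearly


# Placeholder inputs for a hypothetical option, purely to exercise the arithmetic.
option = CostModel(upfront=20_000, infrastructure=100_000, fees=10_000,
                   engineer_fte=1.0, downtime_hours=8)
print(total_cost_of_ownership(option, years=3, cost_per_fte=150_000,
                              cost_per_downtime_hour=2_000))  # 848000.0
```

Running the same function for each candidate, with your own measured or quoted figures, makes the trade-off between provider fees and in-house operating effort explicit.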

The Future of Cloud Native DevOps and Kubernetes

The cloud native landscape is constantly evolving, with Kubernetes at its core. Understanding emerging trends is crucial for DevOps teams to stay ahead and maximize Kubernetes' potential.

Serverless Kubernetes and Edge Computing

Serverless Kubernetes simplifies development by abstracting away infrastructure management, so developers can focus on code without managing servers. This is particularly relevant for edge computing, where resources are limited and low latency is critical. Projects like SpinKube bring WebAssembly-powered serverless workloads to Kubernetes, enabling high-performance microservices at the edge. This allows organizations to deploy applications closer to users, improving response times and user experience.

AI/ML Integrations with Kubernetes

AI and machine learning are increasingly integrated into DevOps. AI can automate repetitive tasks, analyze data to identify potential issues, and optimize resource allocation in Kubernetes clusters. This leads to more efficient workflows, improved security, and faster incident response. As AI matures, expect more sophisticated automation and intelligent decision-making within cloud native environments, freeing DevOps teams to focus on higher-level tasks and innovation.

Improving the Kubernetes Developer Experience

A positive developer experience is essential for efficient cloud native development. This means providing developers with the right tools, processes, and environments. Key aspects include robust tooling for Kubernetes deployments, continuous observability into application performance, integrated security checks, and a strong governance model. Prioritizing the developer experience accelerates development cycles, improves code quality, and fosters innovation. Streamlined workflows and self-service capabilities empower developers to work independently and efficiently. Consider incorporating tools like Backstage to create a unified developer portal for all your Kubernetes resources and services. This can significantly improve discoverability and reduce onboarding time for new developers.

Kubernetes in Multi-Cloud and Hybrid Environments

Multi-cloud and hybrid cloud deployments are increasingly common, offering flexibility and resilience. Kubernetes provides a consistent platform for managing applications across these environments. However, challenges remain in managing complexity, ensuring security, and maintaining consistent operations across different cloud providers. Tools and platforms that simplify multi-cloud and hybrid cloud management are essential for organizations looking to leverage these deployments while mitigating risks. This includes solutions for networking, security, and centralized management across multiple Kubernetes clusters. Adopting a platform like Plural can help address these challenges by providing a single pane of glass for managing Kubernetes deployments across multiple clouds and on-premises infrastructure.

Frequently Asked Questions

Why is Kubernetes so important for cloud native development?

Kubernetes allows you to automate many of the operational tasks involved in deploying, scaling, and managing cloud native applications. It provides a consistent platform across different environments, simplifies complex deployments, and enables faster release cycles. Without Kubernetes, managing the dynamic nature of cloud native applications at scale would be significantly more challenging.

What are the main benefits of using a managed Kubernetes service?

Managed Kubernetes services simplify Kubernetes operations by handling tasks like infrastructure management, upgrades, and scaling. This reduces operational overhead and lets your team focus on application development rather than infrastructure. While managed services may carry higher direct costs, they can save money in the long run by reducing the need for specialized in-house Kubernetes expertise.

How do I choose the right Kubernetes distribution for my organization?

Choosing the right Kubernetes distribution depends on several factors, including your organization's size, technical expertise, infrastructure requirements, and budget. Consider whether you need a managed service or prefer self-hosting, and evaluate the features, pricing, and support offered by different providers. If you're new to Kubernetes, a managed service with a simpler interface might be a good starting point. For larger organizations with specific security or compliance needs, a more specialized distribution might be necessary.

What are some common challenges when adopting Kubernetes, and how can I overcome them?

Kubernetes can be complex, and teams often face challenges related to managing its complexity, ensuring proper resource allocation, handling stateful applications, and addressing security concerns. Investing in training and upskilling your team is crucial. Leveraging managed services or platforms like Plural can simplify operations and reduce the learning curve. Using appropriate tools for monitoring, logging, and security can also help address these challenges.

What are some future trends in the Kubernetes ecosystem that I should be aware of?

Key trends include the rise of serverless Kubernetes, increased integration with AI and machine learning, a growing focus on improving the developer experience, and the continued adoption of Kubernetes in multi-cloud and hybrid environments. Staying informed about these trends will help you make strategic decisions about your Kubernetes deployments and ensure you're leveraging the latest advancements in the cloud native ecosystem.