How Kubernetes Works: A Guide to Container Orchestration

Understand how Kubernetes works to orchestrate containerized applications efficiently. Learn about its architecture, features, and benefits in this comprehensive guide.

Kubernetes has become the de facto standard for container orchestration, revolutionizing how we deploy and manage applications. But beneath its powerful capabilities lies a sophisticated system.

Whether you are a developer, operations engineer, or simply curious about how Kubernetes works, this guide offers a comprehensive overview, from fundamental principles to advanced concepts. While Kubernetes addresses many container management challenges, it introduces complexities of its own. We will also explore how platforms like Plural can help tackle that complexity.

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Kubernetes automates application management: Features like automated scaling, self-healing, and load balancing simplify deployments and improve application reliability. Understanding core concepts like pods, services, and deployments is essential for effective orchestration.

- A secure and observable system is crucial: Implement RBAC, secrets management, and container runtime security best practices. Integrate monitoring and logging tools to gain insights into application and cluster health, enabling proactive troubleshooting.

- Leverage the Kubernetes ecosystem: Tools like Helm simplify package management, while Prometheus and Grafana enhance monitoring. Adopt CI/CD tools like Argo CD for streamlined deployments and updates. Understanding these tools and advanced Kubernetes concepts unlocks greater efficiency and control.

- Simplify Kubernetes complexity: Managing Kubernetes environments at scale is a significant challenge. Plural simplifies Kubernetes management with pull request (PR) automation, AI-driven insights, and a single unified interface.

What is Kubernetes?

Kubernetes is open-source software for automating the deployment, scaling, and management of containerized applications. Instead of manually administering individual containers on separate machines, Kubernetes provides a centralized control plane to orchestrate clusters of machines. Originally developed at Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes has become the industry standard for container orchestration, allowing businesses to build and deploy applications faster and more efficiently.

Platforms such as Plural extend Kubernetes with AI-driven insights, helping users detect, diagnose, and fix complex issues across clusters while improving operational efficiency.

What can Kubernetes do for you?

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure no downtime. For example, if a container goes down, another container needs to start. Wouldn't it be easier if a system handled this behavior?

That's where Kubernetes comes to the rescue. Kubernetes provides you with:

- Automated Deployment and Scaling: Kubernetes automates the deployment, scaling, and management of containerized applications, so teams can ship updates quickly and reliably. Need to launch a new version? Kubernetes manages the rollout, replacing old instances with new ones incrementally. Experiencing increased traffic? With autoscaling configured, Kubernetes adds container instances to absorb the load.

- Self-Healing and Load Balancing: Kubernetes monitors the health of your containers and takes corrective action when necessary. If a container crashes, Kubernetes restarts it, and if a node fails, pods managed by a controller such as a Deployment are recreated on healthy nodes, which helps keep your applications available. Kubernetes also provides built-in load balancing: Services distribute incoming network traffic across multiple application instances. This keeps any single instance from becoming overloaded and helps maintain consistent performance, even under heavy load.

- Resource Management and Allocation: Kubernetes allocates computing resources such as CPU and memory to your containers based on the requests and limits you declare, and the scheduler places each pod on a node with enough free capacity. This reduces resource contention and improves infrastructure utilization. Kubernetes also simplifies persistent storage by letting you attach persistent volumes to your pods, so application data survives even if a container crashes or is rescheduled, which is crucial for data-driven applications.

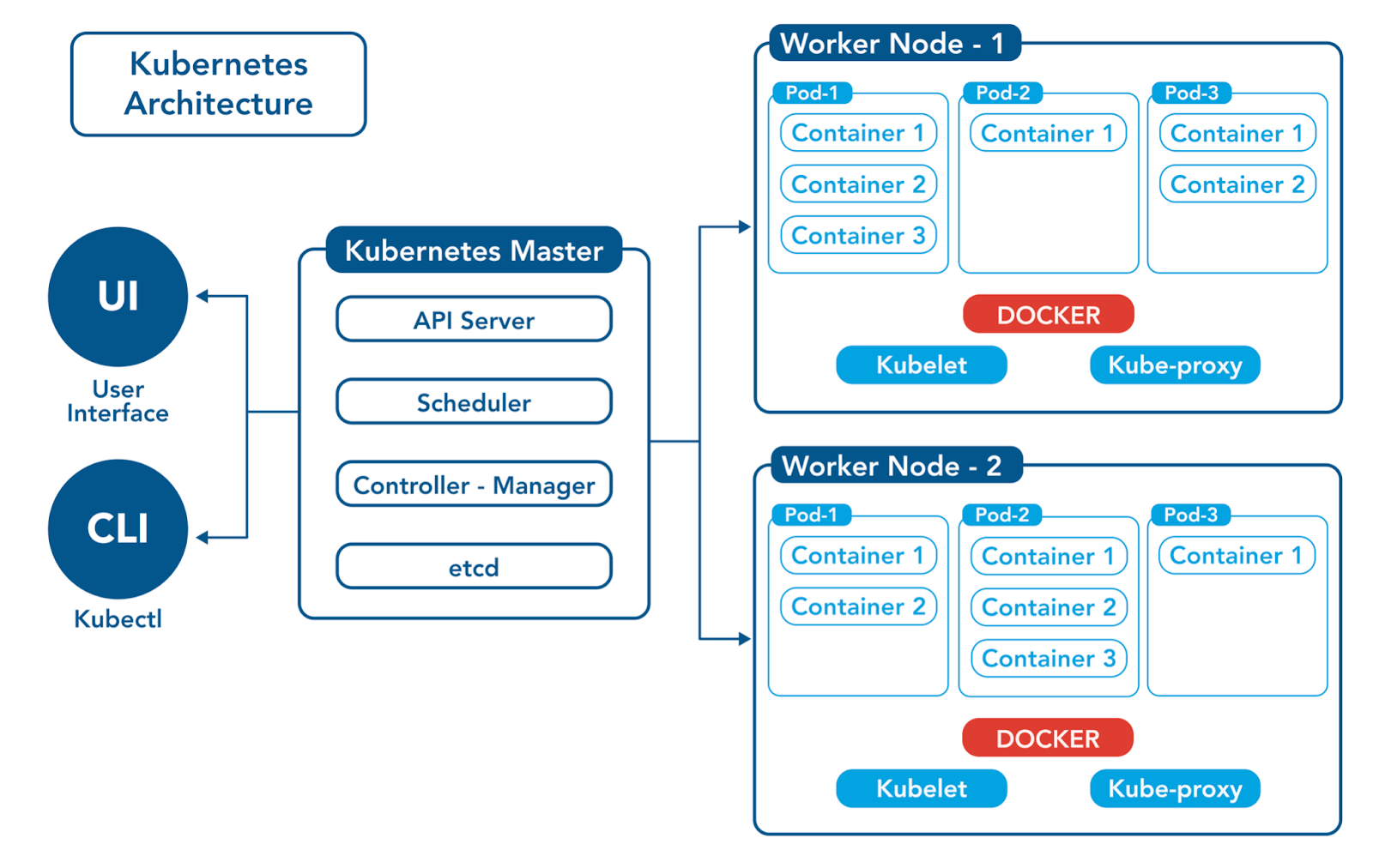

Kubernetes Architecture Explained

Kubernetes (K8s) orchestrates containerized applications across a cluster of machines. The cluster follows a master-worker architecture: the master nodes (called control plane nodes in current Kubernetes documentation) control the cluster, and the worker nodes run the applications.

Kubernetes Master Components

The master node is the control center of your Kubernetes cluster. It houses several key components that work together to manage the cluster's state and schedule workloads. These components include:

- API Server: The API server is the front-end for the Kubernetes control plane. All other components interact with the cluster through the API server. It exposes the Kubernetes API, enabling users and other components to submit requests and retrieve information about the cluster.

- Scheduler: The scheduler is responsible for placing pods onto worker nodes. It considers resource availability, node constraints, and data locality when making placement decisions. Efficient scheduling ensures optimal resource utilization across the cluster.

- Controller Manager: The controller manager runs a set of control loops that maintain the cluster's desired state. It monitors the current state and takes corrective actions to ensure it matches the desired state defined in your Kubernetes configurations.

- etcd: etcd is a distributed key-value store that holds the cluster's state information. This includes information about pods, services, deployments, and other Kubernetes objects. The API server interacts with etcd to read and write cluster data.
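The control loops run by the controller manager can be pictured with a small sketch. The function below is a simplified illustration, not Kubernetes code: the `reconcile` name and the pod naming scheme are assumptions made for the example. It compares a desired replica count with the pods currently running and returns the corrective actions.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions needed to move the current state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: request the missing ones (hypothetical naming scheme).
        return [f"create pod-{len(running_pods) + i}" for i in range(diff)]
    if diff < 0:
        # Too many pods: remove the surplus from the end of the list.
        return [f"delete {name}" for name in running_pods[diff:]]
    # Current state already matches the desired state.
    return []


print(reconcile(3, ["pod-0"]))
print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))
```

A real controller runs this kind of comparison continuously, reading and writing state through the API server rather than returning strings.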

Kubernetes Worker Node Components

Worker nodes are the workhorses of the Kubernetes cluster, running the actual applications. Each worker node runs several key components:

- Kubelet: The kubelet is an agent that runs on each worker node and communicates with the master node. It receives instructions from the master node about which pods to run and manages the lifecycle of those pods on the node. The kubelet ensures that containers within the pods are running and healthy.

- Kube-proxy: The kube-proxy is a network proxy that runs on each worker node. It maintains network rules on the node, enabling communication between pods and services across different nodes. It also handles load balancing of traffic to pods within a service.

- Container Runtime: The container runtime is the software responsible for running containers on the worker node. Popular container runtimes include containerd and CRI-O. Kubernetes supports multiple container runtimes through the Container Runtime Interface (CRI). Docker Engine can still be used through the cri-dockerd adapter, because the built-in dockershim was removed in Kubernetes 1.24.

Fundamentals of Kubernetes: Pods, Services, Deployments

These three concepts are fundamental to understanding how Kubernetes manages applications:

- Pods: Pods are the smallest deployable units in Kubernetes. A pod typically encapsulates a single container, although it can contain multiple containers that share resources and a network namespace. Pods are ephemeral and can be created, terminated, and rescheduled as needed.

- Services: Services provide a stable network endpoint for accessing a group of pods. They abstract away the dynamic nature of pods, allowing clients to access applications without needing to know the specific IP addresses or locations of the pods. Services also provide load balancing across multiple pods, ensuring high availability and scalability.

- Deployments: Deployments manage the rollout and updates of your applications. They define the desired state of your application, such as the number of replicas, the container image to use, and any other configuration parameters. Deployments automate the process of updating your application by gradually rolling out changes, minimizing downtime, and ensuring a smooth transition.
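To make the relationship between these three objects concrete, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints the Deployment as JSON, a format `kubectl apply -f` accepts alongside YAML. The `web` name, the `app: web` label, and the `nginx:1.27` image are placeholders chosen for illustration, and `selects` is a hypothetical helper that mimics label matching.

```python
import json

labels = {"app": "web"}  # placeholder label shared by the pods and the Service

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # desired number of pods
        "selector": {"matchLabels": labels},
        "template": {  # pod template used for every replica
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.27",
                     "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": 80}]},
}


def selects(selector: dict, pod_labels: dict) -> bool:
    """A selector matches when every key/value pair is present on the pod."""
    return all(pod_labels.get(k) == v for k, v in selector.items())


pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
print(selects(service["spec"]["selector"], pod_labels))
print(json.dumps(deployment, indent=2))
```

The Service finds its pods purely through labels, which is why it keeps working as the Deployment replaces individual pods.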

How Kubernetes Manages Containerized Apps

Kubernetes excels at simplifying the complexities of running containerized applications. It handles the entire application lifecycle, from initial deployment to ongoing management and scaling.

Container Scheduling and Orchestration

Kubernetes automates the deployment, scaling, and management of containerized applications. A Kubernetes cluster consists of a master node (the control center) and worker nodes (where your applications run). The master node orchestrates everything, distributing tasks and resources among the worker nodes. A pod encapsulates one or more containers, sharing resources like storage and network. This design allows containers within a pod to communicate efficiently and share data seamlessly.
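As a rough illustration of how a pod gets assigned to a worker node, the sketch below filters out nodes that cannot fit the pod and then scores the rest. The real scheduler also works in a filter-then-score fashion, but the `Node` class, the `schedule` function, and the scoring rule (prefer the node with the most CPU left over) are simplifying assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


def schedule(cpu_m: int, mem_mi: int, nodes: list[Node]) -> Optional[str]:
    """Pick a node for a pod requesting cpu_m millicores and mem_mi MiB."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # no node fits; the pod would stay Pending
    # Score: prefer the node with the most resources left after placement.
    best = max(feasible,
               key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mi - mem_mi))
    return best.name


nodes = [Node("a", 500, 1024), Node("b", 2000, 4096), Node("c", 4000, 512)]
print(schedule(1000, 1024, nodes))
print(schedule(250, 256, nodes))
```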

Application Lifecycle Management

Kubernetes simplifies application lifecycle management by letting you declare the desired state of your application: the number of replicas, the container images, and how updates should be rolled out. Kubernetes then works continuously to make the actual state match the desired state. It also supports automated rollouts and rollbacks. You can deploy a new version in a controlled manner, gradually replacing old pods with new ones. If issues arise, a Deployment keeps its revision history, so you can roll back to the previous version (for example, with kubectl rollout undo), minimizing downtime. A stalled rollout is reported as failed rather than reverted automatically, so fully automatic rollback requires additional tooling.
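The gradual replacement of old pods with new ones can be sketched as a small simulation. It is a simplified model that assumes new pods become ready instantly; the `rolling_update` function is hypothetical, while `max_surge` and `max_unavailable` mirror the `maxSurge` and `maxUnavailable` settings of a Deployment's rolling update strategy.

```python
def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> list[tuple[int, int]]:
    """Return the (old_pods, new_pods) counts after each rollout step."""
    if max_surge == 0 and max_unavailable == 0:
        # Kubernetes rejects this combination because no progress is possible.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Add new pods without exceeding replicas + max_surge in total.
        can_add = min(replicas - new, replicas + max_surge - (old + new))
        new += max(can_add, 0)
        # Remove old pods while keeping replicas - max_unavailable running.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(can_remove, 0)
        steps.append((old, new))
    return steps


for old, new in rolling_update(4):
    print(f"old={old} new={new}")
```

With four replicas and the defaults used here, the total never exceeds five pods and never drops below four, which is what keeps the application serving traffic during the update.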

Monitoring and Health Checks

Kubernetes continuously monitors the health of your applications through probes that you configure on each container. If a container fails its liveness probe, the kubelet restarts it. If it fails its readiness probe, the pod is removed from Service endpoints until it recovers. Pods lost to node failures are replaced by their controller. This self-healing capability is crucial for maintaining application availability and reliability.
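A health check is configured per container as a probe. The sketch below shows a liveness probe definition as a Python dictionary, using field names from the Kubernetes probe specification, together with a hypothetical `should_restart` helper that models how consecutive failures are counted against `failureThreshold`. The `/healthz` path and port 8080 are assumptions about the application.

```python
liveness_probe = {
    "httpGet": {"path": "/healthz", "port": 8080},  # assumed app endpoint
    "initialDelaySeconds": 5,   # wait before the first check
    "periodSeconds": 10,        # time between checks
    "failureThreshold": 3,      # consecutive failures before a restart
}


def should_restart(results: list[bool], failure_threshold: int) -> bool:
    """True once failure_threshold consecutive probe failures are observed."""
    consecutive = 0
    for ok in results:
        # A success resets the counter; a failure increments it.
        consecutive = 0 if ok else consecutive + 1
        if consecutive >= failure_threshold:
            return True
    return False


threshold = liveness_probe["failureThreshold"]
print(should_restart([True, False, False, True, False], threshold))
print(should_restart([True, False, False, False], threshold))
```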

Securing a Kubernetes Cluster

Security is a critical aspect of any Kubernetes deployment. A robust security posture protects your applications and infrastructure from unauthorized access, data breaches, and other threats. This section covers three key areas of Kubernetes security: Role-Based Access Control (RBAC), Secrets Management, and Container Runtime Security.

Role-Based Access Control (RBAC)

RBAC is a fundamental security feature that governs access to resources within your Kubernetes cluster. It lets you define granular permissions based on roles, ensuring that users and applications have only the privileges they need. Following this principle of least privilege limits the impact of compromised credentials or malicious actors. Platforms like Plural further simplify RBAC management by integrating with your existing identity provider, enabling a streamlined Kubernetes SSO experience. For a deeper dive into RBAC, refer to Plural's article Kubernetes RBAC Authorization: The Ultimate Guide.
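As an illustration of least privilege, the sketch below defines a read-only Role for pods and a RoleBinding that grants it to one user, both as Python dictionaries in the shape of the `rbac.authorization.k8s.io/v1` API. The `dev` namespace, the `pod-reader` role, and the user `jane` are placeholders, and `allowed` is a hypothetical helper that only approximates how rules are evaluated.

```python
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "dev", "name": "pod-reader"},
    "rules": [
        # "" is the core API group, which contains pods.
        {"apiGroups": [""], "resources": ["pods"],
         "verbs": ["get", "list", "watch"]}
    ],
}

role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": "dev", "name": "read-pods"},
    "subjects": [{"kind": "User", "name": "jane",
                  "apiGroup": "rbac.authorization.k8s.io"}],
    "roleRef": {"kind": "Role", "name": "pod-reader",
                "apiGroup": "rbac.authorization.k8s.io"},
}


def allowed(role: dict, verb: str, resource: str) -> bool:
    """Check whether any rule in the role permits the verb on the resource."""
    return any(resource in rule["resources"] and verb in rule["verbs"]
               for rule in role["rules"])


print(allowed(role, "list", "pods"))
print(allowed(role, "delete", "pods"))
```

Because RBAC is purely additive, anything not granted by a rule, such as deleting pods here, is denied.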

Secrets Management

Protecting sensitive information like passwords, API keys, and certificates is paramount. Kubernetes offers a dedicated Secrets API for storing and managing such data separately from application code and container images. When deploying applications, you can reference secrets as environment variables or volume mounts, allowing your applications to access sensitive information without hardcoding it. Keep in mind that Secret values are only base64-encoded and, by default, stored unencrypted in etcd, so enable encryption at rest and restrict access with RBAC. For a practical guide on Kubernetes Secrets, refer to Kubernetes Secrets: A Practical Guide by Plural.
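The sketch below builds a Secret manifest as a Python dictionary and shows that the values under `data` are base64-encoded, which is an encoding rather than encryption. The `make_secret` helper, the `db-credentials` name, and the sample password are assumptions made for the example.

```python
import base64


def make_secret(name: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: base64.b64encode(value.encode()).decode()
                 for key, value in values.items()},
    }


secret = make_secret("db-credentials", {"password": "s3cr3t"})
encoded = secret["data"]["password"]
# Anyone who can read the Secret can trivially decode it.
decoded = base64.b64decode(encoded).decode()
print(encoded != "s3cr3t", decoded == "s3cr3t")
```

This is why access to Secrets should be limited through RBAC and why encryption at rest is worth enabling for the cluster's data store.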

Container Runtime Security

Container runtime security protects the integrity of the containers running within your cluster. This involves several key practices. First, regularly scan container images for vulnerabilities using tools like Trivy. This helps identify and mitigate potential security risks before deployment. Second, implement security policies to restrict container behavior. Pod Security Admission (PSA) enforces the Pod Security Standards (privileged, baseline, and restricted) at the namespace level, limiting settings such as privileged mode, host namespace access, and added Linux capabilities. Resource usage and network access are governed separately, through resource quotas and network policies. Finally, continuously monitor container runtime behavior to detect anomalies and potential security breaches. Tools like Falco can help identify suspicious activity within your containers, enabling rapid response to security incidents.
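Pod Security Admission is configured through labels on a namespace. The sketch below generates those labels and attaches them to a Namespace manifest. The label prefix `pod-security.kubernetes.io/` and the level and mode names come from Kubernetes; the `psa_labels` helper and the `payments` namespace are hypothetical.

```python
# Levels defined by the Pod Security Standards.
LEVELS = ("privileged", "baseline", "restricted")
# Modes supported by Pod Security Admission.
MODES = ("enforce", "audit", "warn")


def psa_labels(**modes: str) -> dict[str, str]:
    """Build namespace labels such as pod-security.kubernetes.io/enforce."""
    labels = {}
    for mode, level in modes.items():
        if mode not in MODES or level not in LEVELS:
            raise ValueError(f"unsupported setting: {mode}={level}")
        labels[f"pod-security.kubernetes.io/{mode}"] = level
    return labels


namespace = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {
        "name": "payments",  # hypothetical namespace name
        # Reject pods that violate baseline, warn about restricted violations.
        "labels": psa_labels(enforce="baseline", warn="restricted"),
    },
}
print(namespace["metadata"]["labels"])
```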

Advanced Kubernetes Concepts

As you become more familiar with Kubernetes, understanding these advanced concepts will allow you to leverage its full potential for managing containerized applications.

Deployment Strategies

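Kubernetes supports several deployment strategies to control how application updates roll out. Deployments natively provide rolling updates (the default), while blue/green and canary releases are patterns built on top of Deployments and Services or with additional tooling. These strategies minimize downtime and risk by allowing you to introduce new versions gradually.

- Rolling Updates: Gradually update your application by replacing pods with newer versions in small batches, with the batch size controlled by the maxSurge and maxUnavailable settings. This ensures a smooth transition and allows you to monitor the new version's performance.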

- Blue/Green Deployments: Maintain two identical environments—blue (live) and green (staging). Deploy the new version to green, test it, and then switch traffic from blue to green. This allows for rapid rollback if issues arise.

- Canary Releases: Deploy a small percentage of pods with the new version alongside the existing version. Monitor the canary pods and gradually increase their proportion if they perform well. This allows you to test new versions in production with minimal user impact.
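For a replica-based canary, the share of traffic reaching the new version can be estimated from pod counts. The sketch below assumes a single Service that spreads requests roughly evenly across all ready pods of both versions; the `canary_share` function is hypothetical, and real traffic distribution can deviate from this estimate.

```python
def canary_share(stable_replicas: int, canary_replicas: int) -> float:
    """Approximate fraction of traffic served by canary pods."""
    total = stable_replicas + canary_replicas
    # With no pods at all there is no traffic to split.
    return canary_replicas / total if total else 0.0


# Hypothetical promotion plan: (stable, canary) replica counts per stage.
for stable, canary in [(9, 1), (5, 5), (0, 10)]:
    print(f"stable={stable} canary={canary} share={canary_share(stable, canary)}")
```

Finer-grained traffic splitting that is independent of replica counts generally requires an ingress controller, service mesh, or a dedicated rollout tool.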

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling (HPA) automatically adjusts the number of pod replicas based on resource consumption, such as CPU or memory usage. Define target metrics and thresholds, and Kubernetes will automatically scale your application up or down. This ensures optimal resource utilization and maintains application performance under varying loads.
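The core HPA calculation scales the replica count by the ratio of the observed metric to its target and rounds up. The sketch below implements that formula with clamping to minimum and maximum replica counts. The function name and default bounds are assumptions, and the tolerance band and stabilization window of the real autoscaler are deliberately left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale up.
print(desired_replicas(4, 90, 60))
# 4 replicas at 30% average CPU with a 60% target: scale down.
print(desired_replicas(4, 30, 60))
```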

Cluster Autoscaling

Cluster Autoscaling dynamically adjusts the size of your Kubernetes cluster based on the resource requests of your deployments. When pods cannot be scheduled due to insufficient resources, the cluster autoscaler adds new nodes. Conversely, the autoscaler can remove nodes to save costs if they are underutilized. This ensures that your cluster has the right amount of resources available, optimizing cost-efficiency and preventing resource starvation.
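The scale-up decision can be approximated as a packing problem: how many new nodes are needed to fit the pods that could not be scheduled? The sketch below uses a first-fit-decreasing heuristic on CPU requests only. It is a simplified stand-in, not the cluster autoscaler's actual algorithm, and the `nodes_to_add` function is hypothetical.

```python
def nodes_to_add(pending_cpu_m: list[int], node_cpu_m: int) -> int:
    """Estimate new nodes needed for pending pods (CPU in millicores)."""
    free = []  # remaining CPU on each hypothetical new node
    for request in sorted(pending_cpu_m, reverse=True):
        if request > node_cpu_m:
            raise ValueError("pod does not fit on an empty node")
        for i, capacity in enumerate(free):
            if capacity >= request:
                free[i] -= request  # place the pod on an existing new node
                break
        else:
            # No new node has room, so another node is needed.
            free.append(node_cpu_m - request)
    return len(free)


print(nodes_to_add([500, 1500, 1000, 1000], 2000))
```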

The Kubernetes Ecosystem and Tools

Kubernetes boasts a rich ecosystem of tools that extend its core functionality and simplify management tasks. These tools address various aspects of the Kubernetes lifecycle, from deploying applications to monitoring their health and ensuring continuous delivery.

Package Management with Helm

Managing Kubernetes deployments often involves complex YAML manifests for defining resources. Helm acts as a package manager, streamlining this process. Think of it like apt or yum for your Kubernetes cluster, enabling you to define, install, and upgrade even intricate applications with ease. This is particularly useful for managing dependencies and ensuring consistent deployments across different environments. For example, you can use Helm to deploy a complex application like a web server with a database backend, managing all the necessary resources (Deployments, Services, ConfigMaps, and so on) as a single unit.

Monitoring and Logging Solutions

Observability is crucial in dynamic environments like Kubernetes. Monitoring and logging solutions provide insights into the health, performance, and resource utilization of your applications and cluster. Prometheus is a popular open-source monitoring system commonly used in Kubernetes deployments, offering a robust time-series database and alerting capabilities. When paired with a visualization tool like Grafana, you gain a comprehensive view of your cluster's performance, enabling proactive identification and resolution of potential issues.

CI/CD Integration

Continuous integration and continuous delivery (CI/CD) are essential practices for modern software development. Kubernetes facilitates CI/CD integration by automating the deployment process. Tools like Argo CD and Jenkins can be integrated with Kubernetes to create automated pipelines for building, testing, and deploying applications. By leveraging Kubernetes' declarative nature and API-driven approach, CI/CD pipelines can achieve consistent and repeatable deployments, accelerating the software development lifecycle.

Argo CD is a strong tool, but larger organizations often run into difficulties when operating it at scale. Platforms like Plural aim to address these.

Challenges of Kubernetes

The shift to Kubernetes creates a new set of challenges for your organization, and they become harder to manage as both the number and the complexity of clusters grow.

- Complexity of Management: Managing Kubernetes, especially at scale, can be complex. As deployments grow, handling numerous containers, nodes, clusters, and services requires specialized tools and expertise.

- Monitoring Difficulties: As Kubernetes environments become more complex, traditional monitoring tools alone are insufficient to address the challenges of cluster sprawl, configuration inconsistencies, and operational overhead.

- Security and Networking Issues: Securing Kubernetes involves addressing challenges related to access control, network policies, and container vulnerabilities. Implementing robust security practices, such as Role-Based Access Control (RBAC), network segmentation, and image scanning, is crucial.

- Resource Management: Efficiently managing resources in Kubernetes can be challenging. Ensuring applications have the necessary resources (CPU, memory, storage) without over-provisioning requires careful planning.

- Need for Expertise: Kubernetes has a steep learning curve. Managing it effectively requires specialized knowledge. Building internal expertise or leveraging managed Kubernetes services can help overcome this challenge.

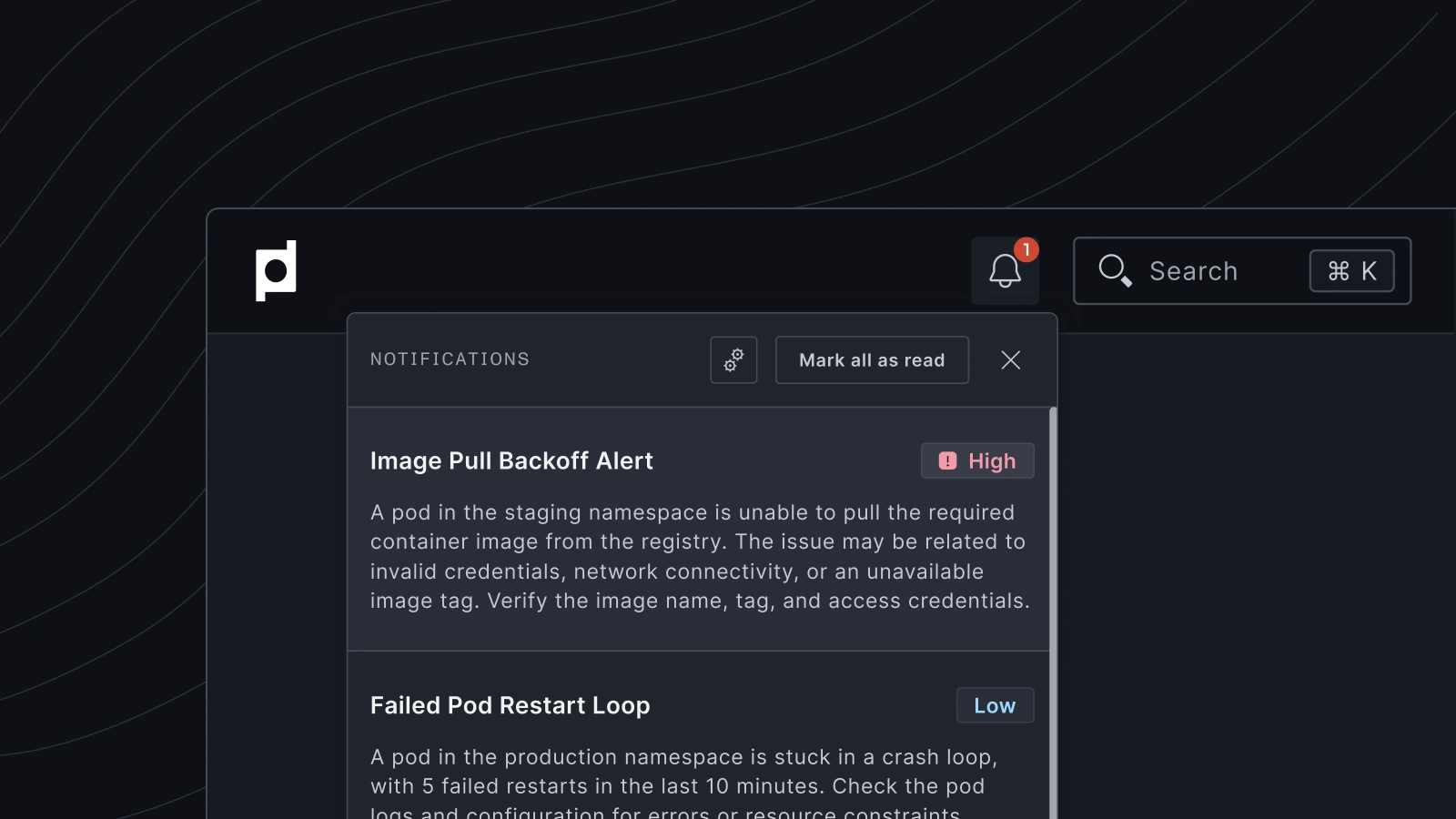

Simplify Kubernetes Complexity with Plural

Managing K8s environments at scale is a significant challenge. From navigating heterogeneous environments to addressing a global skills gap in Kubernetes expertise, organizations face complexity that can slow innovation and disrupt operations.

Plural helps teams manage multi-cluster, complex K8s environments at scale. By combining an intuitive, single pane of glass interface with advanced AI troubleshooting capabilities that provide a unique vantage point into your Kubernetes environment, Plural enables you to save time, focus on innovation, and reduce risk across your organization.

Streamline resource updates and deployments

Manual K8s operations can lead to errors, delays, and inefficiency. Plural leverages PR automation to eliminate tedious manual work, ensuring consistency and speed.

- PR-driven workflows to automate resource updates, deployments, and scaling

- Sequential updates across development, staging, and production environments for safe rollouts

- Full audit trails of every change are stored in Git for complete transparency

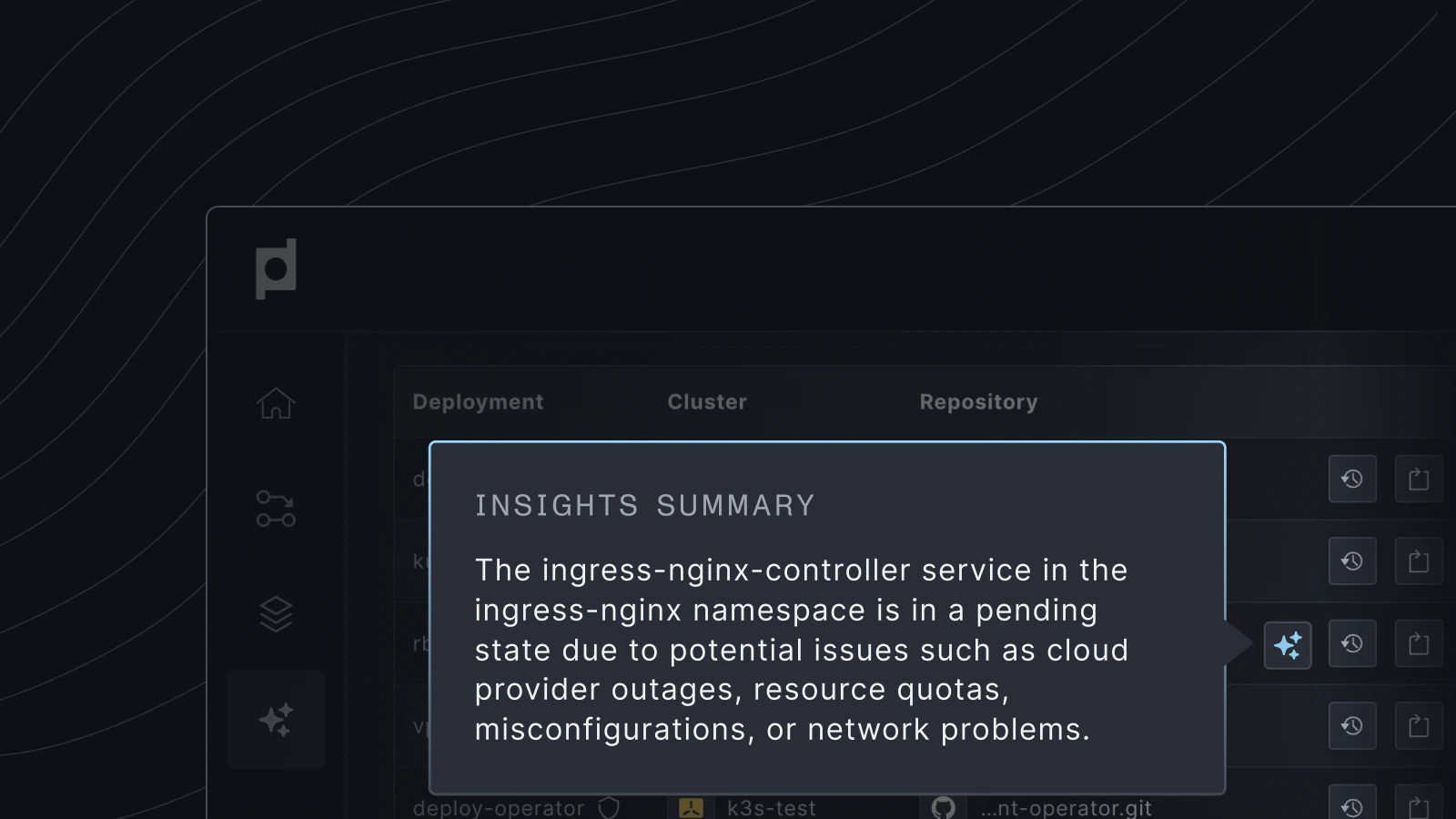

AI-driven insights for smarter operations

Organizations struggle to find the expertise needed to manage complex K8s environments. Plural’s AI capabilities reduce the learning curve and help teams avoid potential issues.

- Proactive detection of anomalies and potential failures using real-time telemetry

- AI-powered diagnostics that provide actionable root-cause analysis and resolution steps

- Auto-generated recommendations delivered directly to teams

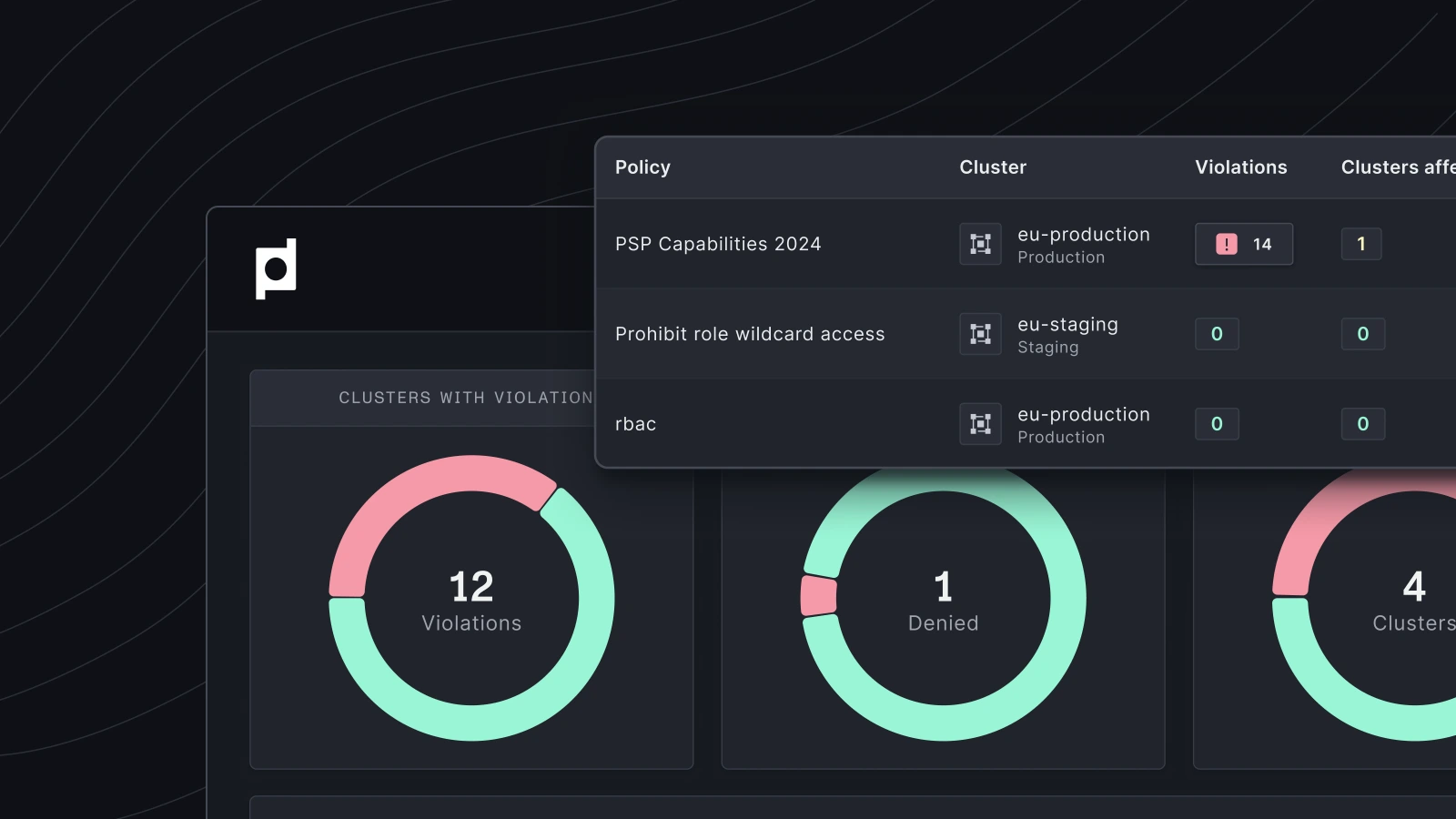

Simplify K8s management with a unified interface

K8s complexity often stems from disconnected tools and fragmented workflows. Plural centralizes everything into a single, intuitive interface, making it easier to monitor and act.

- Single-pane-of-glass dashboard for monitoring cluster health, logs, and resource usage

- Integrated policy enforcement, ensuring compliance at every stage

- Flexible tagging and templating to streamline configuration and reduce rework

Conquer heterogeneous environments with confidence

Managing K8s across on-premises, multi-cloud, and hybrid setups introduces challenges. Plural bridges the gap, providing the tools to standardize and scale across environments.

- GitOps-driven workflows to maintain consistency across heterogeneous environments

- Automated upgrades and compatibility checks for seamless operations

- Centralized policy enforcement to ensure secure, compliant configurations everywhere

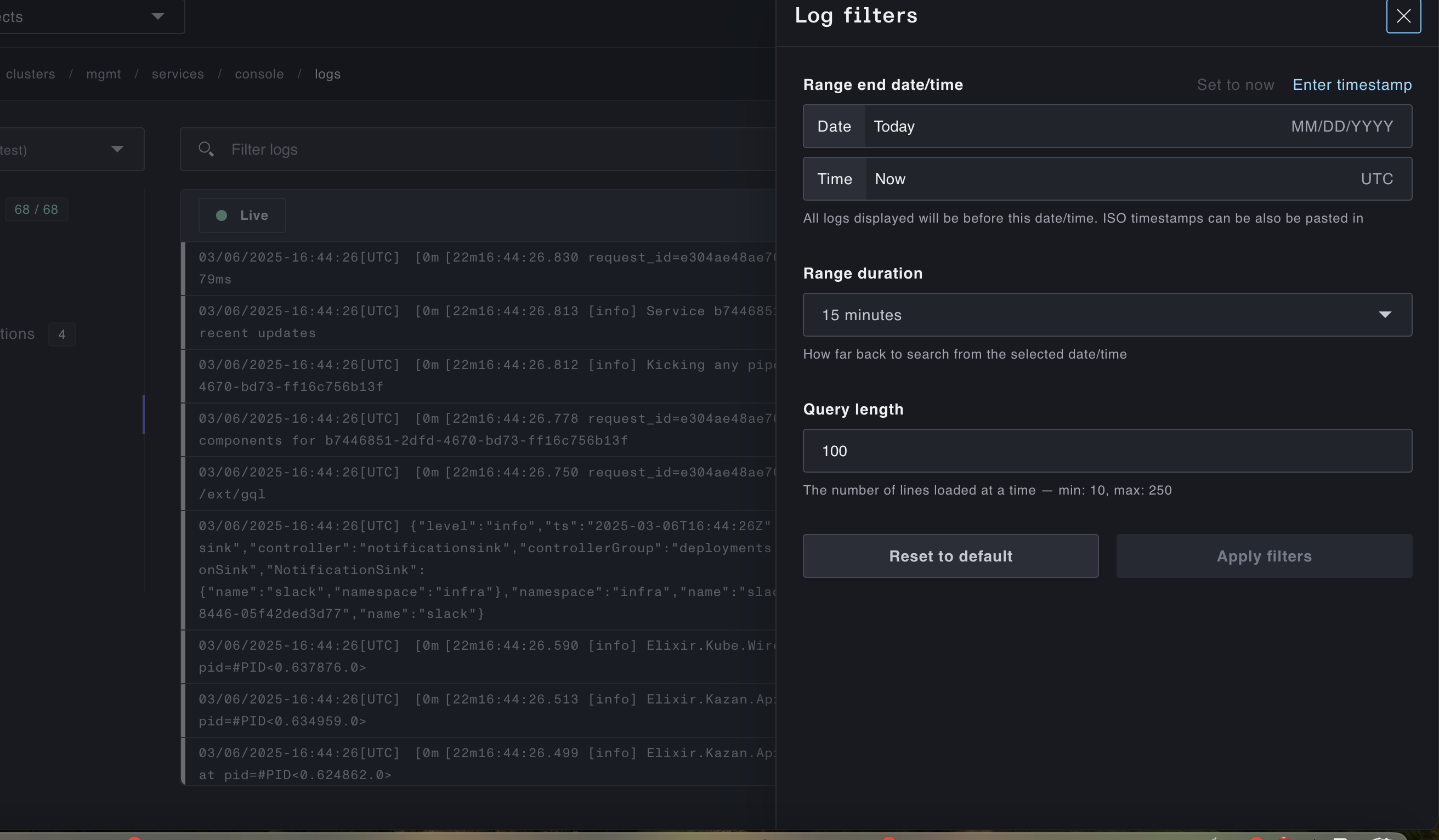

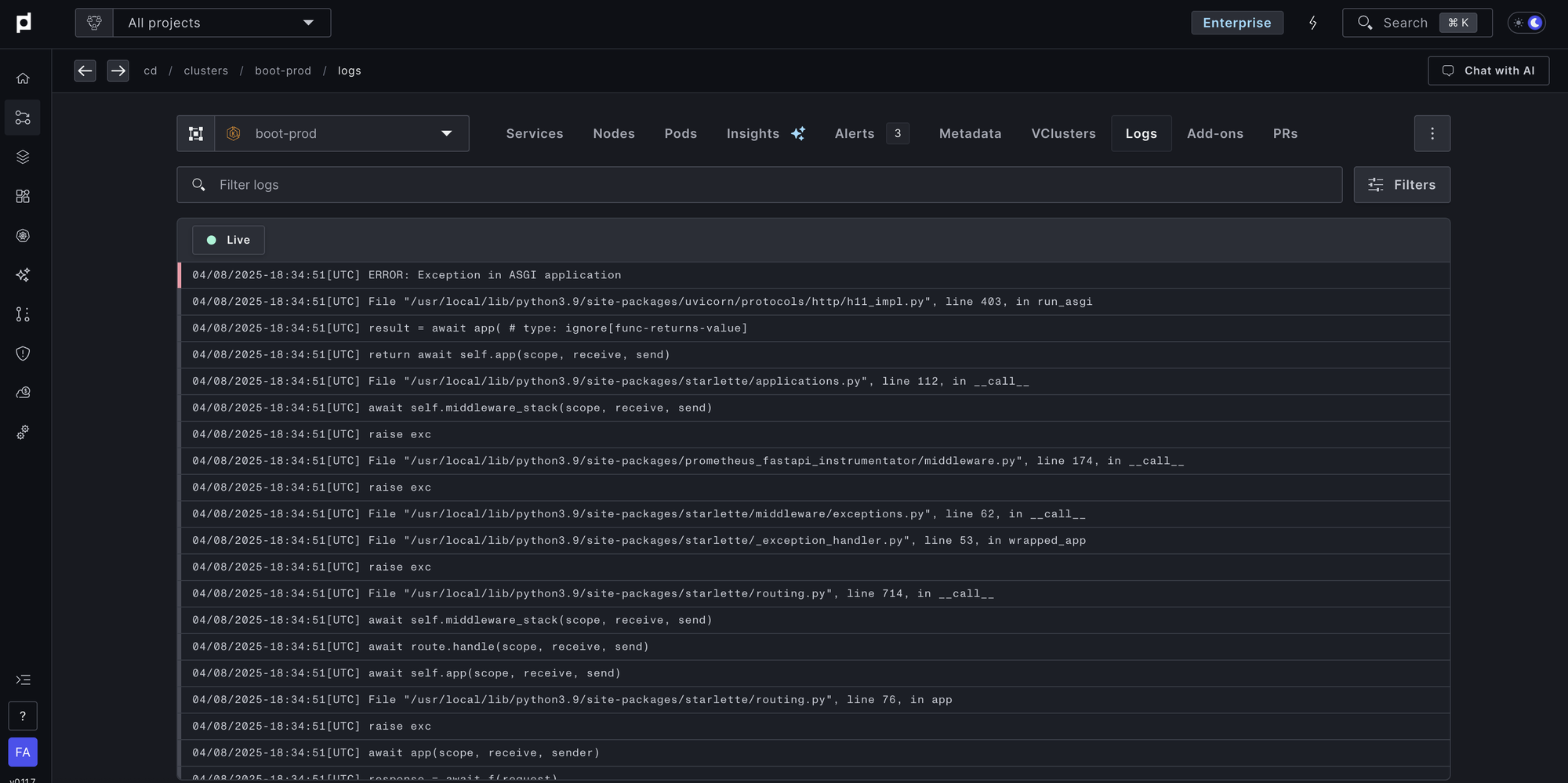

One console, all your logs

Most teams running Kubernetes face a common headache: logs are scattered across clusters, services, and tools. Platform teams spend too much time setting up log aggregation and governance, while developers waste hours jumping between

Plural offers built-in log aggregation that lets you view and search logs directly in the Plural console. You can query logs at the service and cluster levels, with proper access controls in place.

Whether you're just starting with Kubernetes or looking to scale your container deployments, Plural's approach helps you focus on building applications rather than troubleshooting infrastructure. Learn more at Plural.sh or book a demo!

Frequently Asked Questions

What are the core components of a Kubernetes master node?

The master node is the control center of a Kubernetes cluster. Its key components include the API server (the communication hub), the scheduler (which assigns pods to worker nodes), the controller manager (maintaining the desired cluster state), and etcd (the key-value store holding cluster data). These components work together to manage the cluster and schedule workloads.

How does Kubernetes handle persistent storage?

Kubernetes uses Persistent Volumes (PVs) to represent underlying storage resources and Persistent Volume Claims (PVCs) to represent requests for that storage, which pods then mount as volumes. This abstraction allows developers to request storage without managing the underlying infrastructure. Various volume types, such as NFS and cloud provider volumes, cater to different storage needs.
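As a sketch of how a claim relates to a volume, the example below defines a PVC manifest as a Python dictionary, the volume entry a pod would use to reference it, and a simplified matching check. The `app-data` name and the 10Gi size are placeholders. The `can_bind` helper is hypothetical and considers only capacity and access modes, whereas real binding also takes storage classes and selectors into account.

```python
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

# Entry for a pod's spec.volumes list that references the claim by name.
pod_volume = {
    "name": "data",
    "persistentVolumeClaim": {"claimName": pvc["metadata"]["name"]},
}


def can_bind(pv_capacity_gi: int, pv_modes: list[str],
             claim_gi: int, claim_modes: list[str]) -> bool:
    """A volume can satisfy a claim if it is large enough and offers its modes."""
    return pv_capacity_gi >= claim_gi and set(claim_modes) <= set(pv_modes)


print(can_bind(20, ["ReadWriteOnce"], 10, pvc["spec"]["accessModes"]))
print(can_bind(5, ["ReadWriteOnce"], 10, pvc["spec"]["accessModes"]))
```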

What are the main differences between pods, services, and deployments?

Pods are the smallest deployable units, encapsulating one or more containers. Services provide stable network endpoints for accessing a group of pods, abstracting their dynamic nature. Deployments manage the rollout and updates of applications, ensuring the desired state is maintained. They work together to provide a scalable and reliable application platform.

How does Kubernetes ensure application security?

Kubernetes employs several security mechanisms. Role-Based Access Control (RBAC) governs access to cluster resources, limiting privileges to only what's necessary. Secrets management securely stores sensitive data like passwords and API keys. Network policies control traffic flow between pods, enhancing isolation and security.

What are some common challenges in managing Kubernetes, and how can they be addressed?

Managing Kubernetes can be complex, especially at scale. Monitoring the dynamic environment requires robust tooling. Security and networking require careful configuration. Resource management and the need for specialized expertise are also common hurdles. Solutions include adopting management platforms like Plural, using monitoring tools like Prometheus and Grafana, implementing strong security practices, and investing in team training.
