Modern Solutions for Managing Kubernetes Clusters

Master Kubernetes cluster management with practical solutions for optimizing resources, enhancing security, and simplifying operations.

Kubernetes offers incredible power and flexibility, but managing clusters can be a challenge. This guide provides practical solutions and best practices to simplify your Kubernetes operations. We'll cover key aspects of cluster management, explore common pitfalls, and delve into advanced techniques, including dependency management, so you can manage your clusters efficiently and unlock the full potential of Kubernetes.

Key Takeaways

- Effective Kubernetes management hinges on mastering resource allocation, monitoring, security, and scaling. Regular updates, high availability, and disaster recovery planning are also crucial for minimizing downtime and protecting your systems.

- Simplify Kubernetes complexities by leveraging the right tools and automation. Address challenges like operational overhead and multi-cluster management with purpose-built solutions to streamline workflows and optimize resource use. Prioritize security and compliance best practices to protect your applications and data.

- Plural simplifies Kubernetes operations, allowing your team to focus on application development. Features like automated maintenance, dependency management, and streamlined upgrades free up valuable time and resources.

Understanding Kubernetes Cluster Management

What is Kubernetes Cluster Management?

Kubernetes cluster management refers to all the processes and tools you use to administer a Kubernetes cluster. A Kubernetes cluster is a set of machines, called nodes, that run containerized applications. Kubernetes itself is an open-source container orchestration platform that automates the deployment, scaling, and management of these application containers. Think of it as a sophisticated conductor orchestrating a complex symphony of software containers. You can learn more about the basics of Kubernetes clusters from AWS.

Why is Managing Kubernetes Clusters Important?

Kubernetes simplifies container management at scale. This offers significant benefits, including scalability, high availability, and automated management. These features are crucial for organizations that need to deploy applications quickly and efficiently while maintaining reliability. Without proper cluster management, your containerized applications could become a chaotic mess. Kubernetes helps keep things running smoothly, allowing you to focus on building and deploying great software. For a deeper look into the advantages of using Kubernetes, check out this AWS resource.

Key Components of Kubernetes Cluster Management

A Kubernetes cluster consists of a control plane and worker nodes. Worker nodes are the workhorses of your cluster, hosting the Pods, which are the smallest deployable units in Kubernetes and contain your application workloads. The control plane acts as the brain, managing the worker nodes and Pods, ensuring that the desired state of the cluster is maintained. This architecture ensures that your applications run reliably and efficiently. You can explore the Kubernetes cluster architecture in more detail.

The control plane itself has several key components, each with a specific role:

- kube-apiserver: This is the front end of the control plane, exposing the Kubernetes API. It's the primary way you interact with your cluster.

- etcd: This is a consistent and highly available key-value store used to hold all cluster data. It's essential for maintaining the state of your Kubernetes environment.

- kube-scheduler: This component intelligently selects nodes for newly created Pods based on various factors like resource availability and constraints.

- kube-controller-manager: This runs controller processes that manage different aspects of the cluster, ensuring its smooth operation. It's the behind-the-scenes manager keeping everything in check. For more information on these components, see the official Kubernetes documentation.

Control Plane Variations and Management Tools

The control plane is the brain of your Kubernetes cluster, making decisions about where your applications run and how they interact. It’s responsible for scheduling workloads, maintaining the desired state, and responding to changes within the cluster. Understanding the different ways you can deploy and manage the control plane is crucial for optimizing your Kubernetes setup.

You have several options for deploying the control plane: running it on dedicated machines outside the worker nodes, deploying it as static pods directly on the nodes, or using a managed Kubernetes service from a cloud provider like Amazon EKS, Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS). Each option presents trade-offs in terms of complexity, cost, and maintenance. Managed services offer convenience but may lead to vendor lock-in, while self-hosting gives you more control but requires additional operational overhead.

Managing the control plane effectively involves using the right tools. Kubeadm helps automate the process of setting up and configuring the control plane. For AWS deployments, kops simplifies cluster management. Kubespray provides a production-ready setup for deploying Kubernetes on various infrastructure providers. These tools, and others like Plural, can significantly reduce the complexity of managing your control plane, allowing you to focus on deploying and scaling your applications. Plural, for example, offers a unified platform for managing Kubernetes deployments, including streamlined control plane operations.

Kubernetes Add-ons: Extending Functionality

Kubernetes provides a solid foundation for container orchestration, but its real power lies in its extensibility through add-ons. Add-ons provide essential services, enhancing the core capabilities of Kubernetes and simplifying application management and monitoring.

The DNS service is mission-critical. It allows your applications to find and communicate with each other within the cluster using names, rather than relying on IP addresses. This simplifies service discovery and makes your applications more resilient and easier to scale.

The Kubernetes Dashboard offers a web-based interface for managing your cluster. You can visualize deployments, monitor resource usage, and troubleshoot issues, providing valuable insights into your cluster's health and performance. Plural also offers an embedded Kubernetes dashboard with enhanced features and integrated access control.

Monitoring and logging are essential for maintaining application health and performance. Monitoring tools collect metrics about resource usage, helping you identify bottlenecks and optimize performance. Logging provides a record of events, crucial for debugging and understanding application behavior. Consider integrating these add-ons with a comprehensive platform like Plural for a unified view of your Kubernetes operations.

Effectively Managing Your Kubernetes Cluster

Once your Kubernetes cluster is up and running, the real work begins. Effectively managing a cluster involves several key areas, each crucial for application performance and stability. Let's break down these core components of Kubernetes cluster management.

Allocating and Optimizing Kubernetes Cluster Resources

As described earlier, worker nodes host the pods that run your application workloads, and the control plane manages those nodes and pods, as detailed in the Kubernetes documentation. Efficiently allocating and optimizing resources across your nodes is essential. Think of it like managing the space in your apartment: you want to make sure everything fits comfortably and that no single area is overloaded. This involves defining resource requests and limits for each pod: requests tell the scheduler how much CPU and memory to reserve, and limits cap what a container can consume, so applications get the resources they need without starving others. Regularly reviewing and adjusting these allocations as your application evolves helps prevent performance bottlenecks and keeps costs under control.
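To make requests and limits concrete, here is a minimal sketch that builds a Pod manifest in Python and prints it as JSON, which Kubernetes accepts alongside YAML. The pod name, image reference, and resource values are illustrative assumptions, not recommendations for any particular workload.

```python
import json


def pod_manifest(name, image, cpu_request, mem_request, cpu_limit, mem_limit):
    """Build a single-container Pod manifest with resource requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        # Requests: what the scheduler reserves on a node.
                        "requests": {"cpu": cpu_request, "memory": mem_request},
                        # Limits: the ceiling the container may consume.
                        "limits": {"cpu": cpu_limit, "memory": mem_limit},
                    },
                }
            ]
        },
    }


# Hypothetical name, image, and sizes, chosen only for illustration.
manifest = pod_manifest("web", "example.com/web:1.0", "250m", "128Mi", "500m", "256Mi")
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with kubectl. Starting with modest requests and adjusting them from observed usage is usually easier than guessing large values up front.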

Monitoring and Logging Your Kubernetes Clusters

You can't manage what you can't see. Monitoring and logging provide essential visibility into your cluster's health and performance. Kubernetes offers tools for logging, monitoring, and auditing cluster activity, allowing you to track resource usage, identify potential issues, and troubleshoot problems quickly. Setting up comprehensive monitoring and logging from the start is like installing a security system—it gives you peace of mind and alerts you to any unusual activity. This proactive approach lets you address issues before they impact your users.

Securing and Controlling Access to Your Clusters

Security is paramount, and Kubernetes clusters are no exception. Controlling access to your cluster is crucial for protecting sensitive data and maintaining the integrity of your applications. Kubernetes provides authentication and authorization options to secure your cluster, much like using a lock on your apartment door. Implementing role-based access control (RBAC) lets you define granular permissions for different users and services, ensuring that only authorized individuals and processes can access specific resources.
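As a rough illustration of granular RBAC permissions, the sketch below builds a namespaced Role that can only read pods, plus a RoleBinding that grants it to one user. The namespace `team-a`, the role name `pod-reader`, and the user `jane` are hypothetical; the manifests are printed as JSON, which Kubernetes accepts alongside YAML.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_pod_role(namespace, role_name):
    """Role that allows reading pods in one namespace and nothing else."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            # "" is the core API group, where pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_role_to_user(namespace, role_name, user):
    """RoleBinding that grants the Role above to a single user."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API_GROUP},
    }


# Hypothetical namespace, role, and user names.
role = read_only_pod_role("team-a", "pod-reader")
binding = bind_role_to_user("team-a", "pod-reader", "jane")
print(json.dumps([role, binding], indent=2))
```

Keeping the Role namespaced and read-only follows the least-privilege idea: the user can inspect pods in `team-a` but cannot modify them or see other namespaces.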

Scaling and Balancing Loads on Kubernetes

One of Kubernetes' strengths is its ability to scale applications dynamically based on demand. This involves adjusting the number of pods running to handle fluctuations in traffic or workload. Kubernetes excels at managing microservices—independent software components responsible for specific tasks—and offers features like load balancing, self-healing, portability, and automated deployment and scaling, as explained in this article on Kubernetes. Load balancing distributes traffic evenly across multiple pods, ensuring no single pod becomes overwhelmed. This is similar to having multiple elevators in a building—it prevents long wait times and ensures smooth traffic flow. Scaling and load balancing are essential for maintaining application availability and responsiveness, especially during peak periods.

Kubernetes Cluster Management Best Practices

Solid Kubernetes cluster management hinges on implementing best practices that ensure reliability, security, and efficiency. Here are some key areas to focus on:

Regularly Updating and Patching Your Clusters

Regular updates and patches are your first line of defense against security vulnerabilities and performance issues. A proactive approach to patching ensures your cluster benefits from the latest features and bug fixes. This includes updating the Kubernetes control plane components (like the API server, scheduler, and controller manager) as well as the worker nodes where your applications run. While Kubernetes offers various upgrade strategies, remember that testing updates in a non-production environment first is crucial. The official Kubernetes documentation offers more information on cluster administration.
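One planning detail worth sketching: upgrade tooling such as kubeadm does not support skipping minor versions, so a cluster that has fallen behind has to step through each minor release in turn. The helper below is a hypothetical illustration of that planning step, and the version numbers are examples only.

```python
def upgrade_path(current, target):
    """List the minor versions to step through, one at a time, from current to target."""
    cur_major, cur_minor = (int(part) for part in current.split("."))
    tgt_major, tgt_minor = (int(part) for part in target.split("."))
    if cur_major != tgt_major:
        raise ValueError("this sketch only handles upgrades within one major version")
    if tgt_minor < cur_minor:
        raise ValueError("downgrades are not handled")
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


steps = upgrade_path("1.27", "1.30")
print(steps)  # ['1.28', '1.29', '1.30']
```

Each step in the list is a separate upgrade to test in a non-production environment before it reaches production.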

Implementing High Availability for Kubernetes

High availability is paramount for mission-critical applications. Minimizing downtime means designing your cluster with redundancy in mind. This typically involves running the control plane across multiple servers, ensuring that the failure of one component doesn't bring down the entire system. Distributing your workloads across multiple worker nodes and availability zones also contributes to a resilient architecture. The Kubernetes documentation provides a good overview of cluster architecture and high availability.
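The redundancy math is worth a quick sketch. etcd, the control plane's data store, needs a majority of its members (a quorum) to stay available, which is why control planes are typically run with an odd number of members. The function names below are illustrative.

```python
def quorum(members):
    """Smallest majority of an etcd cluster with the given member count."""
    return members // 2 + 1


def failure_tolerance(members):
    """How many members can fail while a quorum is still reachable."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"members={n} quorum={quorum(n)} tolerated_failures={failure_tolerance(n)}")
# members=1 quorum=1 tolerated_failures=0
# members=3 quorum=2 tolerated_failures=1
# members=4 quorum=3 tolerated_failures=1
# members=5 quorum=3 tolerated_failures=2
```

Note that going from three members to four adds cost without adding failure tolerance, so three or five members are the usual choices.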

Backing Up and Recovering Kubernetes Clusters

A robust disaster recovery plan is essential for any Kubernetes deployment. This includes regularly backing up your etcd data, which stores the cluster's state and configuration. Take etcd snapshots on a schedule and store them in a secure, offsite location, separate from the cluster they protect. Test your recovery procedures regularly to ensure they function as expected in a real-world scenario. For more information on etcd and other architectural components, refer to the Kubernetes documentation.

Managing Network Policies in Kubernetes

Network policies act as firewalls within your Kubernetes cluster, controlling traffic flow between pods and namespaces. By defining granular rules about which pods can communicate with each other, you can significantly enhance the security of your applications. This helps prevent unauthorized access and limit the impact of security breaches. Learn more about network policies in Kubernetes.
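A common pattern is to deny all ingress in a namespace by default and then allow only the traffic you expect. The sketch below builds both policies as Python dictionaries and prints them as JSON; the namespace `shop`, the `app` labels, and port 8080 are assumptions for illustration. Keep in mind that network policies are only enforced when the cluster's network plugin supports them.

```python
import json

NETWORKING_API = "networking.k8s.io/v1"


def default_deny_ingress(namespace):
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": NETWORKING_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods; no ingress rules means deny.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace, target_app, source_app, port):
    """Allow pods labelled app=source_app to reach app=target_app on one TCP port."""
    return {
        "apiVersion": NETWORKING_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace, labels, and port.
policies = [default_deny_ingress("shop"), allow_ingress("shop", "backend", "frontend", 8080)]
print(json.dumps(policies, indent=2))
```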

Efficient Kubernetes Resource Management

Efficient resource management is key to optimizing costs and performance. Right-sizing your pods and nodes ensures that you're not over-provisioning resources and wasting money. Using resource quotas and limits helps prevent runaway resource consumption by individual applications. Consider using tools like the Kubernetes Horizontal Pod Autoscaler to automatically adjust the number of pods based on demand. The Kubernetes documentation provides further information on resource management within the context of cluster architecture.
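To see how the Horizontal Pod Autoscaler decides on a replica count, here is a simplified sketch of the scaling formula from the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a small tolerance of 1. The min and max bounds and the example metric values are assumptions, and the real controller handles more cases (missing metrics, pods that are not ready, stabilization windows).

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA calculation: ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

In the first call, average CPU at 90% against a 60% target scales four pods up to six; in the second, the small deviation falls within the tolerance and nothing changes.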

Tools and Technologies for Kubernetes

Managing a Kubernetes cluster effectively means using the right mix of built-in tools and third-party platforms. Your choices here can significantly impact how efficiently you operate and your ability to scale. Let's look at some key technologies:

Native Kubernetes Management Tools

Kubernetes comes with a robust set of tools for managing cluster operations. The control plane, as described in the Kubernetes documentation, is the core of your cluster. It manages worker nodes and the pods running your applications. Kubectl, the command-line interface, lets you interact directly with your cluster for deploying applications, inspecting resources, and troubleshooting problems. For managing resources, Kubernetes features like Resource Quotas and LimitRanges help control consumption and prevent shortages. These native tools provide a solid base for essential cluster management.

Third-Party Kubernetes Platforms

As your Kubernetes setup gets more complex, managing multiple clusters and their dependencies can become a real headache. Third-party platforms offer advanced features and automation to simplify these processes. These platforms, discussed in this article on Kubernetes management tools, often address common issues like inconsistent configurations, security, and efficient monitoring across clusters. They can simplify deployments, rollouts, and scaling, giving your team more time to focus on developing applications instead of managing infrastructure. Consider exploring these platforms to improve your Kubernetes workflows.

Monitoring and Observability Tools for Kubernetes

Understanding your cluster's performance and health is crucial for smooth operation. Kubernetes offers built-in tools for logging and monitoring, including Container Resource Monitoring and Cluster-level Logging, which offer helpful insights into resource usage and application behavior. For a more complete picture, consider adding specialized monitoring and observability tools. These tools collect and analyze metrics, logs, and traces, giving you a deeper understanding of your cluster's performance and letting you proactively identify potential problems. Effective monitoring is key to ensuring your Kubernetes deployments are reliable and stable.

Common Challenges in Kubernetes

Kubernetes offers powerful tools for managing containerized applications, but it also presents unique challenges, especially as your deployments grow. Let's break down some of the most common hurdles teams face.

Operational Complexity of Kubernetes

Kubernetes has a steep learning curve. Managing even a single cluster involves juggling many moving parts, from deployments and services to pods and namespaces. The sheer operational overhead and the complexity of the Kubernetes ecosystem can be a major roadblock, especially for teams just starting with container orchestration. This complexity is often compounded by the need to integrate with existing infrastructure and tooling. Finding skilled Kubernetes administrators can also be a challenge.

Kubernetes Resource Optimization

While Kubernetes helps manage resources, optimizing their use is a separate task. Kubernetes excels at managing microservices and offers features like automated scaling and load balancing. However, efficiently allocating resources across your clusters to prevent waste and ensure application performance requires careful planning and ongoing monitoring. This includes right-sizing your nodes, managing resource quotas, and understanding the resource requirements of your applications.

Reasons for Transitioning Away from Kubernetes

While Kubernetes offers powerful orchestration capabilities, it's not a one-size-fits-all solution. Some organizations find that the operational overhead and complexity outweigh the benefits, leading them to explore alternative approaches. Understanding these reasons can help you determine if Kubernetes is the right fit for your specific needs.

One common reason for transitioning away from Kubernetes is the operational complexity. As Ben Houston points out in his article, managing Kubernetes often requires dedicated DevOps engineers, even for relatively simple tasks. This can be a significant burden for smaller teams or organizations without specialized Kubernetes expertise. The need for constant monitoring, patching, and upgrades adds to this overhead, diverting resources from core development.

Cost is another factor. Houston also notes the substantial infrastructure costs associated with Kubernetes, including redundant management nodes and potential over-provisioning to accommodate slow autoscaling. These costs can escalate, especially for applications with fluctuating workloads. The pricing for managed Kubernetes services can also be a consideration. For some, simpler alternatives offer a more streamlined experience with reduced operational overhead and a pay-per-use model that can lead to significant cost reductions, as highlighted in Houston's transition to Cloud Run.

If you're looking to simplify your Kubernetes operations or are struggling with the complexities of managing your cluster, consider booking a demo with Plural. We can help you streamline your workflows and reduce operational overhead, allowing your team to focus on building and deploying applications, not managing infrastructure.

Managing Multiple Kubernetes Clusters

Many organizations end up managing multiple Kubernetes clusters, whether for different environments (development, staging, production), geographic regions, or to isolate workloads. This introduces a whole new layer of complexity. Maintaining consistency across these clusters—in terms of configurations, resource definitions, and deployments—becomes a significant challenge. This can lead to configuration drift, increased management overhead, and potential security vulnerabilities.
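Configuration drift is easier to reason about with a small example. The sketch below compares a few settings across clusters and reports the ones that differ. The cluster names, setting keys, and values are hypothetical; in practice the inputs would come from each cluster's API or from your configuration repository.

```python
def find_drift(cluster_configs):
    """Return the settings whose values are not identical across all clusters."""
    all_keys = set()
    for config in cluster_configs.values():
        all_keys.update(config)

    drift = {}
    for key in sorted(all_keys):
        # A setting missing from a cluster shows up as None and counts as drift.
        values = {name: config.get(key) for name, config in cluster_configs.items()}
        if len(set(values.values())) > 1:
            drift[key] = values
    return drift


# Hypothetical snapshot of three environments.
clusters = {
    "dev": {"kubernetes_version": "1.30", "ingress_controller": "1.11.2", "pod_security": "baseline"},
    "staging": {"kubernetes_version": "1.30", "ingress_controller": "1.11.2", "pod_security": "restricted"},
    "prod": {"kubernetes_version": "1.29", "ingress_controller": "1.11.2", "pod_security": "restricted"},
}

drift = find_drift(clusters)
print(list(drift))  # ['kubernetes_version', 'pod_security']
```

A check like this only detects drift. Preventing it usually means deploying every cluster from the same declarative source.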

Compliance and Governance in Kubernetes

Security and compliance are paramount in any production environment. Kubernetes offers various authentication and authorization options. However, implementing and enforcing robust security policies, managing access control, and meeting regulatory requirements can be complex. This includes managing secrets, ensuring network security, and regularly auditing your clusters for vulnerabilities.

Navigating Kubernetes Networking

Kubernetes networking can be tricky. The flexible architecture, while allowing for customization and extensibility, can also introduce complexities, especially when using different deployment tools like kubeadm, kops, or Kubespray. Understanding concepts like service discovery, ingress, and network policies is crucial for ensuring reliable communication between your applications and the outside world. Troubleshooting network issues within a Kubernetes cluster can also be time-consuming.

Addressing Kubernetes Management Challenges

Kubernetes offers incredible power and flexibility, but managing it effectively can be tricky. Let's break down some common challenges and how to tackle them.

Simplifying Kubernetes Operations

One of the biggest hurdles with Kubernetes is the sheer operational overhead. Managing multiple clusters, each with its own configurations and deployments, can quickly become a tangled mess. Inconsistencies creep in, making troubleshooting and maintenance a nightmare. Thankfully, tools exist to streamline these processes. Think of it like using a project management tool instead of a stack of sticky notes—everything is centralized, organized, and easier to track. As Romaric Philogène points out, solutions are available to address common issues like inconsistent configurations, security enforcement, and efficient monitoring. Check out his article on the best tools to manage Kubernetes clusters. Finding the right tools for your team can significantly simplify daily operations.

Optimizing Kubernetes Resource Use

Kubernetes is designed to manage microservices—those independent software components responsible for specific tasks within your application. This architecture allows for incredible scalability and resilience, but it also introduces the challenge of resource optimization. You want to ensure your applications have the resources they need to perform optimally without overspending. This requires careful planning, monitoring, and potentially even automated scaling. AltexSoft's overview of Kubernetes can help you leverage features like load balancing and automated deployment to maximize resource efficiency.

Effective Multi-Cluster Management for Kubernetes

For many organizations, a single Kubernetes cluster isn't enough. Whether for geographic redundancy, separating workloads, or managing different environments, multi-cluster deployments are becoming increasingly common. However, managing multiple clusters introduces a new layer of complexity. Maintaining consistent configurations, resource definitions, and deployments across all clusters is crucial. Argo CD can simplify multi-cluster management by providing a centralized control plane for your deployments. This allows you to manage resources across different clusters from a single interface, ensuring consistency and reducing the risk of errors. For a deeper dive into multi-cluster Kubernetes, check out Tigera's practical guide.

Ensuring Kubernetes Compliance and Security

Security is paramount in any IT environment, and Kubernetes is no exception. With distributed systems and containerized workloads, ensuring compliance and security can feel daunting. However, Kubernetes provides robust security features, including various authentication and authorization options. The official Kubernetes documentation offers detailed information on securing your cluster. Taking the time to understand and implement these security best practices is essential for protecting your applications and data. Regularly reviewing and updating your security policies is also crucial to stay ahead of evolving threats.

Advanced Kubernetes Techniques

As you become more comfortable managing your Kubernetes clusters, exploring advanced techniques can further optimize your operations and unlock new possibilities. These strategies can help you adapt to changing demands, improve resource utilization, and simplify complex deployments.

Implementing Kubernetes Cluster Autoscaling

Cluster autoscaling dynamically adjusts the size of your cluster based on the demands of your applications. If your current nodes are overwhelmed, the cluster autoscaler provisions additional nodes to handle the increased load. Conversely, if resources are underutilized, the autoscaler scales down the cluster to save costs. This ensures you always have the right amount of resources available, avoiding performance bottlenecks and unnecessary expenses. For more information on scaling best practices, see Microsoft's documentation. Kubernetes also offers robust cluster autoscaling features you can explore.
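To get a feel for the scale-up decision, the sketch below estimates how many new nodes a set of pending pods would need, using a first-fit-decreasing packing on CPU requests only. This is a deliberate simplification and not how the Kubernetes Cluster Autoscaler is implemented: the real autoscaler simulates scheduling and also accounts for memory, node groups, affinity rules, and more. The pod requests and node size are made-up numbers.

```python
def nodes_needed(pending_cpu_millicores, node_allocatable_millicores):
    """Estimate new nodes required for pending pods (CPU only, first-fit decreasing)."""
    free = []  # remaining CPU on each node we would add
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_allocatable_millicores:
            raise ValueError(f"a pod requesting {request}m cannot fit on any node")
        for index, remaining in enumerate(free):
            if request <= remaining:
                free[index] = remaining - request
                break
        else:
            # No existing new node has room, so add another one.
            free.append(node_allocatable_millicores - request)
    return len(free)


# Five pending pods and nodes with 2000m of allocatable CPU (hypothetical values).
print(nodes_needed([1500, 1000, 500, 500, 250], 2000))  # 2
```

The same idea runs in reverse for scale-down: if the pods on a lightly used node would fit on the remaining nodes, that node is a candidate for removal.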

Leveraging AI-Driven Kubernetes Solutions

AI and machine learning are transforming how we manage Kubernetes clusters. AI-driven solutions analyze historical resource usage data to predict future demand and proactively scale your cluster. This predictive scaling helps prevent performance issues during peak times and optimizes resource allocation. These tools can also identify anomalies and potential problems, allowing you to address them before they impact your applications. To learn more about optimizing cluster autoscaling, read this informative blog post.

Edge Computing with Kubernetes

Kubernetes is increasingly popular for managing workloads at the edge. Edge computing involves deploying applications closer to the data source, which reduces latency and improves performance for devices and users at the edge of the network. This is particularly relevant for Internet of Things (IoT) applications and other scenarios where low latency is critical. Kubernetes provides a consistent platform for deploying and managing applications across both cloud and edge environments.

Exploring Serverless Kubernetes

Serverless Kubernetes abstracts away the underlying infrastructure, allowing developers to focus solely on their application code. With serverless, you don't need to manage servers or worry about scaling; the platform automatically handles resource allocation based on demand. This simplifies deployments and reduces operational overhead, enabling faster development cycles and greater agility. For insights into different configurations for cluster autoscaling, review this helpful blog post.

Detailed Kubernetes Use Cases

Kubernetes's flexibility makes it a powerful tool for a wide range of applications. Let's explore some of the most common and compelling Kubernetes use cases, drawing from real-world examples and expert insights.

Deploying and Scaling Microservices

Kubernetes excels at managing microservices, those independent software components responsible for specific tasks within your application. This architecture allows for incredible scalability and resilience. Kubernetes simplifies the deployment, scaling, and management of these individual services, ensuring they work together seamlessly. Think of it as a well-organized apartment building for your software, where each microservice occupies its own unit, as Spacelift describes. This modularity makes it easier to update and maintain individual services without affecting the entire application. Plural offers robust support for microservice deployments, simplifying complex deployments and management.

Running Applications at Scale

Need your app to handle a surge in users? Kubernetes can automatically add more resources as needed, ensuring smooth performance even during peak times. This dynamic scaling is a game-changer for applications that experience fluctuating demand, such as e-commerce sites during sales events or streaming services during popular releases. Kubernetes ensures your application stays responsive and available, no matter the load. Plural's platform further enhances this scalability by providing tools for automated scaling and resource management.

Creating Serverless/PaaS Platforms

Kubernetes can be used to build custom platforms for developers, making it easier for them to deploy and manage their apps without worrying about the underlying infrastructure. This self-service approach empowers developers to work more efficiently and independently. Kubernetes provides the foundation for creating these streamlined development environments, allowing organizations to focus on building and deploying applications, not managing servers. Plural can help streamline the creation and management of these platforms, simplifying the complexities of Kubernetes for your development teams.

Streamlining CI/CD Pipelines

Continuous integration and continuous delivery (CI/CD) are essential for modern software development. Kubernetes simplifies the process of building, testing, and deploying software, enabling faster release cycles and quicker feedback loops. By automating these processes, Kubernetes helps teams deliver high-quality software more efficiently. Plural integrates seamlessly with CI/CD pipelines, further automating deployments and simplifying the release process.

Powering AI/ML and Big Data Workloads

The demands of AI, machine learning, and big data workloads often require significant computing power and specialized infrastructure. Kubernetes is well-suited for handling the large datasets and complex computations involved in these fields, providing a scalable and reliable platform for data-intensive applications. Plural's platform offers the robust infrastructure and management tools needed to support these demanding workloads.

Enabling Hybrid and Multicloud Deployments

In today's complex IT landscape, many organizations leverage a mix of cloud providers and on-premises infrastructure. Kubernetes simplifies the management of applications that run across these diverse environments, providing a consistent platform for hybrid and multicloud deployments. This flexibility allows organizations to choose the best infrastructure for their needs without being locked into a single provider. Plural simplifies multi-cluster management, providing a single pane of glass for managing deployments across various environments.

Improving Application Resiliency and Fault Tolerance

Kubernetes is designed to keep your applications running, even when things go wrong. Its built-in features for self-healing and automated failover ensure that your application stays up and running even if parts of the system fail. This resilience is crucial for maintaining service availability and minimizing the impact of unexpected disruptions. Plural enhances this resilience with features for automated recovery and disaster recovery planning. For more in-depth information on Kubernetes and its capabilities, you can explore Plural's platform and its features for managing Kubernetes deployments.

Streamlining Kubernetes Operations with Plural

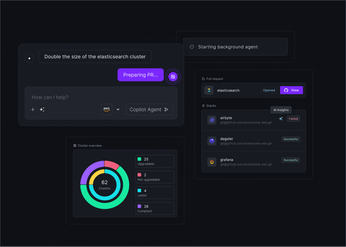

Plural is an AI-powered Kubernetes management platform designed to simplify the complexities of operating Kubernetes, saving your team valuable time and resources. We help you automate tasks, manage dependencies, enforce compliance, and drastically reduce upgrade cycles.

Automating Kubernetes Cluster Maintenance

Kubernetes offers significant advantages like scalability and automated management (AWS), but keeping your clusters updated and maintained can still be a significant undertaking. Plural automates these crucial processes. We handle patching, upgrades, and other routine maintenance, freeing your team to focus on developing and deploying applications. This automation minimizes downtime and ensures your clusters always run smoothly.

Simplifying Kubernetes Dependency Management

One of the biggest headaches in Kubernetes is managing dependencies. As highlighted in this Medium article, managing multiple Kubernetes clusters presents a significant challenge due to operational overhead and the complexity of the Kubernetes ecosystem. Plural simplifies this by providing a clear, centralized view of all your dependencies. This helps you avoid conflicts, streamline deployments, and ensure your applications run reliably.

Enforcing Kubernetes Compliance and Security with Plural

Security and compliance are paramount in any organization. Kubernetes provides tools for logging, monitoring, and auditing (Kubernetes documentation), but leveraging these tools effectively can be complex. Plural integrates seamlessly with your existing security and compliance workflows. We help you enforce policies, monitor activity, and maintain a secure environment for your Kubernetes deployments.

Reduce Kubernetes Upgrade Cycles with Plural

Upgrading Kubernetes can often be a long, drawn-out process. Because Kubernetes is designed to manage microservices (AltexSoft), rapid deployment and updates are possible, but managing these upgrades across complex environments can be a bottleneck. Plural changes the game by automating and streamlining the upgrade process. We can reduce upgrade cycles from months to hours, minimizing disruption and allowing you to quickly take advantage of the latest Kubernetes features. Ready to see how Plural can transform your Kubernetes operations? Book a demo to learn more. You can also explore our pricing or log in to your existing account.

Related Articles

- The Quick and Dirty Guide to Kubernetes Terminology

- Kubernetes: Is it Worth the Investment for Your Organization?

- Why Is Kubernetes Adoption So Hard?

- How to manage Kubernetes Add-Ons with Plural

- Cattle Not Pets: Kubernetes Fleet Management

Frequently Asked Questions

What's the difference between a Kubernetes cluster and a node?

A Kubernetes cluster is a collection of machines, called nodes, that run your containerized applications. Think of the cluster as the entire orchestra and the nodes as the individual musicians. Each node can host multiple pods, which are the smallest deployable units in Kubernetes and contain your application workloads. The cluster provides the overall infrastructure and management capabilities, while the nodes provide the computing resources.

How do I choose the right Kubernetes management tools?

Choosing the right tools depends on the size and complexity of your Kubernetes deployments, as well as your team's expertise. If you're just starting out, the native Kubernetes tools like kubectl might be sufficient. However, as your needs grow, consider third-party platforms that offer advanced features like automation, multi-cluster management, and enhanced monitoring. Evaluate your specific requirements and explore different options to find the best fit.

What are the biggest security risks with Kubernetes?

Misconfigurations, insecure network policies, and inadequate access control are common security risks in Kubernetes. Regularly auditing your clusters, implementing role-based access control (RBAC), and staying up to date with security best practices are crucial for mitigating these risks. Also, ensure your container images are scanned for vulnerabilities and that your secrets are managed securely.

How can I simplify Kubernetes upgrades?

Kubernetes upgrades can be complex and time-consuming, but automation tools can significantly streamline the process. These tools can automate the steps involved in upgrading your control plane and worker nodes, minimizing downtime and reducing the risk of errors. Testing upgrades in a non-production environment before rolling them out to production is also essential.

What's the benefit of using a platform like Plural for Kubernetes management?

Plural simplifies Kubernetes management by automating key tasks like cluster maintenance, updates, and dependency management. It also helps enforce compliance, optimize security, and drastically reduce upgrade cycles. This frees up your team to focus on building and deploying applications, rather than getting bogged down in the complexities of Kubernetes administration.
