What is a Kubernetes Pod? A Deep Dive for Engineers

Understand what a Kubernetes pod is and its role in container orchestration. Learn about pod architecture, lifecycle, and best practices for engineers.

Kubernetes has become the de facto standard for container orchestration, but understanding its core components is crucial for leveraging its full potential. At the heart of Kubernetes lies the Pod, the smallest deployable unit in the system. But what is a Kubernetes pod exactly, and why is it so important?

In this post, we'll break down the concept of a pod, exploring its architecture, lifecycle, and how it interacts with other Kubernetes components. We'll delve into practical examples and best practices, providing you with the knowledge to effectively manage your Kubernetes deployments. Whether you're new to Kubernetes or looking to deepen your understanding, this guide will provide a comprehensive overview of this fundamental building block.

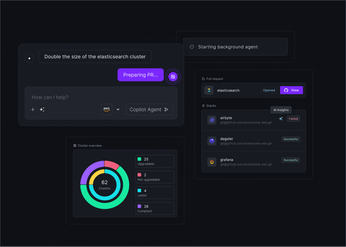

Unified Cloud Orchestration for Kubernetes

Manage Kubernetes at scale through a single, enterprise-ready platform.

Key Takeaways

- Pods are the smallest deployable units in Kubernetes: They encapsulate one or more containers and provide a shared context for resources like networking and storage. Mastering pod management is essential for running applications effectively on Kubernetes.

- Resource allocation and security are crucial: Define resource requests and limits to prevent resource contention and ensure predictable pod behavior. Implement security best practices, such as minimizing container privileges and using network policies, to protect your applications.

- Monitoring and logging provide essential insights: Use liveness, readiness, and startup probes to monitor the health of your pods and ensure they are functioning correctly. Centralized logging helps aggregate logs from all your pods, simplifying debugging and troubleshooting.

What are Kubernetes Pods?

Kubernetes pods are the smallest deployable units in a Kubernetes cluster. Think of a pod as a logical host for one or more containers designed to run together and share resources. They are the fundamental building blocks of your Kubernetes deployments, abstracting away the underlying infrastructure and providing a consistent environment for your applications.

Definition and Core Concepts

A pod encapsulates one or more closely related containers, along with shared storage and network resources and a specification for how to run the containers. The one-container-per-pod model is the most common case, but a pod can also group interdependent containers that must be scheduled and managed as a single unit. For example, an application container might run alongside a sidecar container responsible for logging or proxying requests. Containers within a pod share the same network namespace, so they can communicate via localhost, and they can mount the same volumes to exchange data. Because these containers are always placed on the same node and share a lifecycle, this pattern suits tightly coupled helper processes; independent microservices normally run in separate pods so they can be scaled and updated separately.

The Role of Pods in Kubernetes Architecture

Pods play a crucial role in the overall Kubernetes architecture. They are the atomic units of scheduling and deployment. When you deploy an application, you're actually deploying one or more pods. The Kubernetes scheduler assigns each pod to a node based on its resource requests and any placement constraints, and spreading replicas across nodes improves availability and resource utilization. If a container in a pod crashes, the kubelet restarts it according to the pod's restartPolicy; if a whole pod is lost, for example because its node fails, a controller such as a Deployment creates a replacement to restore your desired state. This self-healing capability is a key benefit of using Kubernetes. Understanding pods is also essential for grasping higher-level concepts like Deployments, ReplicaSets, and StatefulSets, all of which manage pods on your behalf.

Key Components and Features of Kubernetes Pods

Container Encapsulation

A Kubernetes pod encapsulates one or more closely related containers, acting as a single deployable unit. Many pods run just one container, but a pod can also group containers designed to work together. A common example is an application container paired with a logging sidecar, or a web server coupled with a local cache. Because these containers are scheduled, started, and stopped together, the pattern suits tightly coupled processes and keeps communication between them efficient.

Shared Resources

Containers within a pod share the pod's network namespace and can share storage through volumes that each container mounts explicitly; their root filesystems remain separate. This shared context allows them to operate as a cohesive unit. For example, an application container and a logging sidecar can exchange files through a shared volume. This model keeps configuration simple and data transfer between containers efficient.
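
As a minimal sketch of the shared-volume pattern, the Pod below runs a stand-in application container that appends timestamps to a log file and a sidecar that streams the same file. Both mount one emptyDir volume; the Pod name, busybox image tag, and paths are illustrative assumptions, not a required layout.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo        # hypothetical name
spec:
  volumes:
  - name: shared-logs
    emptyDir: {}                  # scratch volume that lives as long as the Pod
  containers:
  - name: app
    image: busybox:1.36
    # Stand-in for a real application: append a timestamp every 5 seconds.
    command: ["sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
    volumeMounts:
    - name: shared-logs
      mountPath: /var/log/app
  - name: log-sidecar
    image: busybox:1.36
    # Sidecar: stream the file written by the app container to its own stdout.
    command: ["sh", "-c", "tail -F /logs/app.log"]
    volumeMounts:
    - name: shared-logs
      mountPath: /logs            # same volume, different mount path
```

Running kubectl logs shared-volume-demo -c log-sidecar would then show the timestamps written by the app container, even though each container has its own root filesystem.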

Networking and Communication

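Each pod has its own IP address, and all containers in the pod share that pod's network namespace, so they can reach each other via localhost. Because they share one network stack, two containers in the same pod cannot listen on the same port. Communication between pods, by contrast, uses each pod's IP address over the cluster network.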
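
A minimal sketch of intra-pod networking, assuming the public nginx and busybox images: the client container reaches the web container at localhost because both share the Pod's network namespace. The Pod name and polling loop are illustrative only.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: localhost-demo            # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.25
    ports:
    - containerPort: 80
  - name: client
    image: busybox:1.36
    # No Service or Pod IP needed: nginx is listening in the same network namespace.
    command: ["sh", "-c", "while true; do wget -qO- http://localhost:80 > /dev/null && echo ok; sleep 10; done"]
```

Once nginx is up, kubectl logs localhost-demo -c client should print ok roughly every 10 seconds.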

Kubernetes Pods vs. Containers and Nodes

This section clarifies the relationship between pods, containers, and nodes within Kubernetes.

Distinguishing Pods from Containers

While sometimes used interchangeably, pods and containers are distinct concepts. A container is a runnable instance of an image, packaging application code and its dependencies. A pod is a group of one or more containers. Think of a pod as a wrapper, providing a shared environment for your containers. This simplifies container management and inter-container communication within the pod. If multiple containers need to share storage or a network namespace and must be scheduled and scaled together, they belong in the same pod; otherwise, run them in separate pods.

Relationship Between Pods and Nodes

Nodes are the worker machines in a Kubernetes cluster. The scheduler assigns pods to nodes, and the kubelet, an agent running on each node, then manages each pod's lifecycle from creation to termination, ensuring the containers within are running correctly. Each node also needs a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O, which pulls images and actually runs the containers. Docker Engine is no longer supported directly since Kubernetes 1.24 and requires the cri-dockerd adapter.

Kubernetes Pod Lifecycle

Understanding the Pod lifecycle is crucial for effectively managing your Kubernetes deployments. This section covers the phases a Pod moves through and the health checks that tell Kubernetes whether your applications are running as expected.

Pod States: From Creation to Termination

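Pods are ephemeral, disposable entities. Once scheduled onto a node, a Pod runs there until its containers finish their work, the Pod is deleted, it is evicted for lack of resources, or the node fails. A Pod is never moved to another node; if it is managed by a controller, the controller creates a new replacement Pod instead.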

While you can create individual Pods, in practice you'll rarely do so directly. Instead, you'll typically use higher-level workload resources like Deployments, Jobs, or StatefulSets. These controllers manage the lifecycle of your Pods, handling scaling and rolling updates and automatically replacing Pods that fail or are deleted. They provide a more robust and resilient approach to managing your applications in Kubernetes, especially in production environments.

A Pod transitions through several states during its lifecycle. Understanding these states provides insights into the health and status of your applications:

- Pending: The Pod has been accepted by the Kubernetes cluster, but one or more containers have not yet been set up and made ready to run. This includes time spent waiting to be scheduled onto a node (for example, when no node has enough free resources) and time spent downloading container images.

- Running: The Pod has been bound to a node and all of its containers have been created. At least one container is still running or is in the process of starting or restarting.

- Succeeded: All containers in the Pod have successfully terminated and won't be restarted. This is the typical end state for batch jobs.

- Failed: All containers in the Pod have terminated, and at least one container terminated in failure, meaning it exited with a non-zero status or was killed by the system. This could be due to application errors, crashes, or exceeding a memory limit.

- Unknown: The state of the Pod couldn't be determined, often due to communication issues with the node where the Pod is running.
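
To see these phases in practice, here is a sketch of a batch Job, assuming the public busybox image; the Job name and command are hypothetical. Its Pod exits with status 0, so it should end in the Succeeded phase.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: hello-job                 # hypothetical name
spec:
  backoffLimit: 2                 # retry with new Pods up to 2 times before marking the Job failed
  template:
    spec:
      restartPolicy: Never        # a Job's Pods must use Never or OnFailure
      containers:
      - name: hello
        image: busybox:1.36
        # exit 0 -> Pod phase Succeeded; a non-zero exit would make this Pod Failed.
        command: ["sh", "-c", "echo processing batch; exit 0"]
```

Once the Job completes, kubectl get pod <pod-name> -o jsonpath='{.status.phase}' prints the Pod's current phase.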

Health Checks and Probes

Kubernetes uses probes to monitor the health of your containers within a Pod. These probes are periodic checks that determine whether a container is running correctly and ready to serve traffic. There are three main types of probes:

- Liveness Probes: These probes check whether a container is still healthy. If a liveness probe fails repeatedly (failureThreshold times in a row), the kubelet restarts the container. This is useful for catching deadlocks or applications that are running but no longer responsive. A common example is an HTTP request to a health check endpoint.

- Readiness Probes: Readiness probes determine if a container is ready to accept traffic. If a readiness probe fails, Kubernetes removes the Pod from the associated Service's endpoints, preventing traffic from reaching the unhealthy container until the probe succeeds again. This ensures only healthy containers receive traffic.

- Startup Probes: Startup probes are used for containers with long initialization times. While a startup probe is configured, Kubernetes disables liveness and readiness checks until it succeeds, giving the container up to failureThreshold × periodSeconds to start. This avoids premature restarts due to slow startup times. If the startup probe does not succeed within that window, the kubelet kills the container, which is then handled according to the Pod's restart policy, just like a liveness failure.
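
The following sketch combines all three probe types on a single container. It assumes the public nginx image and uses its default page at / as a stand-in health endpoint; a real application would usually expose dedicated endpoints, and the thresholds here are illustrative rather than recommended values.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probes-demo               # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.25
    ports:
    - containerPort: 80
    # Allow up to 30 x 10s = 300s for startup; the other probes wait until this succeeds.
    startupProbe:
      httpGet:
        path: /
        port: 80
      failureThreshold: 30
      periodSeconds: 10
    # Restart the container after 3 consecutive failed checks.
    livenessProbe:
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
      failureThreshold: 3
    # Remove the Pod from Service endpoints while / is not responding.
    readinessProbe:
      httpGet:
        path: /
        port: 80
      periodSeconds: 5
```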

Creating and Managing Kubernetes Pods

This section explains how to create and manage Pods. We'll cover defining Pods with YAML and interacting with them using kubectl.

YAML Configuration

Pods are defined using YAML files, which describe the desired state of your application. These files specify details such as the container image, resource requirements, and other configuration parameters. Here's a simple example:

```yaml
# pod.yaml: a minimal single-container Pod
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod
spec:
  containers:
  - name: my-app-container
    image: nginx:latest
    ports:
    - containerPort: 80
```

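This YAML defines a Pod named my-app-pod with a single container, my-app-container, running the nginx:latest image. The containerPort setting declares that the container listens on port 80; the field is mainly informational, since a port the container listens on (on all interfaces) is reachable at the Pod's IP whether or not it is listed. Besides application containers (which run your application code), a Pod can include init containers (which run to completion, in order, before the application containers start) and ephemeral containers (which you add temporarily to a running Pod for debugging).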
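
As an illustration of init containers, the hypothetical Pod below uses a busybox init container to write an index.html into a shared emptyDir volume before nginx starts and serves it. Names, image tags, and paths are assumptions for the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                 # hypothetical name
spec:
  volumes:
  - name: web-content
    emptyDir: {}
  initContainers:
  # Must run to completion before the nginx container is started.
  - name: write-index
    image: busybox:1.36
    command: ["sh", "-c", "echo 'generated by init container' > /work/index.html"]
    volumeMounts:
    - name: web-content
      mountPath: /work
  containers:
  - name: web
    image: nginx:1.25
    ports:
    - containerPort: 80
    volumeMounts:
    - name: web-content
      mountPath: /usr/share/nginx/html   # nginx serves the file the init container wrote
```

To troubleshoot the running Pod, kubectl debug -it init-demo --image=busybox:1.36 --target=web attaches an ephemeral busybox container to it.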

Using kubectl

kubectl is the command-line tool for interacting with the Kubernetes API. You use it to create, manage, and inspect your Kubernetes resources, including Pods. To create a Pod from the YAML file above, you would use:

```shell
kubectl apply -f pod.yaml
```

This command instructs Kubernetes to create the Pod as described in pod.yaml. You can then manage the Pod using various kubectl commands:

- kubectl get pods: Lists all Pods in your current namespace.

- kubectl describe pod <pod-name>: Provides detailed information about a specific Pod, including its status, events, and resource requests and limits.

- kubectl logs <pod-name>: Displays the logs from a container within the Pod, useful for debugging and monitoring. For a multi-container Pod, select the container with -c <container-name>.

- kubectl exec -it <pod-name> -- sh: Opens a shell session inside a container, allowing you to execute commands directly within the Pod's environment. Use bash instead of sh if the image includes it.

- kubectl delete pod <pod-name>: Removes the Pod from the cluster.

Kubernetes Pod Networking and Storage

Understanding how pods communicate and manage data is crucial for building robust and scalable applications.

IP Addressing and Service Discovery

Every Pod in a Kubernetes cluster receives its own IP address, and Pods can reach each other by IP even across different nodes, without NAT. Containers within a Pod, however, share the same network namespace and communicate via localhost. A network plugin implementing the Container Network Interface (CNI) provides this cluster-wide Pod network.

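Because Pods are replaced over time, their IP addresses change. A Service provides a stable virtual IP address and DNS name for a group of Pods selected by labels, and cluster DNS lets clients discover it by name. Kubernetes offers several Service types, such as ClusterIP (reachable only inside the cluster), NodePort, and LoadBalancer, which differ in where the Service can be reached from, allowing controlled and predictable application exposure.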
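
A minimal Service sketch, assuming the Pods you want to expose carry the label app: my-app (the my-app-pod manifest shown earlier has no labels, so you would add them under metadata.labels). The Service name and label are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-svc                # hypothetical name
spec:
  type: ClusterIP                 # the default type: reachable only from inside the cluster
  selector:
    app: my-app                   # traffic goes to ready Pods carrying this label
  ports:
  - port: 80                      # port clients connect to on the Service
    targetPort: 80                # port the container listens on
```

Other Pods in the same namespace could then reach these Pods at http://my-app-svc, or from other namespaces at my-app-svc.<namespace>.svc.cluster.local, assuming the default cluster.local domain.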

Volume Management and Data Persistence

Pods use volumes for data storage, and a volume can be shared among containers within the Pod. Volumes matter because a container's own filesystem is ephemeral: anything written to it is lost when the container restarts. Kubernetes supports many volume types, from local disks to cloud-based storage. Ephemeral volumes such as emptyDir share the Pod's lifetime: they survive container restarts but are deleted together with the Pod. Persistent volume types exist independently of any Pod, so their data remains available when a Pod is terminated and a replacement is scheduled.

For data persistence beyond individual Pod lifecycles, Kubernetes provides Persistent Volumes (PVs) and Persistent Volume Claims (PVCs). A PV represents a piece of storage, provisioned either by an administrator or dynamically through a StorageClass, while a PVC is a request for storage made by a developer. This separation simplifies storage management, allowing developers to focus on application logic without managing the underlying storage infrastructure. Because a PV exists independently of the Pods that use it, application data outlives Pod failures and rescheduling.
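
A sketch of a PVC and a Pod that mounts it, assuming the cluster has a default StorageClass that can dynamically provision a 1 GiB ReadWriteOnce volume. The claim name, Pod name, and mount path are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-app-data               # hypothetical name
spec:
  accessModes:
  - ReadWriteOnce                 # mountable read-write by a single node
  resources:
    requests:
      storage: 1Gi
  # storageClassName omitted: the cluster's default StorageClass (if any) provisions the PV
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo                  # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36
    # Record each start; the file accumulates across Pod replacements.
    command: ["sh", "-c", "date >> /data/started.log; sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: my-app-data      # binds the Pod to the claim above
```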

Scaling and Replication

Scaling and replication are fundamental to running resilient and efficient applications in Kubernetes. This section covers the core components that enable these capabilities.

Horizontal Pod Autoscaling

The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods in a Deployment, ReplicaSet, or StatefulSet based on observed metrics like CPU utilization, memory usage, or custom metrics. This dynamic scaling lets your application adapt to changing workloads: when demand increases, the HPA adds pods; when demand decreases, it removes them, saving resources and costs. Rather than separate scale-up and scale-down thresholds, you set a single target, for example an average CPU utilization of 70% of the pods' CPU requests. The controller then computes desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), so 4 pods averaging 105% CPU utilization would be scaled to 6.
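
A 70% average CPU target might be expressed with the autoscaling/v2 API roughly as follows. This sketch assumes a Deployment named my-app (hypothetical) whose containers set CPU requests, and a metrics source such as metrics-server in the cluster; the replica bounds are illustrative.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa                # hypothetical name
spec:
  scaleTargetRef:                 # the workload whose replica count the HPA controls
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70    # percent of each Pod's CPU request
```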

ReplicaSets and Deployments

ReplicaSets and Deployments work in conjunction to ensure your application remains available and up-to-date. A ReplicaSet ensures that a specified number of pod replicas are running at any given time. If a pod fails, the ReplicaSet controller creates a new one, maintaining the desired replica count.

Deployments build upon ReplicaSets by providing declarative updates. You define the desired state of your pods in a Deployment configuration, and the Deployment controller manages the transition from the current state to the desired state. This allows for rolling updates, rollbacks, and scaling, simplifying deployments and minimizing downtime. You can easily scale your application up or down by changing the number of replicas in the Deployment configuration. This flexibility is vital for adapting to changing workloads and ensuring efficient resource management. For instance, during peak traffic, you can increase the replica count to handle the increased load and then decrease it during off-peak hours to conserve resources.
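
A sketch of a Deployment that keeps three replicas of an nginx Pod running and rolls out updates gradually. The name my-app, the labels, and the rolling-update settings are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                    # hypothetical name
spec:
  replicas: 3                     # desired Pod count, maintained via a ReplicaSet
  selector:
    matchLabels:
      app: my-app
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1           # at most one Pod below the desired count during an update
      maxSurge: 1                 # at most one extra Pod above the desired count during an update
  template:                       # every replica is created from this Pod template
    metadata:
      labels:
        app: my-app               # must match the selector above
    spec:
      containers:
      - name: my-app-container
        image: nginx:1.25
        ports:
        - containerPort: 80
```

With this applied, kubectl scale deployment my-app --replicas=5 changes the replica count, kubectl set image deployment/my-app my-app-container=nginx:1.26 starts a rolling update, and kubectl rollout undo deployment/my-app rolls back to the previous revision. If an HPA manages the Deployment, it will override manual replica changes.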

Best Practices for Kubernetes Pod Management

Efficient pod management is crucial for stable and performant Kubernetes deployments. This section covers best practices for resource allocation, security, and monitoring.

Resource Allocation and Limits

Accurately defining resource requests and limits for the containers in your pods is the first step toward a well-oiled Kubernetes cluster. Without these settings, a pod could consume excessive resources, starving other pods on the same node and potentially destabilizing your application. Requests are the CPU and memory the scheduler reserves when placing a pod on a node; limits are the ceiling enforced at runtime. A container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is OOM-killed. Setting both prevents noisy-neighbor issues and makes resource usage predictable.
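
A sketch of per-container requests and limits; the numbers are illustrative placeholders, and real values should come from observing your application's actual usage. The Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo            # hypothetical name
spec:
  containers:
  - name: app
    image: nginx:1.25
    resources:
      requests:
        cpu: 250m                 # a quarter of a CPU core, reserved at scheduling time
        memory: 128Mi
      limits:
        cpu: 500m                 # usage above this is throttled
        memory: 256Mi             # exceeding this gets the container OOM-killed
```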

Security Considerations

Running pods securely requires a multi-layered approach. One fundamental practice is minimizing the privileges of the containers within your pods: grant only the permissions the application needs to function. Regularly update container images to patch vulnerabilities, and use securityContext settings such as runAsNonRoot, readOnlyRootFilesystem, and dropped Linux capabilities to restrict what containers can do. Consider network policies to restrict traffic between pods and limit the blast radius of potential attacks; note that NetworkPolicy objects are only enforced if your cluster's network plugin supports them.
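
A sketch of both ideas, assuming a hypothetical image registry.example.com/my-app:1.0 that runs as a non-root user, listens on port 8080, and does not write to its root filesystem. The NetworkPolicy admits ingress to Pods labeled app: my-app only from Pods labeled role: frontend in the same namespace; all names and labels are illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-app-secure             # hypothetical name
  labels:
    app: my-app
spec:
  securityContext:
    runAsNonRoot: true            # the kubelet refuses to start a container that would run as UID 0
    runAsUser: 10001
  containers:
  - name: app
    image: registry.example.com/my-app:1.0   # hypothetical image listening on 8080
    ports:
    - containerPort: 8080
    securityContext:
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop: ["ALL"]             # drop every Linux capability
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-app-allow-frontend     # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: my-app                 # Pods this policy applies to; other inbound traffic is denied
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend          # only these Pods (same namespace) may connect
    ports:
    - protocol: TCP
      port: 8080
```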

Monitoring and Logging

Comprehensive monitoring and logging are essential for maintaining the health and stability of your pods. Implement liveness, readiness, and startup probes to ensure your applications are functioning correctly. These probes provide Kubernetes with the signals it needs to manage pod lifecycles effectively.

Centralized logging aggregates logs from all your pods in one place, making debugging and troubleshooting much easier. This matters because the container logs that kubectl logs reads are stored on the node and are removed when the Pod is deleted. Understanding pods is fundamental to understanding Kubernetes itself, and effective monitoring and logging build on this foundation, providing insights into the health and performance of your deployments.

Frequently Asked Questions

What's the difference between a Pod and a container?

A container is a runnable instance of an image, packaging application code and dependencies. A pod, on the other hand, is a Kubernetes abstraction that represents a group of one or more containers deployed together on the same node. Pods provide a shared environment for containers, facilitating inter-container communication and resource sharing.

How do Pods communicate with each other?

Each pod in a Kubernetes cluster has its own IP address, so pods can reach each other regardless of which node they run on. Because pod IPs change when pods are replaced, clients usually connect through a Service, which provides a stable IP address and DNS name. Containers within the same pod share a network namespace and can communicate via localhost.

How do I make my Pod's data persistent?

Anything a container writes to its own filesystem is lost when the container restarts. To keep data across container restarts, mount a volume such as emptyDir, which the containers in a pod can also share but which is deleted along with the pod. For data that needs to survive pod deletion and rescheduling, use Persistent Volumes (PVs) and Persistent Volume Claims (PVCs).

How can I scale my application in Kubernetes?

Use Deployments to manage the desired number of pod replicas. The Deployment controller ensures the specified number of pods are running and automatically replaces any failed pods. For automatic scaling based on resource utilization, use the Horizontal Pod Autoscaler (HPA). The HPA adjusts the number of pods based on metrics like CPU and memory usage.

How do I troubleshoot issues with my Pods?

kubectl provides several commands for inspecting and troubleshooting pods. kubectl describe pod <pod-name> shows detailed information about a pod's status, events, and resource requests and limits. kubectl logs <pod-name> displays container logs, helpful for debugging application issues; add --previous to see the logs of a crashed container's previous instance. For interactive troubleshooting, kubectl exec -it <pod-name> -- sh opens a shell session inside a container (use bash if the image includes it). Liveness, readiness, and startup probes provide insights into application health and can trigger automatic restarts or removal from service endpoints.
