Kubernetes Taints & Tolerations: Best Practices Guide

Learn the best practices for using taints and tolerations in Kubernetes to optimize pod scheduling and simplify cluster management.

Managing Kubernetes pod scheduling effectively is crucial for production clusters. Taints and tolerations give you fine-grained control, letting you reserve nodes for specific workloads or protect them from incompatible applications. This guide covers everything you need to know about Kubernetes taints and tolerations, from the basics to advanced techniques. We'll explore taint effects (NoSchedule, PreferNoSchedule, NoExecute), how tolerations work, and practical management with kubectl. Plus, we'll dive into best practices for using taints and tolerations in Kubernetes to maximize resource utilization and application reliability, complete with real-world examples and troubleshooting tips. Finally, we'll see how Plural simplifies taint management at scale.

Key Takeaways

- Use taints to reserve nodes: Taints let you dedicate nodes to specific workloads, such as those requiring GPUs or high memory. Use the appropriate taint effect (`NoSchedule`, `PreferNoSchedule`, `NoExecute`) to control how strictly pods are repelled from a node.

- Grant pod access with tolerations: Tolerations allow pods to run on tainted nodes. Ensure the toleration's key and effect match the taint, and that its value matches too unless it uses the `Exists` operator. Remember, a toleration doesn't guarantee placement; it only removes the taint's restriction.

- Manage taints strategically: Use `kubectl` to apply, remove, and view taints. For larger deployments, consider a platform like Plural to streamline management. Document your taint strategy and test changes in a non-production environment.

Introduction to Kubernetes Taints and Tolerations

Kubernetes taints and tolerations give you fine-grained control over pod scheduling. Taints make a node less desirable for some pods, while tolerations are exceptions granted to specific pods, allowing them to run on tainted nodes. This is crucial for managing diverse workloads and ensuring pods land on nodes with the right resources.

Taints

A taint marks a node as unsuitable for pods without a matching toleration. It's a way to say, "This node has special characteristics, and not all pods are welcome." Taints are not labels. They live in the node's `spec.taints` field and are written in the format `key=value:effect`, where the value is optional (`key:effect`). The key and value are strings you define. The effect determines how the taint impacts pod scheduling:

- `NoSchedule`: A hard scheduling restriction. Pods without a matching toleration will not be scheduled on a node with this taint, although pods already running there are left alone.

- `PreferNoSchedule`: A less strict effect. The scheduler tries to avoid placing pods on the tainted node, but it's not a hard rule. If no other suitable nodes are available, a pod without a matching toleration might still end up there.

- `NoExecute`: Similar to `NoSchedule`, but it also impacts already-running pods. If applied, existing pods without a matching toleration will be evicted.
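To make the `key=value:effect` format concrete, here is a minimal Python sketch that splits a taint string, like the ones passed to `kubectl taint`, into its parts. The `Taint` class and `parse_taint` function are hypothetical helpers for illustration. They are not part of kubectl or any Kubernetes client library, and the validation is deliberately simplified.

```python
from dataclasses import dataclass

# The three effects Kubernetes defines for taints.
VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


@dataclass(frozen=True)
class Taint:
    """Hypothetical representation of one node taint."""
    key: str
    value: str  # empty string when the taint has no value
    effect: str


def parse_taint(spec: str) -> Taint:
    """Parse 'key=value:effect' or 'key:effect' (simplified sketch, not kubectl's parser)."""
    # The effect follows the last colon; keys may contain '/' (e.g. example.com/gpu).
    key_value, sep, effect = spec.rpartition(":")
    if not sep or effect not in VALID_EFFECTS:
        raise ValueError(f"invalid or missing effect in {spec!r}")
    # Everything before the first '=' is the key; the rest (possibly empty) is the value.
    key, _, value = key_value.partition("=")
    if not key:
        raise ValueError(f"missing key in {spec!r}")
    return Taint(key=key, value=value, effect=effect)


print(parse_taint("dedicated=gpu:NoSchedule"))
print(parse_taint("example.com/maintenance:NoExecute"))
```

Real validation is stricter than this sketch: Kubernetes also requires the key and value to be valid label-style names and values.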

Tolerations

Tolerations are applied to pods, allowing them to run on nodes with matching taints. They're a way to say, "This pod can tolerate the conditions on this tainted node." A toleration matches a taint when the keys match, the effects match, and the values match according to the toleration's operator:

- With the default `Equal` operator, the toleration's value must equal the taint's value. An empty value only matches a taint that has no value.
- With the `Exists` operator, the toleration omits the value and matches the taint's key and effect whatever the taint's value is.
- A toleration that omits the effect matches every effect for its key.

For example, if a node has the taint dedicated=gpu:NoSchedule, a pod can schedule on that node only if it has one of these tolerations:

- key dedicated, value gpu, and effect NoSchedule, with operator Equal.
- key dedicated and effect NoSchedule, with operator Exists.

This dedicates the GPU nodes to pods designed to use them.

Managing taints and tolerations can become complex as your cluster grows. For larger deployments, consider a platform like Plural to streamline management and ensure consistent application of your strategies.

Understanding Kubernetes Taints

What are Taints and Why Use Them?

In Kubernetes, taints act like keep-out signs posted on a node, signaling to the scheduler that the node shouldn't accept certain pods. This mechanism reserves nodes for specific workloads or protects them from pods not designed for their characteristics. This control is crucial for managing diverse workloads and ensuring efficient cluster resource use. Rather than leaving placement entirely to the scheduler's general-purpose filtering and scoring, taints give you granular control over where pods run. The Kubernetes documentation offers a comprehensive overview of taints and tolerations.

How Taints Influence Pod Scheduling

Taints repel pods that lack a corresponding toleration, which acts as a pod's permission slip to run on a tainted node. A taint comprises a key, an optional value, and an effect. The key-value pair identifies the taint, while the effect determines how it repels pods. The three possible effects—NoSchedule, PreferNoSchedule, and NoExecute—each have different restrictiveness. You manage taints on nodes using the kubectl taint command. Understanding these effects is crucial for effective taint usage. This blog post explains the interaction between taints and tolerations, which essentially function as a filter, ensuring only compatible pods are scheduled on designated nodes.

Exploring Taint Effects

Taints influence pod scheduling by specifying which nodes should not run particular pods. Think of them as "keep out" signs for certain workloads. Kubernetes offers three taint effects, each controlling scheduling decisions with varying strictness.

The NoSchedule Effect

The NoSchedule effect is a strong directive. It prevents any pod without a matching toleration from being scheduled on the tainted node, while pods already running on the node remain unaffected. This is useful for dedicating a node to specific workloads. For example, you might taint a node with gpu=true:NoSchedule to reserve it for pods requiring GPUs. Only pods with a corresponding toleration for the gpu taint will be allowed to run on that node. This ensures that other pods don't compete with resource-intensive GPU workloads for that node's capacity.

Understanding PreferNoSchedule

PreferNoSchedule acts as a soft preference. The scheduler tries to avoid placing new pods that don't tolerate the taint on the node, scoring it lower than nodes without such taints. However, if no suitable untainted nodes are available, the scheduler will still schedule those pods onto the tainted node. This is helpful for nodes that should primarily run specific workloads but can accommodate others if necessary.

Imagine a node primarily used for batch processing jobs. You could taint it with workload=batch:PreferNoSchedule and give your batch jobs a matching toleration. Other pods will tend to stay off the node while capacity exists elsewhere, which leaves room for batch jobs. The taint does not, however, attract batch jobs to the node; add node affinity if you want them to prefer it. This balances dedicated resources with overall cluster utilization.
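The following Python sketch models how a PreferNoSchedule taint influences node ranking. It assumes a hypothetical taint workload=batch:PreferNoSchedule. The scoring loosely follows the idea behind the scheduler's taint-toleration scoring: count the PreferNoSchedule taints a pod does not tolerate, and treat fewer as better. The `Taint`, `Toleration`, and `rank_nodes` names are illustrative, and the real scheduler combines this score with many others.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" (default) or "Exists"
    value: str = ""
    effect: str = ""  # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified toleration-to-taint match."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def prefer_penalty(taints: List[Taint], tolerations: List[Toleration]) -> int:
    """Count PreferNoSchedule taints the pod does not tolerate (lower is better)."""
    return sum(
        1
        for t in taints
        if t.effect == "PreferNoSchedule"
        and not any(tolerates(tol, t) for tol in tolerations)
    )


def rank_nodes(nodes: Dict[str, List[Taint]], tolerations: List[Toleration]) -> List[str]:
    """Order node names from most to least preferred; ties keep their input order."""
    return sorted(nodes, key=lambda name: prefer_penalty(nodes[name], tolerations))


nodes = {
    "batch-node": [Taint("workload", "batch", "PreferNoSchedule")],
    "general-node": [],
}
batch_toleration = [Toleration("workload", "Equal", "batch", "PreferNoSchedule")]

print(rank_nodes(nodes, []))                # a pod with no tolerations
print(rank_nodes(nodes, batch_toleration))  # a batch pod with the toleration
```

A pod without tolerations ranks general-node first but can still fall back to batch-node. The batch pod sees both nodes as equally good, which shows why the taint alone does not pull batch jobs onto the node.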

The NoExecute Effect

NoExecute is the most restrictive taint effect. It not only prevents new pods without a matching toleration from being scheduled but also evicts running pods that don't tolerate the taint. Pods that do tolerate it can stay, either indefinitely or for the number of seconds set in their tolerationSeconds field. This makes NoExecute useful for node maintenance and hardware failures.

Tainting a node with node-maintenance=true:NoExecute moves pods off a node before you take it offline. Pods managed by controllers such as Deployments are recreated on other nodes. Note that taint-based eviction does not consult PodDisruptionBudgets; kubectl drain is the PDB-aware way to empty a node. For more details on how taints and tolerations interact with pod scheduling, refer to the Kubernetes documentation.

Taints and Tolerations in Kubernetes

This section explains how to use tolerations to control pod scheduling on tainted nodes. Think of taints as attributes that repel pods, and tolerations as exceptions granted to specific pods, allowing them to override those repulsions.

How Tolerations Interact with Taints

Tolerations are applied to pods, giving them permission to run on nodes carrying specific taints. A pod without a matching toleration won't be scheduled on a node with a NoSchedule or NoExecute taint, and the scheduler steers it away from nodes with a PreferNoSchedule taint. Having a toleration doesn't guarantee a pod will run on a tainted node. The scheduler still considers other factors, such as resource requests and node affinity, and may place the pod on an untainted node instead. Tolerations simply lift the restriction imposed by the taint. You configure them in the `tolerations` field of the pod specification.

Matching Taints to Tolerations

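For a toleration to work, it must match the taint on the node. Matching involves three criteria: key, effect, and, depending on the operator, value.

- Key: the taint and toleration keys must be identical. A toleration with an empty key and the Exists operator matches every taint.
- Effect: the effects must be identical. A toleration that omits the effect matches every effect for its key.
- Value: if the taint specifies a value, the toleration must either include the same value (the Equal operator, which is the default) or use the Exists operator, which applies regardless of the taint's value.

A node can carry several taints, and a pod must tolerate every NoSchedule and NoExecute taint on it. A single untolerated taint with one of these effects is enough to keep the pod off the node. An untolerated PreferNoSchedule taint only makes the node less preferred.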
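The multi-taint rule can be sketched as a feasibility check. This is a simplified Python model, not scheduler source code. A node is feasible only if every NoSchedule and NoExecute taint is tolerated. PreferNoSchedule taints are skipped because they only affect scoring. The helper names and the gpu and env taints are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified toleration-to-taint match."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


HARD_EFFECTS = {"NoSchedule", "NoExecute"}


def first_blocking_taint(taints: List[Taint], tolerations: List[Toleration]) -> Optional[Taint]:
    """Return the first hard taint no toleration covers, or None if the node is feasible."""
    for taint in taints:
        if taint.effect not in HARD_EFFECTS:
            continue  # PreferNoSchedule only lowers the node's score
        if not any(tolerates(tol, taint) for tol in tolerations):
            return taint
    return None


gpu_node = [Taint("gpu", "true", "NoSchedule"), Taint("env", "production", "PreferNoSchedule")]
ml_pod = [Toleration("gpu", "Equal", "true", "NoSchedule")]

print(first_blocking_taint(gpu_node, ml_pod))  # None: the env taint is only a preference
print(first_blocking_taint(gpu_node, []))      # the gpu taint blocks an untolerating pod
```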

Managing Taints with kubectl

This section covers practical kubectl commands for managing taints. We'll walk through applying, removing, and viewing taints on your Kubernetes nodes.

Adding Taints to Nodes

Applying a taint to a node dictates which pods can be scheduled there. Use the kubectl taint command with the following syntax:

kubectl taint nodes <node-name> <key>=<value>:<effect>

Let's break down an example:

kubectl taint nodes worker-node-1 env=production:NoSchedule

This command adds a taint to worker-node-1. The key is env, the value is production, and the effect is NoSchedule. This prevents any pod without a matching toleration from being scheduled onto this node. You can find more details on taint effects in the Kubernetes documentation.

Removing Taints

Removing a taint reverses its effect, allowing pods previously blocked to be scheduled. Use the same kubectl taint command, appending a - after the effect:

kubectl taint nodes <node-name> <key>=<value>:<effect>-

To remove the taint we just added:

kubectl taint nodes worker-node-1 env=production:NoSchedule-

This removes the env=production:NoSchedule taint from worker-node-1, opening it back up for general scheduling.
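The add and remove commands can be modeled as edits to a node's taint list. The sketch below is a simplified Python model of the behavior described in kubectl's help text, not kubectl's implementation. It works as follows:

- A node's taints are identified by key and effect.
- A trailing - removes matching taints.
- A key with no effect, for example env-, removes every taint with that key.
- Adding a taint whose key and effect already exist replaces the old one. kubectl does this only with --overwrite; without the flag it reports an error instead.

The apply_taint_arg name is hypothetical.

```python
from typing import List, Tuple

# (key, value, effect); value is "" when the taint has none.
Taint = Tuple[str, str, str]


def apply_taint_arg(taints: List[Taint], arg: str) -> List[Taint]:
    """Apply one kubectl-style taint argument to a node's taint list (simplified model)."""
    if arg.endswith("-"):
        # Removal: 'key=value:effect-', 'key:effect-', or 'key-'.
        key_value, _, effect = arg[:-1].partition(":")
        key = key_value.partition("=")[0]
        # Matching uses the key and, if given, the effect; the value is not compared.
        return [t for t in taints if not (t[0] == key and (not effect or t[2] == effect))]
    # Addition: 'key=value:effect' or 'key:effect'.
    key_value, _, effect = arg.rpartition(":")
    key, _, value = key_value.partition("=")
    # At most one taint per key/effect pair: replace it (as kubectl does with --overwrite).
    kept = [t for t in taints if not (t[0] == key and t[2] == effect)]
    return kept + [(key, value, effect)]


taints: List[Taint] = []
taints = apply_taint_arg(taints, "env=production:NoSchedule")
taints = apply_taint_arg(taints, "env=production:NoExecute")
print(taints)
taints = apply_taint_arg(taints, "env=production:NoSchedule-")
print(taints)
taints = apply_taint_arg(taints, "env-")
print(taints)
```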

Viewing Taints on Nodes

To see the taints applied to a node, use the kubectl describe command:

kubectl describe node <node-name>

For our example node:

kubectl describe node worker-node-1

This command outputs detailed information about the node, including a section specifically listing its taints. This is crucial for understanding the scheduling constraints on your nodes and for troubleshooting scheduling issues. For additional ways to inspect and manage taints, see this guide on managing Kubernetes taints.
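If you need the taints of every node at once, for example in a script, the JSON output of kubectl get nodes -o json lists them under each node's spec.taints field. The minimal Python sketch below assumes you have already captured that output as a string. The taints_by_node helper and the sample data are illustrative.

```python
import json
from typing import Dict, List


def format_taint(taint: dict) -> str:
    """Render a taint object as key=value:effect (or key:effect when it has no value)."""
    if taint.get("value"):
        return f"{taint['key']}={taint['value']}:{taint['effect']}"
    return f"{taint['key']}:{taint['effect']}"


def taints_by_node(nodes_json: str) -> Dict[str, List[str]]:
    """Map each node name to its taints, given `kubectl get nodes -o json` output."""
    data = json.loads(nodes_json)
    result: Dict[str, List[str]] = {}
    for item in data.get("items", []):
        spec = item.get("spec", {})
        # Nodes without taints simply omit the spec.taints field.
        result[item["metadata"]["name"]] = [format_taint(t) for t in spec.get("taints", [])]
    return result


sample = """
{"items": [
  {"metadata": {"name": "worker-node-1"},
   "spec": {"taints": [{"key": "env", "value": "production", "effect": "NoSchedule"}]}},
  {"metadata": {"name": "worker-node-2"}, "spec": {}}
]}
"""
print(taints_by_node(sample))
```

In a script, you could obtain the input with subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True, check=True).stdout.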

Practical Taint Use Cases

This section explores practical scenarios where using taints can significantly improve your Kubernetes cluster management.

Dedicating Nodes for Specific Pods

Taints allow you to reserve nodes for particular workloads, ensuring resource availability for critical applications. Imagine you have a set of nodes with high memory capacity intended for memory-intensive applications. By applying a taint like key=value:NoSchedule to these nodes, with a key and value of your choosing, you prevent pods without a matching toleration from being scheduled there. This keeps the nodes' memory available for the workloads you intend. Because a toleration only permits placement, pair it with a node selector or node affinity so the memory-intensive pods are actually scheduled onto the reserved nodes. This approach is similar to setting up dedicated worker nodes in a CI/CD pipeline, where specific build jobs require specific hardware. For more information on using taints and tolerations, see Komodor's guide.

Managing Nodes with Specialized Hardware

Many applications require specialized hardware like GPUs or FPGAs. Taints provide a mechanism to ensure that pods requiring this hardware are scheduled on the correct nodes. For example, if you have nodes equipped with GPUs for machine learning workloads, you can apply a taint like gpu=true:NoSchedule. Then, configure your machine learning pods with a corresponding toleration. This ensures that only pods designed to utilize GPUs are scheduled on those nodes, optimizing resource utilization and preventing scheduling conflicts. Kubecost offers a tutorial on using taints and tolerations with specialized hardware.

Handling Node Issues with Taints

Taints are valuable for managing node maintenance and handling failures gracefully. For planned maintenance, apply a taint with a key of your own, such as node-maintenance=true:NoSchedule. Alternatively, run kubectl cordon, which marks the node unschedulable; Kubernetes reflects this with the built-in node.kubernetes.io/unschedulable:NoSchedule taint. Either way, new pods are no longer scheduled on the node while existing pods continue running.

When you are ready, switch to node-maintenance=true:NoExecute, which evicts the remaining pods that lack a matching toleration. This controlled approach minimizes disruption to your applications during maintenance.

Kubernetes uses the same mechanism for failures. The node controller automatically applies built-in taints such as node.kubernetes.io/unreachable and node.kubernetes.io/not-ready to unhealthy nodes, which stops the scheduler from placing new pods on them. Because Kubernetes manages these built-in keys, avoid setting them by hand. Learn more about how taints and tolerations work together in this article.

Deep Dive into Tolerations

Tolerations act like exceptions to taints, granting specific pods permission to run on tainted nodes. They provide a nuanced approach to pod scheduling, working in conjunction with taints to fine-tune where your workloads land. Let's explore the intricacies of tolerations, focusing on operators and duration.

Understanding Toleration Operators

Toleration operators define how a toleration matches with a taint, offering flexibility in your scheduling strategies. The two primary operators are Equal and Exists, each serving a distinct purpose.

Equal Operator

The Equal operator, which is the default when no operator is set, demands an exact match between the toleration's value and the taint's value. The key, value, and effect must all match for the toleration to apply. For instance, a node tainted with env=production:NoSchedule accepts a pod with the toleration key: "env", operator: "Equal", value: "production", effect: "NoSchedule". An Equal toleration with value: "staging" would not match. This precise matching is crucial for scenarios requiring strict control over pod placement, ensuring that only pods with the correct key-value pair can land on the designated node. This level of granularity is especially useful in environments with varying security or resource requirements.

Exists Operator

The Exists operator offers a broader approach. Here, the toleration applies regardless of the taint's value; only the key, and the effect if one is specified, need to match. This simplifies configuration when the value isn't a determining factor for scheduling. Consider a node tainted with dedicated=team-a:NoSchedule. A pod with the toleration key: "dedicated", operator: "Exists", effect: "NoSchedule" is permitted, and it would still be permitted if the taint carried any other value. This operator streamlines toleration management when you want to allow pods to bypass a taint based solely on the key, without the added complexity of value matching. The Kubernetes documentation provides further details on how these operators function within the broader context of taints and tolerations.
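The matching rules for both operators fit in a few lines of Python. The sketch below follows the rules described in the Kubernetes documentation:

- An empty effect matches all effects.
- An empty key with Exists matches every taint.
- Equal compares values.

The class and function names are hypothetical. The sketch also skips API validation, such as the requirement that an empty key be paired with Exists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key + Exists matches every taint
    operator: str = "Equal"  # Equal is the default operator
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if the toleration matches the taint (simplified model)."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True  # the taint's value is ignored
    if tol.operator == "Equal":
        return tol.value == taint.value
    return False  # operators this sketch does not model


taint = Taint("env", "production", "NoSchedule")
checks = {
    "Equal, same value": Toleration("env", "Equal", "production", "NoSchedule"),
    "Equal, other value": Toleration("env", "Equal", "staging", "NoSchedule"),
    "Equal, no value": Toleration("env", "Equal", effect="NoSchedule"),
    "Exists, any value": Toleration("env", "Exists", effect="NoSchedule"),
    "Exists, different effect": Toleration("env", "Exists", effect="NoExecute"),
    "Exists, no effect": Toleration("env", "Exists"),
    "Exists, no key": Toleration(operator="Exists"),
}
for label, tol in checks.items():
    print(f"{label}: {tolerates(tol, taint)}")
```

The last three checks show two things. Effects must match exactly, so a NoExecute toleration does not cover a NoSchedule taint. Omitting the effect, or the key together with Exists, widens the match.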

Specifying Toleration Durations with tolerationSeconds

The tolerationSeconds field adds a time dimension to tolerations and applies only to the NoExecute taint effect. It determines how long a pod that tolerates a NoExecute taint can stay bound to the node after the taint is added, before it is evicted. This is invaluable for managing temporary conditions, such as planned node maintenance or transient network issues. Setting tolerationSeconds: 3600, for example, allows a pod to keep running on the node for one hour after the taint appears. This grace period gives you time to gracefully shut down or migrate the pod, minimizing disruption to your services.

Without tolerationSeconds, a pod tolerating a NoExecute taint remains on the node indefinitely, even if the underlying issue persists. Setting the field therefore acts as a safeguard that ensures pods eventually vacate nodes experiencing prolonged problems. Kubernetes uses this pattern by default: unless a pod specifies its own, it receives tolerations for node.kubernetes.io/not-ready and node.kubernetes.io/unreachable with tolerationSeconds: 300. For practical guidance and best practices on using tolerationSeconds effectively, refer to this helpful resource on Kubernetes taints and tolerations.
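The NoExecute eviction rules can be sketched as a function that returns how long a pod may stay after the taints appear. This simplified Python model follows the behavior described in the Kubernetes documentation:

- An untolerated NoExecute taint means immediate eviction.
- A toleration without tolerationSeconds means the pod stays.
- When several matching tolerations set tolerationSeconds, the sketch assumes the shortest one applies. This is our reading of the eviction controller, not a documented guarantee.

The names and the node-maintenance key are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""
    toleration_seconds: Optional[int] = None  # only meaningful for NoExecute


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified toleration-to-taint match."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def eviction_delay(taints: List[Taint], tolerations: List[Toleration]) -> Optional[int]:
    """Seconds a running pod may stay: 0 = evict now, None = never evicted (simplified)."""
    used: List[Toleration] = []
    for taint in (t for t in taints if t.effect == "NoExecute"):
        match = next((tol for tol in tolerations if tolerates(tol, taint)), None)
        if match is None:
            return 0  # an untolerated NoExecute taint evicts the pod immediately
        used.append(match)
    limits = [tol.toleration_seconds for tol in used if tol.toleration_seconds is not None]
    if not limits:
        return None  # every NoExecute taint is tolerated without a time limit
    return max(0, min(limits))  # assume the shortest limit wins; negatives mean "now"


maintenance = [Taint("node-maintenance", "true", "NoExecute")]
timed = Toleration("node-maintenance", "Exists", effect="NoExecute", toleration_seconds=3600)
forever = Toleration("node-maintenance", "Exists", effect="NoExecute")

print(eviction_delay(maintenance, []))         # 0
print(eviction_delay(maintenance, [timed]))    # 3600
print(eviction_delay(maintenance, [forever]))  # None
```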

Best Practices for Kubernetes Taints and Tolerations

While taints offer granular control over pod scheduling, overusing them can lead to a complex and difficult-to-manage cluster configuration. Following these best practices will help you strike the right balance.

Using Taints Effectively

Start with node affinity for general workload placement and only use taints when you need to prevent pods from running on specific nodes. Taints introduce complexity, so apply them judiciously. Focus on clear use cases like dedicated hardware or maintenance activities. Overuse can make debugging and maintenance more challenging. Prioritize simpler solutions like node selectors or affinity when possible. Proper taint management is crucial for optimizing resource utilization and application reliability, as highlighted in this tutorial on configuring taints and tolerations.

Combining Taints with Node Affinity

For more nuanced scheduling, combine taints with node affinity. Use taints to keep pods off nodes they don't belong on. Then layer node affinity on top to draw pods toward nodes with specific labels; required affinity rules act as hard constraints and preferred rules as soft ones. This approach provides a flexible and robust scheduling mechanism.

For example, you might taint and label a set of nodes dedicated to a specific service. Give that service's pods a matching toleration, and add a node affinity rule so those pods are scheduled only onto the labeled nodes. The taint keeps other workloads out, and the affinity keeps the service's pods in. This combined approach is discussed further in this blog post on mastering taints and tolerations.
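The division of labor between taints and node affinity can be sketched in Python. In this simplified model, required node affinity is reduced to exact label equality, and the names are hypothetical. A node is a candidate only if the pod tolerates its hard taints and the node has the required labels. The dedicated=payments taint and label stand in for "a specific service".

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""


@dataclass
class Node:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    taints: List[Taint] = field(default_factory=list)


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified toleration-to-taint match."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def passes_taints(node: Node, tolerations: List[Toleration]) -> bool:
    """Every NoSchedule/NoExecute taint must be tolerated; PreferNoSchedule is ignored here."""
    return all(
        t.effect == "PreferNoSchedule" or any(tolerates(tol, t) for tol in tolerations)
        for t in node.taints
    )


def passes_affinity(node: Node, required_labels: Dict[str, str]) -> bool:
    """Required node affinity, reduced to exact label equality for this sketch."""
    return all(node.labels.get(k) == v for k, v in required_labels.items())


def candidate_nodes(nodes: List[Node], tolerations: List[Toleration],
                    required_labels: Dict[str, str]) -> List[str]:
    return [n.name for n in nodes
            if passes_taints(n, tolerations) and passes_affinity(n, required_labels)]


nodes = [
    Node("payments-1", {"dedicated": "payments"}, [Taint("dedicated", "payments", "NoSchedule")]),
    Node("general-1"),
]
payments_tolerations = [Toleration("dedicated", "Equal", "payments", "NoSchedule")]

print(candidate_nodes(nodes, payments_tolerations, {}))                         # toleration only
print(candidate_nodes(nodes, payments_tolerations, {"dedicated": "payments"}))  # plus affinity
print(candidate_nodes(nodes, [], {}))                                           # other workloads
```

With only the toleration, the payments pod can land on either node. Adding the affinity rule pins it to payments-1, and pods without the toleration never reach payments-1.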

Documenting and Reviewing Your Taints

Maintain clear documentation of your taint and toleration strategy. This includes which nodes are tainted, the effect of each taint, and the corresponding tolerations applied to your pods. Regularly review your taint configuration to ensure it still aligns with your cluster's needs and identify any potential conflicts or redundancies. A well-documented approach simplifies troubleshooting and collaboration within your team. This article on understanding taints and tolerations emphasizes their importance for effective pod scheduling.

Testing Taints in a Staging Environment

Before applying taints to your production cluster, test them in a staging environment. This lets you validate their behavior and catch unintended consequences, such as pods that can no longer be scheduled or unexpected evictions, before they affect your application's functionality. This practice is crucial for minimizing the risk of production incidents, as discussed in this article on scaling Kubernetes clusters.

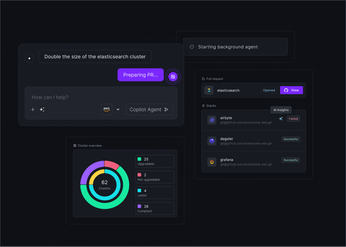

Managing Taints and Tolerations at Scale with Plural

As your Kubernetes deployments grow, managing taints and tolerations across a large number of nodes and pods can become complex. Manually applying and tracking taints with kubectl can be time-consuming and error-prone. Plural simplifies these tasks, offering a centralized and automated way to manage these crucial scheduling components.

Plural's GitOps-based approach lets you define and manage taints declaratively within your Git repositories. This means you can treat taints and tolerations as code, versioning them, and applying them consistently across your entire Kubernetes fleet. Instead of manually applying taints to individual nodes, you define the desired state in your Git repository, and Plural ensures that this state is reflected in your clusters. This simplifies management and provides a clear audit trail of all changes.

Plural also integrates with other Kubernetes management tasks, such as deployments and updates. This allows you to coordinate taints and tolerations with other changes, ensuring that your scheduling policies are always in sync with your application deployments. For example, you can automatically taint nodes during an upgrade process and then remove the taint once the upgrade is complete, minimizing disruption. This automation is essential for managing large, complex Kubernetes deployments efficiently.

Using Plural helps avoid the manual overhead and potential inconsistencies of managing taints and tolerations at scale. This frees you to focus on deploying and managing your applications instead of low-level Kubernetes configurations. To learn more, book a demo.

Advanced Taints and Troubleshooting

This section dives into more nuanced uses of taints and tolerations, along with practical troubleshooting advice.

Taint-Based Evictions

Taints primarily control pod scheduling. However, the NoExecute effect, combined with tolerations, also offers a powerful mechanism for evictions. Imagine needing to drain a node for maintenance. Instead of directly evicting pods, apply a NoExecute taint to the node. Pods without a matching toleration are evicted immediately. Pods with a matching toleration remain, either indefinitely or until their tolerationSeconds period expires. This lets you keep selected workloads on the node or give them extra time before they move. This approach provides fine-grained control, especially when combined with node affinity rules.

Using Multiple Taints

A single node can have multiple taints, and a pod can have multiple tolerations. Kubernetes evaluates every taint on the node against the pod's tolerations. If a pod doesn't tolerate even one NoSchedule or NoExecute taint on a node, it won't be scheduled there; untolerated PreferNoSchedule taints only lower the node's priority. This allows for complex scheduling logic.

For example, you might have one taint for hardware type (e.g., gpu=true:NoSchedule) and another for environment (e.g., env=production:PreferNoSchedule). A pod must tolerate the gpu taint to be scheduled on such a node at all. If it also tolerates the env taint, the scheduler no longer deprioritizes the node for that pod. This behavior is documented in the Kubernetes documentation.

Common Taint Challenges and Solutions

While powerful, taints and tolerations can present challenges. Overly specific configurations can lead to scheduling conflicts. For instance, requiring exact matches on multiple labels through node affinity, combined with tolerations that cover only a few tainted nodes, can severely restrict where a pod can run. Start with broader constraints and progressively refine them.

Another common issue is misconfigured tolerations: make sure the key, value, effect, and operator in each toleration line up with the node's taints.

Managing taints and tolerations across a large fleet can also become complex. Consider using tools like Plural to manage and automate these configurations. Enterprise-scale Kubernetes deployments often face challenges in maintaining consistency and interoperability across environments, and proper taint management is crucial for addressing them. For more context, see this post on Enterprise Kubernetes challenges.

Debugging Taints and Tolerations

Troubleshooting taint and toleration issues requires a systematic approach:

1. Verify the taints on your nodes using kubectl describe node <node-name>.
2. Inspect the tolerations defined in your pod specifications. Pay close attention to the operator used in each toleration (Equal or Exists), and make sure the key, value, and effect line up with the taint.
3. Use kubectl get events, or kubectl describe pod <pod-name>, to find FailedScheduling events. These report the nodes that were rejected because of taints the pod doesn't tolerate.

Kubernetes observability tools can also help monitor the effects of taints and tolerations on pod scheduling and node utilization. If you're still stuck, check the Kubernetes documentation for detailed explanations and examples.

Monitoring and Optimizing Taint Usage

After you implement taints and tolerations, ongoing monitoring and optimization are crucial for a healthy Kubernetes cluster. This ensures efficient resource utilization and helps you avoid unexpected scheduling issues.

Tools for Taint Management

While kubectl provides the basic functionality for managing taints, several tools can simplify management, especially at scale. Consider integrating taint management into your broader Kubernetes tooling. For example, you can leverage a platform like Plural to manage your Kubernetes deployments and gain a centralized view of your taint configuration across your entire fleet. This single pane of glass simplifies monitoring and allows you to quickly identify any misconfigurations or inconsistencies. You can also explore open-source projects that extend kubectl's capabilities, offering features like automated taint application and removal based on node conditions or metrics.

Performance Implications of Taints

Taints themselves have a negligible performance impact on the Kubernetes scheduler, which evaluates taints and tolerations as a lightweight step in its filtering and scoring. However, inefficient or excessive taint use can indirectly affect scheduling outcomes. For instance, if many pods can run only on a small set of tainted nodes, those pods may sit in Pending until capacity frees up, which increases the time it takes to schedule them.

Similarly, incorrect taint use can lead to resource starvation or underutilization. Over-tainting nodes restricts pod placement and prevents the scheduler from distributing workloads efficiently. Following best practices for taint and toleration configuration is crucial for optimizing resource utilization and application reliability. For practical guidance on configuring taints and tolerations, see this tutorial.

Scaling Taint Strategies

Managing taints across a large number of clusters presents unique challenges. As your Kubernetes footprint grows, maintaining consistency and avoiding configuration drift becomes increasingly complex. Implementing a robust GitOps approach can help manage taints at scale. By storing your taint configurations in a Git repository, you can track changes, enforce consistency, and automate deployments across your entire fleet. This also enables you to roll back changes easily if necessary. For more on implementing GitOps, see Plural's features.

Furthermore, consider using tools that provide a centralized view of your taint configurations across all clusters. This simplifies monitoring and allows you to quickly identify and address any inconsistencies or misconfigurations, which is especially important when dealing with enterprise-scale Kubernetes deployments. Scaling Kubernetes effectively requires careful planning and the right tools. This article discusses the challenges and solutions for enterprise Kubernetes.

Remember that as your cluster scales, monitoring becomes increasingly critical. Without proper monitoring, detecting bottlenecks or failures related to taint misconfiguration can be challenging. Tools that provide insights into pod scheduling and resource utilization can help you identify and address any performance issues related to taints. By mastering taints, tolerations, and node affinity, you can effectively manage workload distribution, ensuring critical services receive the necessary resources. This blog post offers a deeper dive into these concepts.

Real-World Examples of Taints and Tolerations

Let's illustrate the practical application of taints and tolerations with a couple of real-world scenarios. These examples demonstrate how these Kubernetes features enhance application reliability and resource management.

Example: Protecting Critical Applications During Node Failures

Taints are invaluable for handling node failures gracefully. Imagine one of your Kubernetes nodes becomes unresponsive due to a hardware issue. When the node stops reporting to the control plane, Kubernetes' node controller automatically applies the built-in taint node.kubernetes.io/unreachable to it, with both the NoSchedule and NoExecute effects. The NoSchedule taint immediately prevents the scheduler from placing new pods onto the compromised node, minimizing the impact on your overall application performance.

Existing pods are not removed right away. Unless a pod specifies otherwise, Kubernetes gives it a toleration for node.kubernetes.io/unreachable:NoExecute with tolerationSeconds: 300. The pod therefore stays bound to the node for five minutes, which lets brief network interruptions resolve without rescheduling. For more details on how taints interact with the scheduler, refer to the Kubernetes documentation on taints and tolerations.

If the node is still unreachable after that period, the NoExecute taint evicts its pods, and controllers such as Deployments recreate them on healthy nodes. You can tune this per workload by setting your own tolerationSeconds for node.kubernetes.io/unreachable. A shorter value moves latency-sensitive pods sooner, while a longer one keeps pods that are expensive to restart in place through short outages. For planned maintenance, apply a taint with your own key or use kubectl drain rather than setting the built-in keys by hand. This controlled approach minimizes disruption to your applications during both unforeseen incidents and planned maintenance. For a deeper dive into managing node failures with taints, check out Komodor's practical guide on taints and tolerations.

Example: Utilizing Spot Instances with Taints and Tolerations

Another common use case for taints and tolerations involves leveraging cost-effective spot instances from cloud providers. Spot instances offer significant cost savings compared to on-demand instances, but they can be interrupted with short notice. To manage this potential disruption, you can taint your spot instance nodes with a specific taint, such as cloudprovider.com/spot-instance=true:NoSchedule. Then, configure your less critical applications, the ones that can tolerate interruption, with a corresponding toleration. This keeps critical applications without the toleration off the spot nodes while allowing the tolerating applications to run there. To make those applications prefer spot capacity rather than merely accept it, add a preferred node affinity rule for a label that identifies your spot nodes.

If a spot instance is reclaimed, the node disappears, and the pods that were running on it are recreated on other nodes by their controllers, such as Deployments. This strategy allows you to take advantage of spot instance pricing without jeopardizing the availability of your critical applications. Taints complement node affinity and anti-affinity rather than replacing them: affinity attracts pods to nodes, while taints keep unwanted pods away. Together they provide a robust way to balance resource costs and application resilience. Cast.ai offers valuable best practices for using taints and tolerations, particularly in scenarios involving spot instances and other specialized node configurations.
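The spot-instance pattern can be sketched as a filter followed by a preference. The Python model below is a simplification with hypothetical names:

- Taints are matched by key only.
- The lifecycle=spot node label is an assumption; use whatever label your provider or node pools set.
- A single preferred node affinity term adds a fixed weight to matching nodes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

SPOT_TAINT_KEY = "cloudprovider.com/spot-instance"  # taint key from the spot example


@dataclass
class Node:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    taint_keys: List[str] = field(default_factory=list)  # NoSchedule taints, by key


def pick_node(nodes: List[Node], tolerated_keys: Set[str],
              preferred_labels: Dict[str, str], weight: int = 100) -> Optional[str]:
    """Filter on taints, then favor nodes matching a preferred affinity term."""
    feasible = [n for n in nodes if all(k in tolerated_keys for k in n.taint_keys)]
    if not feasible:
        return None

    def score(node: Node) -> int:
        matches = all(node.labels.get(k) == v for k, v in preferred_labels.items())
        return weight if preferred_labels and matches else 0

    return max(feasible, key=score).name  # ties go to the first node listed


nodes = [
    Node("on-demand-1", {"lifecycle": "on-demand"}),
    Node("spot-1", {"lifecycle": "spot"}, [SPOT_TAINT_KEY]),
]
print(pick_node(nodes, set(), {}))                                # critical pod
print(pick_node(nodes, {SPOT_TAINT_KEY}, {}))                     # toleration only
print(pick_node(nodes, {SPOT_TAINT_KEY}, {"lifecycle": "spot"}))  # toleration + preference
```

The critical pod never reaches spot-1. The toleration alone leaves the choice to other factors, modeled here as a tie. Only the added preference steers the pod to spot capacity.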

Mastering Kubernetes Taints for Efficient Cluster Management

Taints and tolerations are key mechanisms in Kubernetes for controlling pod scheduling. They add a layer of control beyond basic resource requests and limits, allowing you to influence where pods land based on node characteristics. Mastering these concepts is crucial for optimizing resource utilization and ensuring application reliability. This section provides practical guidance and best practices for using taints effectively.

Review: What are Kubernetes Taints?

Taints are properties applied to a node, separate from its labels, that repel pods. They signal that a node has specific attributes, such as dedicated hardware like GPUs or a temporary condition like undergoing maintenance. A taint doesn't prevent all pods from scheduling; instead, it requires pods to explicitly express a willingness to run on the tainted node through a corresponding toleration. This allows you to reserve nodes for particular workloads without completely locking them down.

Review: How Taints Control Scheduling

When Kubernetes schedules a pod, it checks the taints on each node. If a node has a NoSchedule or NoExecute taint that the pod doesn't tolerate, the pod won't be scheduled there; an untolerated PreferNoSchedule taint only makes the node less preferred. This mechanism lets you keep general pods off nodes with specialized attributes. For example, you might taint a node with gpu=true:NoSchedule so that only pods tolerating this taint can run on it. This prevents regular pods from consuming resources on specialized hardware. For a deeper dive into taints and tolerations, check out this practical guide.

Review: Taint Effects

Taints have three different effects, controlling how strictly they repel pods:

- NoSchedule: This is the most common effect. Pods without a matching toleration are not scheduled on the tainted node. Existing pods on the node remain unaffected.

- PreferNoSchedule: This effect is less strict. The scheduler tries to avoid placing non-tolerating pods on the tainted node, but if no other suitable nodes are available, it will still schedule them there. This is useful for nodes you want to keep mostly free for specific workloads without making them completely off-limits when the cluster runs short of capacity.

- NoExecute: This is the strongest effect. Pods without a matching toleration are not only prevented from being scheduled but are also evicted if they are already running on the node. This is typically used for situations like node maintenance or draining a node before termination.

This tutorial offers a comprehensive explanation of taint effects.

Review: Taints and Tolerations

Tolerations are defined within a pod's specification and act as the counterpart to taints. They declare a pod's ability to run on a node with a specific taint.

Review: How Tolerations Work

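A toleration matches a taint when their keys and effects match and, for the default Equal operator, their values match too (the Exists operator matches any value). If a pod has a toleration that matches a taint on a node, that taint no longer keeps the pod off the node. A toleration for a NoExecute taint can also specify tolerationSeconds, which determines how long the pod stays bound to the node after the taint is added (for example, after the node becomes unreachable) before it is evicted; without tolerationSeconds, the pod tolerates the taint indefinitely. For more on how tolerations interact with taints, see this guide.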
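The `tolerationSeconds` behavior can be sketched as a function that returns how long a running pod survives on a node with NoExecute taints. This is an approximation under assumptions: `eviction_delay` is hypothetical, and taking the first matching toleration per taint and the smallest limit across them is our reading of how the node lifecycle controller behaves. The 300-second toleration mirrors the ones the DefaultTolerationSeconds admission plugin normally adds for `node.kubernetes.io/not-ready` and `node.kubernetes.io/unreachable`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""
    toleration_seconds: Optional[int] = None  # only meaningful for NoExecute


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def eviction_delay(taints: list[Taint], tolerations: list[Toleration]) -> Optional[int]:
    """Seconds until a running pod is evicted: 0 means now, None means never."""
    used = []
    for taint in taints:
        if taint.effect != "NoExecute":
            continue  # only NoExecute taints evict running pods
        match = next((tol for tol in tolerations if tolerates(tol, taint)), None)
        if match is None:
            return 0  # an untolerated NoExecute taint evicts immediately
        used.append(match)
    limits = [tol.toleration_seconds for tol in used if tol.toleration_seconds is not None]
    if not limits:
        return None  # every NoExecute taint is tolerated indefinitely
    return max(0, min(limits))  # the tightest limit wins


unreachable = Taint("node.kubernetes.io/unreachable", "", "NoExecute")
default_tol = Toleration(
    key="node.kubernetes.io/unreachable",
    operator="Exists",
    effect="NoExecute",
    toleration_seconds=300,
)

print(eviction_delay([unreachable], [default_tol]))  # 300
print(eviction_delay([unreachable], []))  # 0
```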

Review: Matching Taints and Tolerations

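For a toleration to match a taint, three conditions must hold. The keys must be equal, except that a toleration with an empty key and operator Exists matches every key. The values must be equal when the operator is Equal (the default); with Exists, the value is ignored. The effects must be equal, unless the toleration leaves its effect empty, in which case it matches all effects. Effects are not ranked: a toleration with effect=NoExecute does not tolerate a taint with effect=NoSchedule. To tolerate a key under every effect, omit the effect from the toleration.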
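The matching rule fits in a few lines of Python. This sketch is modeled on our reading of the upstream `ToleratesTaint` logic; the class and function names here are illustrative, and the checks show each case from the rule, including the fact that a NoExecute toleration does not cover a NoSchedule taint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""


def tolerates(tol: Toleration, taint: Taint) -> bool:
    # An empty toleration effect matches every effect; otherwise they must be equal.
    if tol.effect and tol.effect != taint.effect:
        return False
    # An empty key (only valid with Exists) matches every key.
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True  # Exists ignores the value
    # Equal, the default operator, also requires the values to match.
    return tol.operator == "Equal" and tol.value == taint.value


gpu_taint = Taint("gpu", "true", "NoSchedule")

checks = {
    "exact key, value and effect": Toleration("gpu", "Equal", "true", "NoSchedule"),  # True
    "Exists ignores the value": Toleration("gpu", "Exists", "", "NoSchedule"),  # True
    "empty effect matches any effect": Toleration("gpu", "Equal", "true", ""),  # True
    "empty key with Exists matches every taint": Toleration("", "Exists"),  # True
    "different value": Toleration("gpu", "Equal", "false", "NoSchedule"),  # False
    "NoExecute does not cover NoSchedule": Toleration("gpu", "Equal", "true", "NoExecute"),  # False
}
for label, tol in checks.items():
    print(f"{label}: {tolerates(tol, gpu_taint)}")
```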

Review: Using kubectl with Taints

kubectl provides commands for managing taints on nodes:

- Apply Taints to Nodes: Use `kubectl taint node <node-name> key=value:effect`. For example, `kubectl taint node worker-1 gpu=true:NoSchedule`.

- Remove Taints from Nodes: Use `kubectl taint node <node-name> key=value:effect-` (note the trailing hyphen). For example, `kubectl taint node worker-1 gpu=true:NoSchedule-`.

- View Node Taints: Use `kubectl describe node <node-name>` to view the taints applied to a node.
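The taint argument syntax used above can be illustrated with a small parser. `parse_taint_arg` and `TaintSpec` are hypothetical helpers, a simplified approximation of how kubectl reads the argument: a trailing hyphen means removal, the part after the colon is the effect, and the value is optional. It also accepts the key-only form `gpu-`, which kubectl uses to remove all taints with that key. It does not validate key or value syntax.

```python
from typing import NamedTuple, Optional


class TaintSpec(NamedTuple):
    key: str
    value: Optional[str]
    effect: Optional[str]
    remove: bool


VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


def parse_taint_arg(arg: str) -> TaintSpec:
    """Parse 'key[=value]:effect' (add) or 'key[=value][:effect]-' (remove)."""
    remove = arg.endswith("-")
    if remove:
        arg = arg[:-1]
    key_value, sep, effect = arg.partition(":")
    effect_or_none = effect if sep else None
    key, eq, value = key_value.partition("=")
    value_or_none = value if eq else None
    if not key:
        raise ValueError("taint key must not be empty")
    if effect_or_none is not None and effect_or_none not in VALID_EFFECTS:
        raise ValueError(f"unknown taint effect: {effect_or_none!r}")
    if not remove and effect_or_none is None:
        raise ValueError("adding a taint requires an effect")
    return TaintSpec(key, value_or_none, effect_or_none, remove)


print(parse_taint_arg("gpu=true:NoSchedule"))
# TaintSpec(key='gpu', value='true', effect='NoSchedule', remove=False)
print(parse_taint_arg("gpu=true:NoSchedule-"))
# TaintSpec(key='gpu', value='true', effect='NoSchedule', remove=True)
```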

Review: Common Taint Use Cases

- Dedicate Nodes for Specific Workloads: Taints are ideal for reserving nodes for specific applications, such as those requiring GPUs or other specialized hardware.

- Manage Specialized Hardware: Taints ensure that only pods designed for specific hardware are scheduled on those nodes, preventing resource conflicts and optimizing performance.

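- Handle Node Maintenance and Failures: Use taints with the NoExecute effect to evict pods from a node before maintenance or in case of impending failure, so that pods managed by controllers such as Deployments are rescheduled elsewhere. Kubernetes applies such taints automatically when a node becomes not-ready or unreachable. For planned maintenance, keep in mind that taint-based eviction does not honor PodDisruptionBudgets, so `kubectl drain` is usually the gentler option.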

Review: Best Practices

- Use Taints Sparingly: Overuse of taints can lead to complex scheduling rules and make troubleshooting difficult. Start with a minimal set of taints and add more only as needed.

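- Combine Taints with Node Affinity: A taint keeps other pods off a node, but a toleration only permits a pod to run there; it doesn't attract the pod. To make sure dedicated workloads actually land on the reserved nodes, pair the taint with node affinity (or a nodeSelector) on a node label, which controls placement from the other direction.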

- Document and Review Taints: Maintain clear documentation of your taint strategy to ensure that everyone on your team understands the purpose of each taint and its potential impact.

- Test Taints in Staging: Before applying taints to production clusters, thoroughly test them in a staging environment to avoid unexpected disruptions. This guide provides further information on working with taints and tolerations in Kubernetes.
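The taint-plus-affinity pairing recommended above can be sketched as two filters applied together. In this hypothetical model, `required_labels` stands in for a required node affinity with a single `In` term (or a nodeSelector), and the `hardware=gpu` label and node names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""


@dataclass
class Node:
    name: str
    labels: dict[str, str]
    taints: list[Taint]


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def feasible_nodes(nodes: list[Node], tolerations: list[Toleration], required_labels: dict[str, str]) -> list[str]:
    """Names of nodes that pass both the taint filter and the required label match."""
    result = []
    for node in nodes:
        # Taints repel: every hard taint must be tolerated.
        taints_ok = all(
            any(tolerates(tol, t) for tol in tolerations)
            for t in node.taints
            if t.effect in ("NoSchedule", "NoExecute")
        )
        # Affinity attracts: the node must carry every required label.
        labels_ok = all(node.labels.get(k) == v for k, v in required_labels.items())
        if taints_ok and labels_ok:
            result.append(node.name)
    return result


cluster = [
    Node("gpu-1", {"hardware": "gpu"}, [Taint("gpu", "true", "NoSchedule")]),
    Node("general-1", {}, []),
]
gpu_tolerations = [Toleration("gpu", "Equal", "true", "NoSchedule")]

print(feasible_nodes(cluster, [], {}))  # ['general-1']
print(feasible_nodes(cluster, gpu_tolerations, {}))  # ['gpu-1', 'general-1']
print(feasible_nodes(cluster, gpu_tolerations, {"hardware": "gpu"}))  # ['gpu-1']
```

With only the toleration, the GPU pod could still land on `general-1`; adding the label requirement confines it to the reserved node, while the taint keeps regular pods off it.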

Conclusion

Mastering Kubernetes taints is essential for managing your clusters efficiently. Think of taints as tools for steering workloads: they keep pods off the nodes they don't belong on and, combined with tolerations and node affinity, help specific workloads land on the right nodes. By understanding the different taint effects (`NoSchedule`, `PreferNoSchedule`, and `NoExecute`), you gain granular control over pod placement, reserving resources for critical applications and protecting nodes from incompatible workloads. As described in the Kubernetes documentation, using taints strategically is key to optimizing resource utilization and maintaining a healthy, reliable cluster.

However, like any powerful tool, taints should be used judiciously. Overuse can lead to complex configurations that become difficult to manage and debug. Start with simpler solutions like node affinity for general workload placement, and reserve taints for situations where you need to explicitly prevent pods from running on certain nodes. This strategic approach, advocated by resources like this tutorial on configuring taints, helps maintain clarity and simplifies troubleshooting. For managing taints and other Kubernetes configurations at scale, consider a platform like Plural, which provides a centralized view and streamlines management across your entire fleet.

Testing your taint strategy in a non-production environment is crucial. This allows you to validate the behavior of your taints and tolerations without risking disruption to your live applications. Thorough testing, as recommended in this guide to taints and tolerations, helps identify and resolve any unintended consequences before they impact your production workloads. For more complex scenarios, combining taints with node affinity, as discussed in articles like this one on mastering node affinity and taint challenges, offers a more nuanced approach to scheduling, allowing for greater flexibility and control.



Frequently Asked Questions

How do taints differ from node selectors or node affinity in Kubernetes?

Node selectors and affinity are ways to attract pods to specific nodes based on labels. Taints, on the other hand, repel pods from nodes unless the pod has a matching toleration. Think of selectors and affinity as "welcome" signs, while taints are "keep out" signs with exceptions granted through tolerations. Use selectors and affinity when you want to encourage pods to run on certain nodes, and use taints when you need to restrict pods from running on specific nodes.

What happens if a pod doesn't have a toleration for a taint on a node?

If a node has a taint and a pod doesn't have a matching toleration, the outcome depends on the taint's effect. With NoSchedule, the scheduler won't place the pod on that node and looks for another suitable node. With PreferNoSchedule, the scheduler tries to avoid the node but may still use it if nothing better is available. With NoExecute, the pod isn't scheduled there, and if it's already running on the node, it is evicted.

Can a node have multiple taints, and can a pod have multiple tolerations?

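Yes, a node can have multiple taints, and a pod can have multiple tolerations. Kubernetes ignores every taint that at least one of the pod's tolerations matches; the remaining, untolerated taints determine the outcome. If even one of them has the NoSchedule or NoExecute effect, the pod won't be scheduled there, and an untolerated NoExecute taint also evicts the pod if it's already running. Untolerated PreferNoSchedule taints only make the scheduler try to avoid the node. This allows for complex scheduling logic based on various node characteristics and pod requirements.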
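Here is a small Python sketch of that multi-taint evaluation. The `classify` helper and the `team` and `spot` taints are hypothetical; the point is that tolerating one hard taint is not enough when another remains, and that leftover soft taints only lower the node's preference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"
    value: str = ""
    effect: str = ""


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.operator == "Equal" and tol.value == taint.value


def classify(taints: list[Taint], tolerations: list[Toleration]) -> tuple[list[str], list[str]]:
    """Split untolerated taint keys into hard blockers and soft preferences."""
    untolerated = [t for t in taints if not any(tolerates(tol, t) for tol in tolerations)]
    hard = [t.key for t in untolerated if t.effect in ("NoSchedule", "NoExecute")]
    soft = [t.key for t in untolerated if t.effect == "PreferNoSchedule"]
    return hard, soft


node_taints = [
    Taint("gpu", "true", "NoSchedule"),
    Taint("team", "ml", "NoSchedule"),
    Taint("spot", "true", "PreferNoSchedule"),
]
partial = [Toleration("gpu", "Equal", "true", "NoSchedule")]
full = partial + [Toleration("team", "Equal", "ml", "NoSchedule")]

print(classify(node_taints, partial))  # (['team'], ['spot']) -> blocked
print(classify(node_taints, full))  # ([], ['spot']) -> allowed, but not preferred
```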

How can I troubleshoot issues related to taints and tolerations?

Start by inspecting the taints on your nodes using kubectl describe node <node-name>. Then, check the tolerations defined in your pod specifications using kubectl describe pod <pod-name>. Ensure the keys, values, and effects match correctly. Finally, look at Kubernetes events using kubectl get events (or the Events section of kubectl describe pod) for FailedScheduling entries that mention taints.
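When many nodes are involved, a short script can list which taints block a given pod on each node. This is a hypothetical helper, `blocking_taints`, that reads the JSON produced by `kubectl get nodes -o json` and `kubectl get pod <pod-name> -o json`; the inline sample data is made up, and the matching rule is the simplified model described in this article.

```python
import json


def tolerates(tol: dict, taint: dict) -> bool:
    """Match one toleration against one taint (API JSON field names)."""
    if tol.get("effect") and tol["effect"] != taint.get("effect"):
        return False
    if tol.get("key") and tol["key"] != taint.get("key"):
        return False
    operator = tol.get("operator") or "Equal"
    if operator == "Exists":
        return True
    return operator == "Equal" and tol.get("value", "") == taint.get("value", "")


def blocking_taints(nodes_json: str, pod_json: str) -> dict[str, list[str]]:
    """Map each node name to the hard taints the pod does not tolerate."""
    nodes = json.loads(nodes_json)["items"]
    tolerations = json.loads(pod_json)["spec"].get("tolerations") or []
    report = {}
    for node in nodes:
        taints = node["spec"].get("taints") or []  # untainted nodes omit the field
        report[node["metadata"]["name"]] = [
            f'{t["key"]}={t.get("value", "")}:{t["effect"]}'
            for t in taints
            if t["effect"] in ("NoSchedule", "NoExecute")
            and not any(tolerates(tol, t) for tol in tolerations)
        ]
    return report


# Made-up sample data in the shape of kubectl's JSON output.
NODES_JSON = """
{"items": [
  {"metadata": {"name": "worker-1"},
   "spec": {"taints": [{"key": "gpu", "value": "true", "effect": "NoSchedule"}]}},
  {"metadata": {"name": "worker-2"}, "spec": {}}
]}
"""
POD_JSON = """
{"spec": {"tolerations": [
  {"key": "node.kubernetes.io/not-ready", "operator": "Exists",
   "effect": "NoExecute", "tolerationSeconds": 300}
]}}
"""

print(blocking_taints(NODES_JSON, POD_JSON))
# {'worker-1': ['gpu=true:NoSchedule'], 'worker-2': []}
```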

What are some best practices for using taints and tolerations effectively?

Use taints sparingly. Overuse can lead to complex configurations. Combine taints with node affinity for more nuanced scheduling. Document your taint strategy clearly. Test your taint and toleration configurations in a staging environment before applying them to production. Consider using a platform like Plural to manage taints across a large number of clusters.
