Kubernetes Informers: A Practical Guide for DevOps

Learn how Kubernetes informers can streamline event management, improve performance, and enhance resource monitoring in your cluster with this comprehensive guide.

Kubernetes is powerful, but keeping up with its constant changes can be tough. Applications can easily get overwhelmed by the sheer volume of events. Kubernetes informers offer an elegant solution, acting as a smart filter between your application and the Kubernetes API server. This post is your guide to understanding and using Kubernetes informers. We'll cover everything from basic setup and mechanics to advanced usage with custom resources, so you can monitor Kubernetes events efficiently and build more responsive applications. We'll also share practical tips for monitoring deployment events and for event monitoring across the cluster.

Key Takeaways

- Dynamic informers efficiently track Kubernetes resource changes, including CRDs. They maintain a local cache, reducing API server load and improving application responsiveness. This makes them ideal for dynamic environments and custom resources.

- Responsive Kubernetes applications rely on well-structured event handling. Decoupling event processing with queues enables better error handling, retries, and prioritization, leading to more resilient and manageable applications.

- Thorough testing and debugging are essential for reliable dynamic informer operation. Simulate various events and errors to validate informer logic. Monitor resource usage and RBAC configurations for efficiency and security. Ensure cache synchronization before processing events to avoid using outdated information.

Understanding Kubernetes Dynamic Informers

Working with Kubernetes often means juggling lots of moving pieces. You’re dealing with deployments, services, pods—a whole ecosystem of resources that are constantly changing. Dynamic informers help you keep track of all this activity without overwhelming your cluster. They provide an efficient way to stay updated on the state of your Kubernetes resources. This section breaks down what dynamic informers are, how they function, and when they're the right choice for your Kubernetes project.

What is a Kubernetes Dynamic Informer?

A dynamic informer is a client-side component that watches for changes to resources within your cluster. Unlike their static (typed) counterparts, dynamic informers don't require compiled Go types for the resource. You identify the resource by its group, version, and resource name, and the informer delivers objects in a generic unstructured form. This makes them incredibly useful for working with Custom Resource Definitions (CRDs), which extend the standard Kubernetes API. As David Bond points out in his article on dynamic informers, they can handle add/update/delete notifications for any cluster resource, including these CRDs. This flexibility is a key advantage when dealing with evolving or customized Kubernetes setups.

How Kubernetes Dynamic Informers Work

Imagine having a dedicated helper constantly monitoring your Kubernetes cluster. That's essentially what a dynamic informer does. It acts as a local cache and a change notifier, keeping a copy of relevant resource data and alerting your application when something changes. This offloads the work from the Kubernetes API server, improving efficiency and reducing the risk of overloading it. Instead of constantly polling the API server, your application receives targeted updates through the informer. As explained in this tutorial, informers simplify event management by abstracting away the complexities of the Kubernetes API, allowing developers to focus on application logic. This means less boilerplate code and more time spent on the core functionality of your application. A FireHydrant blog post further emphasizes how dynamic informers offer flexibility and scalability for building event-driven applications.

The Role of the SharedInformerFactory

Managing numerous informers for different Kubernetes resources can quickly become complex. The SharedInformerFactory provides a streamlined solution. It acts as a central manager for your informers: you request informers from it, start them all with a single call, and stop them through a shared stop channel, as described in the official client-go documentation. Critically, the factory also exposes cache synchronization across all the informers it has started, a vital step before processing any resource events. For dynamic informers, client-go provides a dynamic variant of the factory in its dynamicinformer package.

Beyond lifecycle management, the SharedInformerFactory promotes code reusability. If different parts of your application require the same informer, the factory provides a single, shared instance. This eliminates redundant calls to the Kubernetes API server, optimizing performance and minimizing server load. By centralizing informer management, the SharedInformerFactory simplifies your codebase and improves maintainability. For a deeper dive into shared informers, check out this blog post.

Understanding WaitForCacheSync

Before your application can rely on data from informers, you need to ensure the informer caches are synchronized with the current state of your Kubernetes cluster. WaitForCacheSync provides this guarantee. This function, as explained by Mario Macias in his informative blog post, blocks until each informer's cache has been populated by its initial list from the Kubernetes API server, or until the stop channel is closed, in which case it returns false. This synchronization is crucial: without it, a lookup can miss objects that exist in the cluster but have not reached the cache yet, which leads to inconsistencies and errors.
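The blocking behavior described above can be sketched with the standard library alone. This is a simplified model of the idea, not client-go's implementation: `waitForSync` and its polling interval are hypothetical names chosen for illustration, and each `hasSynced` function stands in for an informer's "initial list finished" check.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// waitForSync polls every hasSynced function until all of them return true
// or stop is closed. It returns true only if every cache reported synced.
// Hypothetical helper: a stand-in for the idea behind cache.WaitForCacheSync.
func waitForSync(stop <-chan struct{}, interval time.Duration, hasSynced ...func() bool) bool {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		allSynced := true
		for _, synced := range hasSynced {
			if !synced() {
				allSynced = false
				break
			}
		}
		if allSynced {
			return true
		}
		select {
		case <-stop:
			return false
		case <-ticker.C:
		}
	}
}

func main() {
	var ready atomic.Bool
	// Pretend the initial list completes after 20ms.
	go func() {
		time.Sleep(20 * time.Millisecond)
		ready.Store(true)
	}()

	stop := make(chan struct{})
	if !waitForSync(stop, 5*time.Millisecond, ready.Load) {
		fmt.Println("stopped before the cache synced; refusing to process events")
		return
	}
	fmt.Println("cache synced; safe to read from the local store")
}
```

The important part is the return value: treating `false` as "do not start workers" keeps the application from acting on a partially filled cache.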

Consider the importance of having an up-to-date map for navigation. Similarly, your application needs the latest information about your Kubernetes resources. WaitForCacheSync ensures you have a current snapshot of your cluster state before your application uses that data. This synchronization is fundamental for building reliable and consistent Kubernetes applications. Plural simplifies this process, ensuring your applications always have the most current data. Book a demo to see how.

Resource Retrieval with Indexers

While informers offer a convenient way to access Kubernetes resources, the default namespace/name lookup isn't always sufficient. Indexers provide a more powerful and efficient retrieval mechanism. Macias' blog post highlights how informers allow you to create custom indexes based on specific resource fields. These indexes enable targeted retrieval based on criteria like labels, annotations, or other relevant fields. For instance, you could create an index to quickly find all Pods with a specific IP address or all Deployments with a particular label, avoiding costly iteration through the entire cache.
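To make the indexing idea concrete, here is a minimal sketch of a secondary index built with plain Go maps. The `Pod`, `Index`, and `IndexFunc` types are hypothetical stand-ins written for this example. In client-go the equivalent pieces are index functions registered on the informer and looked up by index name, but the code below does not use that API.

```go
package main

import (
	"fmt"
	"sort"
)

// Pod is a trimmed-down, hypothetical stand-in for a cached object.
type Pod struct {
	Namespace, Name, NodeName string
}

// IndexFunc returns the index values an object should be filed under.
type IndexFunc func(p Pod) []string

// Index is a minimal secondary index: index value -> set of object keys.
type Index struct {
	fn      IndexFunc
	byValue map[string]map[string]struct{}
}

func NewIndex(fn IndexFunc) *Index {
	return &Index{fn: fn, byValue: map[string]map[string]struct{}{}}
}

// Add files the pod's namespace/name key under each value fn returns.
func (i *Index) Add(p Pod) {
	key := p.Namespace + "/" + p.Name
	for _, v := range i.fn(p) {
		if i.byValue[v] == nil {
			i.byValue[v] = map[string]struct{}{}
		}
		i.byValue[v][key] = struct{}{}
	}
}

// Keys returns the sorted keys filed under value without scanning every object.
func (i *Index) Keys(value string) []string {
	keys := make([]string, 0, len(i.byValue[value]))
	for k := range i.byValue[value] {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	byNode := NewIndex(func(p Pod) []string { return []string{p.NodeName} })
	byNode.Add(Pod{"default", "web-1", "node-a"})
	byNode.Add(Pod{"default", "web-2", "node-b"})
	byNode.Add(Pod{"kube-system", "dns-1", "node-a"})
	fmt.Println(byNode.Keys("node-a")) // [default/web-1 kube-system/dns-1]
}
```

The lookup cost depends on how many objects share the index value, not on the size of the whole cache, which is where the benefit in large clusters comes from.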

This targeted retrieval, especially beneficial in large clusters, significantly improves performance and responsiveness. Indexers empower your application to efficiently access the precise resources it needs, streamlining interactions with your Kubernetes cluster. They are a valuable tool for optimizing resource access and building more performant Kubernetes applications. Plural leverages these indexing capabilities to provide efficient and flexible resource management. Explore our pricing plans to learn more.

Dynamic vs. Static Informers in Kubernetes: Performance and Use Cases

Choosing between a dynamic and a static (typed) informer depends on your specific needs. If you're working with standard, well-defined Kubernetes resources and your requirements are relatively stable, a static informer might be a good fit. You work with typed objects, and they can offer consistent throughput for predictable workloads. However, as a comparative analysis highlights, static approaches can struggle when data grows significantly or access patterns change, potentially leading to performance bottlenecks. Dynamic informers shine when you need more flexibility. They're particularly valuable when working with custom resources or in situations where importing canonical types is impractical, as noted in David Bond's article. If you anticipate needing to adapt to new resource types or are working with a rapidly evolving Kubernetes environment, dynamic informers offer the adaptability you need. At Plural, we leverage the power of dynamic informers to help our users manage complex Kubernetes environments efficiently. Check out our platform to see how we can simplify your Kubernetes operations. You can also book a demo to learn more.

Setting Up a Dynamic Informer in Kubernetes

This section provides a practical guide to setting up dynamic informers in your Kubernetes cluster. We'll break the process into digestible steps, starting with creating a Kubernetes client.

Creating a Kubernetes Client

First, you'll need a way to interact with your cluster. The k8s.io/client-go package provides the tools to create a client. This client acts as your bridge to the Kubernetes API, enabling you to manage resources and receive updates. For a more detailed walkthrough, check out this helpful guide on dynamic informers.

Initializing Your Dynamic Informer

With your Kubernetes client set up, you can initialize a dynamic informer. Unlike static informers tied to specific resource types, dynamic informers offer flexibility. They handle notifications about changes to any cluster resource, including CustomResourceDefinitions (CRDs). This makes them incredibly useful for managing evolving resources within your cluster. Initializing the dynamic informer sets up the listening mechanism for changes—additions, updates, and deletions. See this tutorial on dynamic Kubernetes informers for a practical example.

Configuring Kubernetes Resource Types and Selectors

After initializing your dynamic informer, you need to specify what it should watch. This involves configuring the resource types and selectors, which act as filters to narrow down the events your informer processes. Filtering with resource type and selector configuration optimizes performance by reducing overhead. Your application only reacts to relevant changes, rather than every single event in the cluster. This targeted approach ensures efficiency and prevents your application from being overwhelmed by unnecessary information.

Filtering Resources with FieldSelectors and LabelSelectors

Filtering is key to making the most of dynamic informers. You don't want your application bombarded with notifications for every single change in your cluster. That's where FieldSelectors and LabelSelectors come in. They act like fine-tuned filters, ensuring your informer only pays attention to the resources you actually care about. This targeted approach improves performance and reduces load on the API server by minimizing the amount of data retrieved.

FieldSelectors let you filter based on specific fields of a resource. For example, you might only want to watch pods in the Running phase (status.phase=Running) or those scheduled on a particular node (spec.nodeName). The set of supported fields varies by resource type, so check what the API accepts for the kind you watch. As explained in this resource on filtering objects, FieldSelectors are primarily for use with kubectl or other Kubernetes API clients, and aren't used internally by Kubernetes objects. They're a tool for querying and retrieving specific information, not for defining object relationships.

LabelSelectors, conversely, filter based on labels assigned to your resources. Labels are key-value pairs used to organize and categorize Kubernetes objects. Using LabelSelectors with your dynamic informer is like creating dynamic groups of resources. By watching for changes only to resources with specific labels, you can easily manage related components. This article on filtering with labels highlights how useful this is for dynamic group creation and resource management.
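As a rough illustration of what an equality-based label selector means, the following sketch checks labels in plain Go. The `matchesLabels` helper and the sample pod names are hypothetical. With a real informer the selector is normally passed to the API server in the list and watch options so that filtering happens server-side rather than in your process.

```go
package main

import "fmt"

// matchesLabels reports whether every key/value pair in selector is present
// in labels. An empty selector matches everything.
func matchesLabels(selector, labels map[string]string) bool {
	for k, want := range selector {
		if got, ok := labels[k]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	selector := map[string]string{"app": "checkout", "tier": "backend"}
	pods := map[string]map[string]string{
		"checkout-7d9f": {"app": "checkout", "tier": "backend", "version": "v2"},
		"checkout-web":  {"app": "checkout", "tier": "frontend"},
		"billing-1":     {"app": "billing", "tier": "backend"},
	}
	for name, labels := range pods {
		if matchesLabels(selector, labels) {
			fmt.Println("watching", name)
		}
	}
}
```

Only `checkout-7d9f` carries both labels, so it is the only pod the sketch reports. Extra labels on an object do not prevent a match.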

Combining FieldSelectors and LabelSelectors creates highly specific filters for your dynamic informers. This not only improves efficiency but also simplifies event management. This Medium article emphasizes that carefully configuring these selectors is crucial for reducing API server load and maintaining application responsiveness. Focusing on relevant events leads to more robust and efficient applications. At Plural, we use dynamic informers with filtering to help our users manage complex Kubernetes environments. Explore our platform or book a demo to learn more.

Managing Kubernetes Events

This section explains how to use dynamic informers to effectively manage the stream of events within your Kubernetes cluster. Knowing how to process these events is key to building responsive and resilient applications.

Handling Kubernetes Add, Update, and Delete Events

Dynamic informers provide a real-time view of the changes happening to your resources, allowing you to respond quickly and efficiently. They handle notifications for additions, updates, and deletions of any cluster resource, including CustomResourceDefinitions (CRDs). This constant stream of information keeps your application in sync with the cluster's current state.

To manage the complexity of these events, it's best to use a queue. Push the events from the informer onto a queue for another component (often a controller) to process. This separation of concerns simplifies your code and makes it easier to maintain. For a practical guide to implementing dynamic informers in Go, check out this tutorial. FireHydrant's blog also offers valuable insights into using dynamic informers effectively.

Categorizing and Prioritizing Events in Kubernetes

Kubernetes events provide valuable insights into your cluster's health and activity. They act as a detailed log of state changes and errors, which is invaluable for debugging and maintenance. Each event carries a type, either Normal or Warning, and a reason such as Failed or Evicted that describes what happened. Understanding both fields is crucial for troubleshooting effectively. For instance, a Normal event might indicate a successful pod deployment, while a Warning event with the reason Failed signals a problem needing attention.

Categorizing and prioritizing events lets you focus on the most critical issues. This proactive approach helps maintain the health of your Kubernetes environment. Learning to effectively monitor Kubernetes events is a fundamental skill for any Kubernetes administrator. Prioritizing based on severity and potential impact ensures you address the most pressing issues first.

Understanding Kubernetes Event Types

Kubernetes events are like the breadcrumbs of your cluster, offering a trail of what's happening within your deployments. The Event API defines two types, Normal and Warning, and the finer detail lives in each event's reason field. A Normal event records routine activity, such as a pod being scheduled or a container starting, like a confirmation message. A Warning signals something that might need attention, such as a failed image pull or a container that keeps crashing. Reasons like Failed, FailedScheduling, or BackOff point to problems that may require action. Evicted appears when Kubernetes removes a pod from a node, often due to resource pressure on that node. Understanding how type and reason combine is crucial for effective troubleshooting.

Think of it like checking your car's dashboard. A green light (Normal) means everything's fine. A warning light (Warning) means something deserves a look, and the reason tells you how urgent it is: a repeated BackOff or an Evicted pod is closer to a red light than a one-off hiccup. By categorizing and prioritizing these signals, using tools like those available on Plural, you can focus on the most critical issues first. This proactive approach, combined with effective monitoring, helps maintain the health of your Kubernetes environment and ensures you address the most pressing problems before they escalate. For a deeper dive into managing Kubernetes clusters effectively, book a demo with Plural.

Registering and Using Kubernetes Event Handlers

After setting up your dynamic informer, register event handlers to process Kubernetes events. These handlers define the actions to take when changes occur within your cluster.

Implementing Event Handler Functions in Kubernetes

Event handler functions are the core logic that processes events captured by your informer. They're the brains of your event-driven system. In client-go, you register separate callbacks for adds, updates, and deletes, so the callback that fires tells you the event type. Add and delete handlers receive the affected object, and update handlers receive both the old and the new version. With a dynamic informer these objects arrive in unstructured form, and you read the kind, name, and current state from their fields. You'll use these details to trigger appropriate actions. For example, if a pod enters a "failed" state, your handler might trigger an alert or attempt a restart. Informers deliver these events to your handlers, abstracting away the complexities of the Kubernetes API. This lets you focus on application-specific logic, as described in this LabEx tutorial on managing Kubernetes events.
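The handler shape can be sketched without any Kubernetes dependency. The `Object` and `Handlers` types below are hypothetical simplifications that mirror the three-callback layout (add, update, delete) informer handlers use. The alerting logic is an example, not a recommended policy.

```go
package main

import "fmt"

// Object is a hypothetical, simplified cached object.
type Object struct {
	Kind, Namespace, Name, Phase string
}

// Handlers mirrors the three-callback shape informer handlers take:
// the callback that fires tells you what kind of change happened.
type Handlers struct {
	OnAdd    func(obj Object)
	OnUpdate func(oldObj, newObj Object)
	OnDelete func(obj Object)
}

// alertOnFailure returns handlers that record an alert when an object
// transitions into the "Failed" phase.
func alertOnFailure(alerts *[]string) Handlers {
	return Handlers{
		OnAdd: func(obj Object) {},
		OnUpdate: func(oldObj, newObj Object) {
			if oldObj.Phase != "Failed" && newObj.Phase == "Failed" {
				*alerts = append(*alerts, newObj.Namespace+"/"+newObj.Name+" failed")
			}
		},
		OnDelete: func(obj Object) {},
	}
}

func main() {
	var alerts []string
	h := alertOnFailure(&alerts)

	running := Object{Kind: "Pod", Namespace: "default", Name: "web-1", Phase: "Running"}
	failed := running
	failed.Phase = "Failed"

	h.OnAdd(running)
	h.OnUpdate(running, failed)
	h.OnUpdate(failed, failed) // no new alert: the phase did not change
	h.OnDelete(failed)

	fmt.Println(alerts) // [default/web-1 failed]
}
```

Comparing the old and new object in the update callback is what keeps the handler from alerting repeatedly while the pod stays in the same state.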

Best Practices for Kubernetes Event Handling

When designing your event handling system, consider queuing events. Instead of directly processing events within the handler, add them to a queue. A separate worker or controller can then consume these queued events. This decoupling offers several advantages. It prevents your informer from getting bogged down by long-running handler tasks, ensuring responsiveness to new events. It also provides a mechanism for retrying failed operations and managing event processing flow. The client-go library ships a workqueue package (k8s.io/client-go/util/workqueue) for implementing this queuing system.
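The decoupling described above can be modeled with a buffered channel and one worker goroutine. `Queue`, `NewQueue`, `Add`, and `ShutDown` are hypothetical names for this sketch. A production controller would more likely use a purpose-built work queue that also deduplicates keys and rate-limits retries, which this example deliberately leaves out.

```go
package main

import (
	"fmt"
	"sync"
)

// Queue is a hypothetical, minimal work queue with a single worker.
type Queue struct {
	keys chan string
	done sync.WaitGroup
}

// NewQueue starts one worker goroutine that calls process for each key.
func NewQueue(size int, process func(key string)) *Queue {
	q := &Queue{keys: make(chan string, size)}
	q.done.Add(1)
	go func() {
		defer q.done.Done()
		for key := range q.keys {
			process(key)
		}
	}()
	return q
}

// Add is what an event handler would call: cheap, with no business logic.
// It blocks only when the buffer is full.
func (q *Queue) Add(key string) { q.keys <- key }

// ShutDown stops accepting keys and waits for the worker to drain the queue.
func (q *Queue) ShutDown() {
	close(q.keys)
	q.done.Wait()
}

func main() {
	var processed []string
	q := NewQueue(16, func(key string) {
		processed = append(processed, key) // slow reconcile logic would go here
	})

	// The handlers only enqueue a namespace/name key and return immediately.
	q.Add("default/web-1")
	q.Add("default/web-2")
	q.ShutDown()

	fmt.Println(processed) // [default/web-1 default/web-2]
}
```

Enqueuing a key rather than the full object is a common choice: the worker looks up the latest state from the cache when it gets to the key, so it never acts on a snapshot that has since been replaced.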

Handling Errors and Retries in Kubernetes

Robust error handling is crucial for any production Kubernetes system. When working with event handlers, anticipate potential issues like network disruptions or temporary API unavailability. Implement strategies to handle these errors gracefully. This might involve logging the error, retrying the operation after a delay, or sending an alert. A well-designed retry mechanism improves the reliability of your event handling logic, as discussed in this article on Kubernetes informers. Addressing these potential issues proactively keeps your system stable and responsive, even under pressure.
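One way to express "retry after a delay" is a small helper with exponential backoff. This is a minimal sketch under stated assumptions: `retry` and `errUnavailable` are hypothetical names, the doubling factor is arbitrary, and a real controller would usually add jitter and a cap on the delay.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errUnavailable is a hypothetical transient error used by the demo.
var errUnavailable = errors.New("API server temporarily unavailable")

// retry calls op until it succeeds or attempts run out, doubling the delay
// after each failure. It wraps and returns the last error if every attempt fails.
func retry(attempts int, initial time.Duration, op func() error) error {
	var err error
	delay := initial
	for i := 0; i < attempts; i++ {
		if err = op(); err == nil {
			return nil
		}
		if i < attempts-1 {
			time.Sleep(delay)
			delay *= 2
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, err)
}

func main() {
	calls := 0
	err := retry(4, 10*time.Millisecond, func() error {
		calls++
		if calls < 3 {
			return errUnavailable
		}
		return nil
	})
	fmt.Println(calls, err) // 3 <nil>
}
```

When the helper does give up, the wrapped error is the natural place to log or raise an alert, which covers the other two strategies mentioned above.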

Processing Events with Kubernetes Dynamic Informers

Once your dynamic informer is set up and receiving events, efficient processing becomes critical. This involves filtering for relevant events, correctly handling resource versioning, and optimizing how your application interacts with the informer’s cache.

Filtering Kubernetes Events

Processing every single event from your Kubernetes cluster can quickly overwhelm your application. Filtering lets you hone in on specific events, improving performance and reducing unnecessary load. You can filter events based on criteria like the objects involved, event types (add, update, delete), or specific fields within the event data. This ensures your application only processes the events that truly matter. For more detailed filtering strategies, check out this tutorial on managing Kubernetes events.

Handling Resource Versioning in Kubernetes

Kubernetes uses resource versioning to track changes to objects. Each time an object is modified, the API server assigns it a new resource version. Treat the value as an opaque string and compare it for equality rather than doing arithmetic on it. Dynamic informers use this versioning to resume watches and keep your application's view of the cluster current. When processing events, compare resource versions to avoid acting on stale data or redoing work for an object that hasn't changed. Properly handling resource versions is crucial for maintaining data consistency and preventing conflicts, especially with frequent updates.
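A common use of the resource version in an update handler is to skip notifications that carry no real change, such as those redelivered by a periodic resync. The sketch below assumes a hypothetical `Meta` struct holding just the fields it needs, and it compares versions only for equality.

```go
package main

import "fmt"

// Meta holds the two fields this sketch needs from an object's metadata.
type Meta struct {
	Name            string
	ResourceVersion string // treated as opaque: compared for equality only
}

// changed reports whether an update carries a new version of the object.
// A resync redelivers the cached object with an identical version.
func changed(oldObj, newObj Meta) bool {
	return oldObj.ResourceVersion != newObj.ResourceVersion
}

func main() {
	cached := Meta{Name: "web-1", ResourceVersion: "48213"}
	resync := cached
	edited := Meta{Name: "web-1", ResourceVersion: "48377"}

	fmt.Println(changed(cached, resync)) // false
	fmt.Println(changed(cached, edited)) // true
}
```

An update handler that returns early when `changed` is false avoids enqueuing work for objects that are simply being replayed from the cache.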

Optimizing Cache Synchronization

Before processing events, ensure your informer's cache is synchronized with the cluster. Use the cache.WaitForCacheSync function to wait for the initial synchronization. This prevents your application from acting on incomplete information. Also, consider using a queue to decouple event processing from the informer. Push incoming events onto the queue and have a separate worker process them. This separation improves scalability and lets your application handle bursts of events more effectively. You can learn more about optimizing dynamic informers in this blog post.

Strategies for Efficient Cache Management

Efficient cache management is crucial for maximizing the performance and reliability of your dynamic informers. A well-managed cache reduces the load on the API server, speeds up response times, and ensures your application operates with the most current data. Here are some key strategies to consider:

Prioritize Cache Synchronization: Before your application starts processing events, ensure the informer's cache is fully synchronized with the Kubernetes cluster. Using the cache.WaitForCacheSync function, as described in this guide to creating dynamic informers, blocks operations until the initial synchronization is complete. This prevents your application from making decisions based on outdated or incomplete information. It's like waiting for a map application to fully load before starting your journey—you need an accurate view before you begin.

Decouple Event Processing with Queues: Handling events directly within the informer's event handlers can create bottlenecks. Decoupling event processing using a queue offers a more robust solution. Pushing incoming events onto a queue frees up the informer to continue receiving updates without interruption. A separate worker process can then consume events from the queue at its own pace, improving scalability and handling bursts of events more gracefully. This also simplifies your code and makes complex event processing easier to manage.

Leverage Resource Versioning: Kubernetes uses resource versioning to track object changes. Each modification gives the object a new resource version, which you should treat as an opaque string and compare only for equality. Dynamic informers rely on this to maintain consistency and keep your application's view of the cluster current. When processing events, pay close attention to the resource version to avoid acting on stale data. This prevents conflicts and helps your application work from up-to-date information.

Implement Targeted Filtering: Processing every Kubernetes event can be inefficient. Filtering lets you focus on specific events relevant to your application. You can filter based on criteria like resource type (pods, deployments, services), event type (add, update, delete), or specific labels and fields within the event data. This targeted approach reduces unnecessary processing, improves performance, and makes your application more efficient.

Solving Common Challenges in Kubernetes Event Management

Dynamic informers offer elegant solutions to common Kubernetes event management challenges, simplifying complex processes and improving overall efficiency. Let's explore how they address resource monitoring, performance optimization, and security configurations.

Managing Complex Resource Monitoring in Kubernetes

Monitoring diverse resources within a Kubernetes cluster can be tricky. Dynamic informers simplify this by handling notifications (add/update/delete) for any cluster resource, including CustomResourceDefinitions (CRDs). This is especially helpful when working with resources that have unknown structures or when standard resource types aren't a good fit. You can perform operations on resources without needing to know their exact structure beforehand, as explained in this post on dynamic informers. This flexibility simplifies interactions with complex resources and streamlines monitoring efforts.

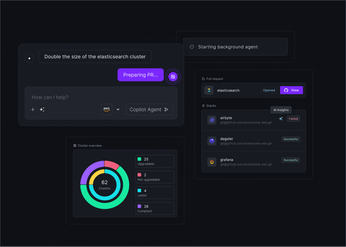

Leveraging Plural for Simplified Kubernetes Management at Scale

Managing resources efficiently is paramount in the dynamic world of Kubernetes. Plural uses dynamic informers to streamline this process, enabling efficient tracking of resource changes, including Custom Resource Definitions (CRDs). As FireHydrant notes, these informers maintain a local cache, which reduces the load on the API server and improves application responsiveness. This is especially valuable in dynamic environments where resources are constantly in flux.

Plural's architecture also facilitates responsive applications through well-structured event handling. By decoupling event processing with queues, Plural improves error handling, retries, and prioritization. This leads to more resilient applications, echoing the FireHydrant observation that "Responsive Kubernetes applications rely on well-structured event handling." Efficient event management is key to maintaining application performance and stability.

The flexibility of dynamic informers, particularly when working with custom resources, is another advantage that Plural offers. This adaptability is essential in rapidly changing environments. David Bond highlights this, stating that dynamic informers are best suited for situations requiring flexibility. Plural capitalizes on this flexibility to provide a unified platform for managing all your Kubernetes resources.

Plural simplifies event management by abstracting away the complexities of the Kubernetes API. This allows developers to focus on application logic rather than wrestling with low-level details. This approach reduces boilerplate code and allows for more focused development, as described in this LabEx tutorial on managing Kubernetes events. By simplifying these complex processes, Plural helps organizations manage Kubernetes at scale, freeing them to concentrate on core functionalities while ensuring efficient resource monitoring and event management. Contact us to learn how Plural can simplify your Kubernetes operations.

Optimizing Performance and API Server Load

Constantly querying the Kubernetes API server for updates puts a strain on resources and can impact performance. Dynamic informers alleviate this by caching resource state locally. This reduces the number of API server calls, minimizing load and latency. LabEx explains how informers abstract away the complexities of the Kubernetes API, letting developers concentrate on application logic instead of managing API interactions. This results in more efficient event processing and a more responsive application. FireHydrant highlights the efficiency of monitoring changes with dynamic informers, making them a powerful tool for building scalable, event-driven applications.

Configuring Authentication and RBAC in Kubernetes

Security is paramount in any Kubernetes environment. When implementing dynamic informers, proper authentication and Role-Based Access Control (RBAC) are essential. Ensure your service account has the correct permissions to access the resources it needs to monitor. Because an informer lists and then watches each resource, it needs at least the list and watch verbs on every resource type it tracks. A blog post by David Bond recommends using cache.WaitForCacheSync to guarantee cache synchronization before operations begin, preventing actions based on outdated information. This Dev.to article further discusses how configuring appropriate RBAC policies ensures that your dynamic informers only access authorized resources, maintaining the security and integrity of your cluster.

Optimizing Kubernetes Dynamic Informers

Optimizing dynamic informers is crucial for efficient Kubernetes management. By fine-tuning their configuration and usage, you can ensure your application performs well and doesn't overload your cluster. Let's explore some key optimization strategies.

Using Resources Efficiently with Kubernetes Informers

Dynamic informers streamline interactions with the Kubernetes API. Instead of constantly polling the API server, informers use a local cache. This reduces the load on the API server and improves your application's responsiveness. They simplify working with the Kubernetes API, allowing developers to focus on application logic. As David Bond points out in his article, dynamic informers offer a powerful way to handle resource changes efficiently. This efficiency is especially important when dealing with many resources or frequent updates.

Implementing Scalability and Resilience with Kubernetes

Dynamic informers can handle a wide range of Kubernetes resources. They manage add, update, and delete notifications for standard resources and CustomResourceDefinitions (CRDs), making them highly scalable. This flexibility, highlighted by David Bond, lets you adapt to the changing needs of your application. Using informers helps you build applications that respond dynamically to cluster events, increasing their resilience. This dynamic response, as explained in this LabEx tutorial, ensures your application adapts to changes and maintains functionality.

Monitoring and Observing Kubernetes Effectively

Dynamic informers provide a consistent way to manage the flow of events within your Kubernetes cluster. This consistency simplifies monitoring and ensures events are handled systematically. A key best practice, described by FireHydrant, is to use a queue for processing events from the informer. This lets another part of your code (often a controller) handle event processing, separating concerns and improving system organization. This separation also makes it easier to monitor and observe your application's behavior. Effectively monitoring these events gives you valuable insights into the health and performance of your Kubernetes resources.

Advanced Uses of Kubernetes Dynamic Informers

Once you understand how dynamic informers work, you can explore more advanced applications to streamline your Kubernetes operations. These techniques can help you manage complex resources, improve controller efficiency, and ensure data consistency.

Working with Custom Resource Definitions (CRDs)

Dynamic informers are especially helpful when working with Custom Resource Definitions (CRDs). Because CRDs define unique object types within Kubernetes, you often won't have strongly-typed clients readily available. Dynamic informers address this, allowing you to monitor changes to custom resources without needing prior knowledge of their structure. This flexibility is invaluable when dealing with evolving APIs or when you need a quick way to track new resource types. This approach, as David Bond notes in his post, lets you work with resources even when standard client libraries are unavailable or too complex.

Integrating with Kubernetes Controllers

Integrating dynamic informers with Kubernetes controllers is a best practice for robust event handling. Instead of processing events directly within the informer's handlers, queue these events for a separate controller. This separation keeps your informer logic clean and allows the controller to manage complex operations, like reconciliation and applying cluster changes. This method, highlighted by FireHydrant, uses Kubernetes' queuing mechanisms for smoother event processing.

Building Custom Controllers with Informers

Integrating dynamic informers with your custom Kubernetes controllers provides a robust way to manage the complexities of a dynamic cluster. Controllers are essential for maintaining the desired state of your resources. Combining them with informers creates a highly efficient and responsive system.

The informer acts as a vigilant observer, constantly watching for changes within your cluster. Whether a pod fails, a deployment scales, or a new CRD is created, the informer takes notice. Instead of immediately reacting, it passes this information to your custom controller through a queue. This queuing mechanism, as discussed by FireHydrant, is critical. It decouples event detection from processing, allowing your controller to handle complex logic without blocking the informer. This separation keeps your informer lightweight and responsive, ready for the next event. Your controller can then methodically work through the queued events, applying necessary updates and ensuring your cluster remains in the desired state.

This approach is especially valuable when working with Custom Resource Definitions (CRDs). Dynamic informers, as David Bond explains, offer the flexibility to monitor CRDs without predefined client structures. Your custom controller can then implement logic specific to your CRD, ensuring consistent behavior and automated management. This combination of dynamic informers and custom controllers provides a robust and scalable solution for managing complex Kubernetes environments. At Plural, we use this pattern extensively to help users manage their Kubernetes fleets. Book a demo to see how we can simplify your Kubernetes operations.

Handling Concurrent Updates in Kubernetes

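In active Kubernetes environments, multiple updates often target the same resource concurrently. To prevent inconsistencies, you need strategies for handling these concurrent updates. Kubernetes uses optimistic concurrency: every object carries a resourceVersion, and the API server rejects an update that is based on a stale version with a conflict error, at which point you re-read the object and retry. Treat resourceVersion as an opaque value and compare it only for equality, for example to skip update notifications where nothing actually changed. Within your controller, make sure two workers never process the same object at once, either with your own locking or by relying on a work queue that guarantees it, to prevent race conditions and maintain data integrity. Resource version checks and locking, as explained by Jeevan Reddy Ragula in his article, are crucial for reliable event handling.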
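To make the resource version check concrete, here is a minimal sketch of a conflict-and-retry loop. Everything in it is an assumption for illustration: store is an in-memory stand-in for the API server, object is a hypothetical resource, and updateWithRetry is a hypothetical helper. The only behavior borrowed from Kubernetes is the rule that a write based on a stale resourceVersion is rejected and must be retried from a fresh read.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
)

var errConflict = errors.New("conflict: object was modified")

// object is a hypothetical resource with only the fields this sketch needs.
type object struct {
	ResourceVersion string
	Replicas        int
}

// store is an in-memory stand-in for the API server's concurrency check.
type store struct {
	current object
	counter int
}

func (s *store) Get() object { return s.current }

// Update accepts the write only if the caller's copy is based on the current
// version. Versions are compared for equality only, never ordered.
func (s *store) Update(o object) error {
	if o.ResourceVersion != s.current.ResourceVersion {
		return errConflict
	}
	s.counter++
	o.ResourceVersion = strconv.Itoa(s.counter)
	s.current = o
	return nil
}

// updateWithRetry re-reads the object and reapplies mutate whenever the
// write is rejected with a conflict, up to the given number of attempts.
func updateWithRetry(s *store, attempts int, mutate func(*object)) error {
	for i := 0; i < attempts; i++ {
		o := s.Get()
		mutate(&o)
		err := s.Update(o)
		if err == nil {
			return nil
		}
		if !errors.Is(err, errConflict) {
			return err
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, errConflict)
}

func main() {
	s := &store{current: object{ResourceVersion: "0", Replicas: 1}}

	stale := s.Get() // a copy read before another writer changes the object
	if err := updateWithRetry(s, 3, func(o *object) { o.Replicas = 3 }); err != nil {
		fmt.Println("update failed:", err)
		return
	}

	stale.Replicas = 5
	fmt.Println("stale write:", s.Update(stale)) // rejected with a conflict
	fmt.Println("current replicas:", s.Get().Replicas)
}
```

The mutate function is applied to a fresh copy on every attempt, which is the important design point: retrying the same stale object would fail forever. In a real controller, client-go's util/retry package offers a RetryOnConflict helper built around the same idea.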

Testing and Debugging Kubernetes Dynamic Informers

After setting up your dynamic informers, thorough testing and debugging are crucial for ensuring they behave as expected. A robust testing strategy helps catch issues early and prevents unexpected behavior in your Kubernetes cluster.

Testing Kubernetes Informers Thoroughly

Testing dynamic informers involves verifying that they correctly handle each event type (add, update, and delete) for the resources you're monitoring. Simulate these events in a test environment to confirm your informer logic responds appropriately. This matters because dynamic informers are often the trigger for event-driven workflows and self-healing automation. Because they work with any Kubernetes resource, including CustomResourceDefinitions (CRDs), your tests should cover custom resources as well as built-in types. Ensure your tests cover various scenarios, including edge cases and potential error conditions. A tool like Plural can streamline the management and deployment of your Kubernetes resources, which simplifies setting up test environments.
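One way to make handler logic testable is to keep it in plain functions that record what they would enqueue, then drive those functions with simulated events. The sketch below assumes a hypothetical item type and recorder type; the handler names only loosely mirror the add, update, and delete callbacks an informer invokes, and no real informer is involved.

```go
package main

import "fmt"

// item is a hypothetical, minimal stand-in for a watched object.
type item struct {
	Key             string
	ResourceVersion string
}

// recorder holds the handler logic under test. It records keys instead of
// doing work, so a test can assert on exactly what was enqueued.
type recorder struct {
	enqueued []string
}

func (r *recorder) onAdd(obj item) {
	r.enqueued = append(r.enqueued, "add:"+obj.Key)
}

// onUpdate ignores notifications where nothing changed (same
// resourceVersion), which is what a periodic resync looks like.
func (r *recorder) onUpdate(oldObj, newObj item) {
	if oldObj.ResourceVersion == newObj.ResourceVersion {
		return
	}
	r.enqueued = append(r.enqueued, "update:"+newObj.Key)
}

func (r *recorder) onDelete(obj item) {
	r.enqueued = append(r.enqueued, "delete:"+obj.Key)
}

func main() {
	r := &recorder{}
	v1 := item{Key: "default/web", ResourceVersion: "1"}
	v2 := item{Key: "default/web", ResourceVersion: "2"}

	r.onAdd(v1)
	r.onUpdate(v1, v1) // resync: no real change, should be ignored
	r.onUpdate(v1, v2) // real change
	r.onDelete(v2)

	fmt.Println(r.enqueued)
}
```

Tests at this level run in milliseconds and cover the edge cases (such as no-op updates) that are awkward to trigger against a live cluster. For tests that exercise a real dynamic informer, client-go also ships a fake dynamic client that can be loaded with objects, which is worth exploring once the handler logic itself is covered.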

Debugging Common Issues with Kubernetes Informers

Debugging dynamic informers often involves examining the interaction between the informer, the Kubernetes API server, and your application logic. One common practice is to use a queue system. Push the events received from the informer onto a queue, and then have a separate piece of code (often called a controller) process them. This decoupling simplifies debugging and improves the overall resilience of your system, as discussed in this blog post on dynamic informers. Kubernetes provides the necessary packages to implement this queuing mechanism.

Pay close attention to authentication and authorization. Ensure your informer has the correct permissions to access the Kubernetes API server. Review your Role-Based Access Control (RBAC) configuration to verify that the informer's service account is allowed to list and watch every resource it monitors.

Also, monitor resource usage. Informers maintain a cache of cluster state, which can consume memory and CPU resources. Monitor these resources to prevent performance issues and ensure your informer operates efficiently, as highlighted in this article on creating dynamic informers.

Understanding how informers interact with the Kubernetes API is crucial. They use the API to detect changes, maintain a local cache (the indexer), and trigger handler functions based on these changes. By understanding these core components, you can effectively diagnose and resolve issues that may arise. For more complex Kubernetes deployments, consider exploring Plural's pricing plans to see how it can simplify your operations.

Common Pitfalls and Troubleshooting Tips

While dynamic informers simplify Kubernetes event management, understanding potential pitfalls and troubleshooting techniques is crucial for smooth operation. Let's explore some common challenges and how to address them.

Rigorous testing is paramount. Simulate various event types—add, update, and delete—in a test environment to verify your informer logic responds correctly. Include edge cases and error conditions, such as simulating network disruptions or temporary API unavailability, to expose vulnerabilities in your error handling.

Robust error handling is essential. Anticipate issues like network disruptions or temporary API unavailability. Implement strategies like logging the error, retrying the operation after a delay, or sending an alert. A well-designed retry mechanism, combined with comprehensive logging, significantly improves reliability.
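A retry mechanism of the kind described above can be as small as the following sketch. The helper name retryWithBackoff, the errUnavailable error, and the noWait helper are all hypothetical; the sleep function is passed in as a parameter so that tests can skip real waiting. It logs each failure, waits, doubles the delay, and gives up after a fixed number of attempts.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errUnavailable simulates a temporary API outage in this sketch.
var errUnavailable = errors.New("api server unavailable")

// noWait is a sleep function that returns immediately, for tests and dry runs.
func noWait(time.Duration) {}

// retryWithBackoff calls op until it succeeds or attempts run out, logging
// each failure and doubling the delay between tries.
func retryWithBackoff(attempts int, base time.Duration, sleep func(time.Duration), op func() error) error {
	var err error
	delay := base
	for i := 1; i <= attempts; i++ {
		if err = op(); err == nil {
			return nil
		}
		fmt.Printf("attempt %d failed: %v\n", i, err)
		if i < attempts {
			sleep(delay)
			delay *= 2
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, err)
}

func main() {
	calls := 0
	err := retryWithBackoff(4, 10*time.Millisecond, time.Sleep, func() error {
		calls++
		if calls < 3 {
			return errUnavailable // fail the first two attempts
		}
		return nil
	})
	fmt.Println("calls:", calls, "err:", err)
}
```

In a queue-based controller the same effect is usually achieved by putting the failed key back on the queue with a rate-limited delay rather than sleeping inside the worker, so that one failing object does not hold up the rest. The final error returned here is the natural place to raise an alert.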

Monitor resource usage. Informers maintain a cache of cluster state, which consumes memory and CPU. Keep an eye on these resources to prevent performance bottlenecks. If resource usage becomes excessive, optimize your informer's configuration or explore alternative caching strategies.

Ensure proper cache synchronization. Call cache.WaitForCacheSync after starting the informer and before starting any workers. It blocks until the initial list has populated the cache, and it returns false if the stop channel closes first. This prevents your application from acting on an empty or partial view of the cluster, maintaining data consistency. Decoupling event processing with a queue system further enhances resilience and simplifies debugging. Process events asynchronously to isolate your informer from long-running tasks and improve system responsiveness.
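The gate that cache synchronization provides can be pictured with a small, dependency-free sketch. This is not client-go's implementation: waitForSync is a hypothetical function that imitates the general shape of a sync wait by polling a "has synced" check until it reports true or a stop channel closes, and the atomic flag stands in for an informer finishing its initial list.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// waitForSync polls synced until it reports true or stop is closed.
// It returns false if stop closed first; workers must not start in that case.
func waitForSync(stop <-chan struct{}, interval time.Duration, synced func() bool) bool {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		if synced() {
			return true
		}
		select {
		case <-stop:
			return false
		case <-ticker.C:
			// fall through and check again
		}
	}
}

func main() {
	var ready atomic.Bool
	stop := make(chan struct{})

	// Simulate the initial list completing shortly after startup.
	go func() {
		time.Sleep(20 * time.Millisecond)
		ready.Store(true)
	}()

	if !waitForSync(stop, 5*time.Millisecond, ready.Load) {
		fmt.Println("stopped before the cache synced; not starting workers")
		return
	}
	fmt.Println("cache synced; starting workers")
}
```

Checking the boolean result matters as much as the wait itself: if shutdown wins the race, the cache may be incomplete, and a worker that reads from it could conclude that objects are missing and try to recreate them.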

Frequently Asked Questions

Why should I use dynamic informers instead of constantly querying the Kubernetes API?

Directly querying the API server for updates can put a significant load on your cluster, especially when dealing with many resources or frequent changes. Dynamic informers maintain a local cache of resource state, reducing the number of API calls and improving your application's performance and responsiveness. They provide an efficient way to stay updated on resource changes without overwhelming the API server.

How do dynamic informers handle Custom Resource Definitions (CRDs)?

One of the key advantages of dynamic informers is their ability to work with any Kubernetes resource, including CRDs. You don't need generated Go types for a CRD in advance. You identify the resource by its group, version, and resource name, and objects arrive in a generic, unstructured form that you read field by field. The informer automatically handles notifications for additions, updates, and deletions, simplifying the process of working with custom resources.

What's the best way to process events received by a dynamic informer?

While you can process events directly within the informer's handler functions, a best practice is to use a queue. Push incoming events onto a queue and have a separate worker or controller process them. This decoupling simplifies your code, improves resilience, and makes it easier to manage complex event processing logic.

How do I ensure my dynamic informer doesn't consume too many resources?

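Dynamic informers maintain a local cache of resource state, which uses memory and CPU. Monitor these resources to ensure your informer operates efficiently. To shrink the cache itself, scope the informer to the namespaces or label and field selectors you actually need, so that fewer objects are listed and watched. Filtering events inside your handlers (by resource type, event type, or field values) does not reduce cache size, but it does cut the amount of work your controller performs.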

What are some common debugging techniques for dynamic informers?

Debugging often involves examining the interaction between the informer, the API server, and your application logic. Verify that your informer has the correct RBAC permissions to access the resources it needs. Using a queue system for event processing can simplify debugging by isolating the informer's logic from the event handling logic. Also, check the resource versions of objects to ensure you're working with the most up-to-date data.

Comparing Informers with the Kubernetes Watch API

When building applications that interact with Kubernetes, you need a way to keep track of changes happening within your cluster. Two common approaches are using the Kubernetes Watch API directly and leveraging informers. Both mechanisms deliver updates about resource changes, but they differ significantly in how they manage those updates and the complexity they add to your code.

The Watch API provides a raw stream of events directly from the Kubernetes API server. This offers fine-grained control, but requires you to handle the complexities of managing the event stream, including connection handling, resource versioning, and data storage. As Mario Macias points out in his blog post, using the Watch API necessitates significant manual coding to manage in-memory data. This means more code to handle low-level details, potentially distracting from your core application logic.
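To give a sense of the bookkeeping involved, the sketch below folds a stream of watch-style events into an in-memory map. The event struct and apply function are hypothetical and heavily simplified; the type strings ADDED, MODIFIED, and DELETED match the event types a Kubernetes watch emits, while the Spec string stands in for a full object.

```go
package main

import "fmt"

// event mirrors the shape of a watch notification: a type plus the object.
type event struct {
	Type string // "ADDED", "MODIFIED", or "DELETED"
	Key  string // "namespace/name"
	Spec string // stand-in for the object's contents
}

// apply folds one event into the local cache and reports whether the event
// type was understood.
func apply(cache map[string]string, ev event) bool {
	switch ev.Type {
	case "ADDED", "MODIFIED":
		cache[ev.Key] = ev.Spec
	case "DELETED":
		delete(cache, ev.Key)
	default:
		return false
	}
	return true
}

func main() {
	cache := map[string]string{}
	stream := []event{
		{"ADDED", "default/web", "replicas=1"},
		{"ADDED", "default/db", "replicas=1"},
		{"MODIFIED", "default/web", "replicas=3"},
		{"DELETED", "default/db", ""},
	}
	for _, ev := range stream {
		if !apply(cache, ev) {
			fmt.Println("unhandled event type:", ev.Type)
		}
	}
	fmt.Println(cache)
}
```

This covers only the easy part. A production watch client also has to reconnect when the stream drops, resume from the last resourceVersion it saw, and fall back to a full re-list when that version has expired on the server. Informers package all of that behind the cache and handler interface.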

Informers provide a higher-level abstraction. They maintain a local cache of Kubernetes resources and provide event handlers triggered when changes occur, simplifying event management. Macias notes that Kubernetes informers offer a built-in way to watch for resource changes and maintain an up-to-date in-memory cache, eliminating manual updates and reducing API calls. This efficiency is a major advantage, especially with many resources or frequent changes. LabEx adds that informers simplify event management by abstracting away Kubernetes API complexities, allowing developers to focus on application logic.

Alternatives to Using Informers

While informers are powerful for managing Kubernetes events, they aren't the only solution. Depending on your needs and application complexity, other approaches might be more suitable. Choosing the right tool depends on factors like cluster scale, the types of resources you're monitoring, and your team's familiarity with different technologies.

One alternative is to rely on comprehensive Kubernetes monitoring tools. These often provide dashboards, alerting, and deeper insights into cluster health and performance. Atlantbh Sarajevo highlights that Kubernetes monitoring is crucial for smooth application performance and resource management. While informers can be part of a monitoring strategy, they might not offer all the features of dedicated solutions. For complex setups, Atlantbh Sarajevo suggests dedicated tools, which offer pre-built dashboards, alerting rules, and integrations, reducing custom code.

Consider the potential for vendor lock-in. While Kubernetes is open source, relying heavily on specific tools within its ecosystem can hinder future migrations. Ben Houston discusses how Kubernetes can create vendor lock-in, making it difficult to leverage external resources and complicating migrations. This is relevant when evaluating tools that deeply integrate with your cluster. Carefully consider the long-term implications of your tooling choices.
