Simplifying Kubernetes Multi-Region Management: Challenges & Solutions

Simplify Kubernetes multi-region deployments with Plural's agent-based architecture, ensuring seamless management, security, and compliance across all clusters.

Kubernetes is great for container orchestration, but multi-region deployments introduce complexity. Spanning regions creates networking and security challenges, plus operational overhead that makes scaling difficult. This post explores the challenges of a multi-region Kubernetes architecture, then shows how Plural simplifies multi-region Kubernetes management.

Despite the complexity, businesses need multi-region Kubernetes for three key reasons:

Minimizing User Latency in Multi-Region Kubernetes

The farther a service is from the user, the longer it takes for the user to get a response. Therefore, you generally want services to be as close to the user as possible.

Ensuring High Availability and Fault Tolerance

Users will face issues during an outage if your services aren't set up for high availability (HA). For example, if you've deployed services in the EU and APAC regions and the EU region service goes down, the traffic can be temporarily redirected to the APAC region service. Although responses might be a bit slower, users will still be able to access the services.

Meeting Compliance Requirements

Many countries have laws against storing user data outside their borders. In such cases, having a multi-region Kubernetes infrastructure becomes necessary.

But Kubernetes doesn’t support multi-region deployments out of the box.

Key Takeaways

- Multi-region Kubernetes is crucial, but challenging: Reducing latency, ensuring high availability, and meeting compliance often necessitate a multi-region Kubernetes strategy. However, standard Kubernetes lacks native support, leading to complexities in networking, tooling, and security.

- Common solutions introduce trade-offs: While ingress controllers, service meshes, and alternative distributions like k3s offer solutions, they come with trade-offs. Each requires careful consideration of the added operational overhead and potential performance impact.

- Plural streamlines multi-region management: Plural simplifies these challenges with its agent-based architecture and unified control plane. This approach streamlines deployments, monitoring, and security policy enforcement across all regions, reducing complexity and improving operational efficiency.

Core Technical Constraints of Multi-Region Kubernetes

Kubernetes Architecture: Multi-Region Limitations

Standard off-the-shelf Kubernetes is not multi-region compatible, and it won’t be anytime soon: the CNCF and the Kubernetes core committees have repeatedly indicated that native multi-region support is not a priority for Kubernetes, focusing instead on multi-zone and multi-cluster solutions.

There are a few reasons for this. The main one is that Kubernetes relies on etcd as its primary data store, and etcd does not support multi-region operations. etcd uses the Raft consensus algorithm, which requires a majority of nodes to agree on state changes. In multi-region setups, increased latency between nodes slows consensus, leading to timeouts, frequent leader elections, and potential instability.

Latency also makes a distributed control plane more sensitive to network partitions. Raft's majority rule prevents two leaders from committing conflicting writes, but a partition that leaves no group of members with a quorum blocks all writes until connectivity returns, and repeated leader elections in the meantime can cause API timeouts and downtime.

The upshot of the lack of native multi-region support is that most organizations run a separate cluster in each region.

Setting up separate clusters for each region reduces latency, boosts availability, improves fault tolerance, and meets compliance needs, but it also adds complexity and cost. Managing several clusters means keeping configurations, security policies, and access controls consistent to avoid issues, while fragmented monitoring makes it hard to track system health in real time.

etcd Challenges in Multi-Region Deployments

One of the primary challenges of using Kubernetes in a multi-region setup is its reliance on etcd as the primary data store. etcd doesn't inherently support multi-region operations, which poses significant limitations. The Cloud Native Computing Foundation (CNCF) and Kubernetes core committees have indicated that native multi-region support isn’t a priority, focusing instead on multi-zone and multi-cluster solutions. This means organizations looking to implement multi-region Kubernetes must navigate these inherent limitations and explore alternative approaches, such as Plural, which offers robust multi-region management capabilities.

Impact of Latency on Raft Consensus

The Raft consensus algorithm, employed by etcd, requires a majority of nodes to agree on state changes. In multi-region setups, the increased latency between nodes can significantly slow down this consensus process. This leads to timeouts, frequent leader elections, and potential instability within the cluster. Network partitions add to the problem: Raft's quorum rule keeps an isolated minority from committing conflicting writes, but those members cannot accept writes at all until they rejoin, and if no group retains a majority the whole control plane stops accepting changes. These challenges make achieving consistency and fault tolerance across geographically dispersed locations a significant hurdle for Kubernetes deployments.
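To make the latency effect concrete, here is a minimal sketch of the majority arithmetic. It is a simplified model, not etcd's implementation: it assumes the leader only needs acknowledgements from enough followers to form a majority, and the round-trip times are made-up illustrative numbers.

```python
def quorum_size(members: int) -> int:
    """Smallest number of members that forms a Raft majority."""
    return members // 2 + 1


def estimated_commit_rtt_ms(follower_rtts_ms: list[float]) -> float:
    """Rough lower bound on the time to commit one write.

    The leader counts itself, so it needs acknowledgements from
    quorum - 1 followers. The write commits once the slowest of
    those fastest followers has answered.
    """
    members = len(follower_rtts_ms) + 1  # followers plus the leader
    acks_needed = quorum_size(members) - 1
    if acks_needed == 0:
        return 0.0
    return sorted(follower_rtts_ms)[acks_needed - 1]


# Three members in one region: followers are about 1 ms away (assumed).
single_region = estimated_commit_rtt_ms([1.0, 1.2])
# Three members in three regions: followers are 80 ms and 150 ms away (assumed).
multi_region = estimated_commit_rtt_ms([80.0, 150.0])

print(quorum_size(3), quorum_size(5))
print(single_region, multi_region)
```

In this model every write in the three-region layout waits at least one cross-region round trip, which is why heartbeat and election timeouts tuned for a single region tend to misfire once members are spread across regions.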

Networking Challenges in Multi-Region Kubernetes

Loss of Native Kubernetes Networking Features in a Multi-Region Setup

Kubernetes provides seamless service discovery within a single cluster using its Service abstraction and DNS system, but these features do not extend across multiple clusters. In multi-region deployments, clusters do not inherently recognize each other’s Pods, Services, or Network Policies, making cross-cluster service discovery a challenge.

IP addressing and DNS create further challenges. Clusters that need to route traffic to each other must use non-overlapping IP ranges. DNS resolution is also limited: CoreDNS in one cluster can't find services in another without extra setup, so cross-cluster service discovery requires a dedicated solution.

These factors make cross-cluster communication significantly more complex than standard Kubernetes networking, requiring additional tools like service meshes, global load balancers, or custom DNS configurations to bridge the gap.
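The non-overlapping address requirement is easy to check before clusters are created. The following is a small sketch using Python's standard `ipaddress` module; the cluster names and CIDR ranges are hypothetical examples.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cluster_cidrs: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Return (cluster_a, cidr_a, cluster_b, cidr_b) for each overlapping pair."""
    entries = [
        (cluster, ipaddress.ip_network(cidr))
        for cluster, cidrs in cluster_cidrs.items()
        for cidr in cidrs
    ]
    overlaps = []
    for (name_a, net_a), (name_b, net_b) in combinations(entries, 2):
        # Only compare ranges that belong to different clusters.
        if name_a != name_b and net_a.overlaps(net_b):
            overlaps.append((name_a, str(net_a), name_b, str(net_b)))
    return overlaps


# Hypothetical address plan for three regional clusters.
clusters = {
    "us-east": ["10.0.0.0/16", "10.96.0.0/16"],
    "eu-west": ["10.1.0.0/16", "10.96.0.0/16"],
    "ap-south": ["10.0.128.0/17", "10.98.0.0/16"],
}

overlaps = find_overlaps(clusters)
for name_a, cidr_a, name_b, cidr_b in overlaps:
    print(f"{name_a} {cidr_a} overlaps {name_b} {cidr_b}")
```

A check like this could run in CI against the address plan, so that a conflict is caught before two clusters need to be connected.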

Network Plugin Considerations for Multi-Region Kubernetes

Kubernetes itself isn’t region-aware for networking. Your network plugin and cloud provider’s load balancer will influence how traffic is handled across regions. Choosing the right network plugin is crucial for successful multi-region Kubernetes deployments. Different plugins offer varying levels of support for multi-cluster communication, service discovery, and network policy enforcement. For example, Cilium offers robust support for multi-cluster networking through its ClusterMesh feature.

Here’s what to consider when evaluating network plugins for multi-region Kubernetes:

- Global Service Discovery: The plugin should enable services in one region to discover and communicate with services in other regions, abstracting away the underlying network complexity. Consider solutions like Consul, which provides a distributed key-value store for service discovery, or CoreDNS with ExternalDNS for automated DNS record management across regions.

- Network Policy Enforcement: Security policies should work seamlessly across regions. The plugin should allow you to define and enforce network policies that govern traffic flow between pods and services in different regions, ensuring consistent security across your entire deployment. Cilium, for instance, allows you to define network policies based on Kubernetes labels, enabling fine-grained control over inter-region communication.

- Performance and Latency: Multi-region communication introduces latency. The plugin should minimize this by using efficient routing and traffic management techniques. Consider plugins optimized for global environments, such as Cilium with its eBPF-based datapath, or Weave Net with its fast dataplane.

- Integration with Cloud Providers: The plugin should integrate well with your chosen cloud provider's networking services, such as load balancers and VPCs. This simplifies management and ensures optimal performance. For example, if you're using AWS, ensure the plugin works seamlessly with services like Elastic Load Balancing and Transit Gateway.

- Operational Complexity: Managing a multi-region network can be complex. The plugin should offer simplified management and monitoring tools to ease operational overhead. Plural can help streamline the management of multi-region Kubernetes deployments, including networking configuration and monitoring, by providing a centralized platform for managing multiple clusters.

By carefully considering these factors, you can choose a network plugin that meets the specific needs of your multi-region Kubernetes deployment, ensuring reliable communication, robust security, and efficient operation.

Common Multi-Region Solutions and Their Trade-offs

Ingress-Based Approaches for Multi-Region Kubernetes

Ingress controllers manage multi-cluster traffic by serving as entry points for HTTP and HTTPS requests from outside the cluster. However, Kubernetes Ingress often requires custom annotations and Custom Resource Definitions (CRDs) for advanced configurations. As the number of clusters and regions grows, organizations must deploy separate ingress controllers in each cluster and connect them using external load balancers or DNS services, which are beyond standard Kubernetes components.

Load Balancing Solutions for Control Plane and Services

Multi-region Kubernetes deployments depend on load balancing to distribute traffic and ensure high availability. This involves implementing load balancing at two levels: the control plane and the individual services.

The control plane—the API server, scheduler, and controller manager—needs a solution that handles failover if a region goes down. An automatic failover mechanism is essential. Tools like Keepalived and HAProxy manage communication between control planes in different regions. If one control plane fails, another seamlessly takes over.

A best practice is to use two load balancers: one for the control planes, managing traffic between them and the worker nodes, and another for exposed services and ingress. This separation ensures the control plane remains accessible, even during service disruptions, allowing for diagnosis and remediation. This setup, combined with tools like DNS round-robin to distribute traffic across control plane instances, ensures API server availability.

For user-facing services, global load balancers distribute traffic across regions, directing users to the closest available service instance. This minimizes latency and improves user experience. While Kubernetes offers resilience across multiple zones within a region, multi-region setups require more. Consider how traffic flows between entire regions, going beyond simply running in multiple zones.
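The routing decision a global load balancer makes can be sketched in a few lines. This is an illustrative model only, not any provider's algorithm; the `Region` type, the latency figures, and the health flags are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    latency_ms: float  # measured from the user's location (assumed input)
    healthy: bool      # result of the latest health check (assumed input)


def pick_region(regions: list[Region]) -> Region:
    """Choose the lowest-latency region that passes health checks."""
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.latency_ms)


# A user in Europe: the EU region is closest, APAC is the fallback.
normal = pick_region([Region("eu", 20.0, True), Region("apac", 180.0, True)])
# During an EU outage, traffic moves to APAC at the cost of higher latency.
outage = pick_region([Region("eu", 20.0, False), Region("apac", 180.0, True)])

print(normal.name, outage.name)
```

This mirrors the EU and APAC failover example from earlier: responses get slower during the outage, but users can still reach the service.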

Security Implications of Multi-Region Ingress

Multi-region ingress-based setups introduce additional security challenges. Traffic between regions often traverses public networks, requiring extra encryption and authentication. Additionally, ingress controllers may vary in their security capabilities, leading to inconsistent security policies across regions.

Performance Impact of Multi-Region Ingress

Ingress controllers add an extra layer to traffic flow, potentially affecting application performance, particularly in high-traffic scenarios. Each additional step through an ingress controller introduces latency, which can be more pronounced in cross-region traffic.

Service Mesh Solutions for Multi-Region Kubernetes

Service mesh technologies offer strong solutions for multi-cluster Kubernetes environments, providing secure and transparent service communication across cluster boundaries. Istio and Linkerd are two major service meshes in use today.

Istio and Linkerd: Capabilities for Multi-Cluster Kubernetes

Linkerd enables secure cross-cluster communication with service mirroring for automatic discovery and multiple connection modes, including gateway-based, direct pod-to-pod, and federated. It ensures security with a unified trust domain and mutual TLS (mTLS) encryption.

Istio offers greater traffic management flexibility, supporting both single-mesh and multi-mesh configurations for centralized or distributed control, making it more adaptable for complex deployments.

Service Mesh Complexity and Operational Overhead

Service meshes introduce proxies, control planes, and service mirrors, adding complexity and increasing failure points. Managing failure zones, network configurations, and deployment models like Linkerd’s layered and flat networks requires careful planning. They also demand continuous monitoring, updates, and troubleshooting, adding operational overhead and requiring specialized expertise.

Alternative Kubernetes Distributions for Multi-Region (e.g., k3s)

For multi-region deployments, especially where resources are tight, lightweight Kubernetes distributions like k3s offer a compelling alternative. k3s, a certified Kubernetes distribution created by Rancher and now a CNCF Sandbox project, is purpose-built for production workloads in places where a full-blown Kubernetes cluster might be too much: edge locations, IoT devices, or any scenario where minimizing operational overhead is key. Its smaller footprint makes it ideal when compute and memory are limited.

This lightweight design doesn't sacrifice core Kubernetes functionality. You still get the tools you need for container orchestration, including deployments, services, and networking. k3s is a popular choice for home labs and testing, but it's also production-ready for real-world multi-region deployments, and its active community provides ample support and resources.

While k3s lowers the cost of running a cluster in each region, it's still Kubernetes at its core. You'll still face challenges like cross-cluster communication, service discovery, and consistent security policies. However, k3s can be a valuable tool, especially when resource efficiency and operational simplicity are top priorities. For a managed approach, explore Kubernetes-as-a-Service options to further streamline operations.

Application Gateway Patterns for Multi-Region Deployments

Application gateway patterns put a layer 7 load balancer, such as Azure Application Gateway, in front of each regional cluster and pair it with a global front-door service that routes traffic across regions based on location, workload, and cluster health. Front-door services such as Azure Front Door further enhance performance with CDN caching, delivering content closer to users, and advanced rule engines for sophisticated routing strategies. These services maintain high availability by continuously monitoring backend health and redirecting traffic to healthy regions during outages.

Tooling Limitations in Multi-Region Kubernetes

Multi-region Kubernetes deployments introduce unique tooling limitations, largely due to network flow directionality and connectivity challenges.

Network Flow Direction Problems in Multi-Region Kubernetes

Deployment Tools: ArgoCD in a Multi-Region Environment

In multi-region setups, tools like ArgoCD often encounter connectivity issues. Direct network connectivity from one region (e.g., US East) to another (e.g., US West) is often unavailable because the clusters sit in separate private networks. As a result, an ArgoCD instance trying to connect to a cluster's API server in another region might fail because it cannot resolve or reach the API server's address. To address this limitation, an organization might need separate ArgoCD instances for each region (e.g., ArgoCD for East, ArgoCD for West) to effectively manage clusters within those regions.

Observability Challenges: Prometheus in Multi-Region Kubernetes

Prometheus uses a pull-based scraping model: it sends HTTP requests to the metrics endpoints that monitored systems expose. This creates issues in multi-region setups, because the Prometheus server must be able to reach every target over the network. Organizations therefore often need a separate Prometheus server or agent in each region to monitor systems there. This makes data aggregation challenging, as it requires querying all regional Prometheus servers or exporting data to a central place. In short, the network flow direction in Prometheus' design makes monitoring across multiple regions more complicated.

Common Architectural Patterns for Multi-Region Kubernetes

To tackle networking challenges, organizations deploy critical tools separately in each region. This requires replicating ArgoCD for local Kubernetes access and regional Prometheus servers for metrics collection, improving availability but increasing complexity and costs. For observability, data is either queried from regional Prometheus servers or exported to a central store, demanding careful planning to maintain reliability, scalability, and efficiency.
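The "query every regional server" option can be sketched as a simple fan-out. The example below assumes each regional Prometheus is reachable from wherever the script runs and uses the instant-query endpoint of the Prometheus HTTP API (`/api/v1/query`). The server URLs are hypothetical, and the demo swaps in a fake fetcher so it runs without a network.

```python
import json
from typing import Callable
from urllib.parse import urlencode
from urllib.request import urlopen


def http_fetch(url: str) -> dict:
    """Default fetcher: GET the URL and decode the JSON body."""
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def query_all_regions(
    servers: dict[str, str],
    expr: str,
    fetch: Callable[[str], dict] = http_fetch,
) -> list[dict]:
    """Run one instant query per region and tag each sample with its region."""
    merged = []
    for region, base_url in servers.items():
        url = f"{base_url}/api/v1/query?{urlencode({'query': expr})}"
        body = fetch(url)
        if body.get("status") != "success":
            continue  # skip regions that returned an error
        for sample in body["data"]["result"]:
            _timestamp, value = sample["value"]
            labels = {**sample["metric"], "region": region}
            merged.append({"metric": labels, "value": float(value)})
    return merged


def fake_fetch(url: str) -> dict:
    """Stand-in for a real server so the example runs offline."""
    result = [{"metric": {"job": "api"}, "value": [1700000000, "1"]}]
    return {"status": "success", "data": {"resultType": "vector", "result": result}}


# Hypothetical regional endpoints.
servers = {
    "us-east": "http://prometheus.us-east.example.internal:9090",
    "us-west": "http://prometheus.us-west.example.internal:9090",
}
samples = query_all_regions(servers, "up", fetch=fake_fetch)
print(samples)
```

The fan-out still depends on the central script being able to reach every regional server, which is exactly the network flow constraint described above; exporting metrics to a central store reverses that direction.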

Phased Rollouts and Planning for Multi-Region Success

Successfully deploying a multi-region Kubernetes architecture requires careful planning and a phased rollout. A measured, incremental cluster deployment, with thorough testing at each step, helps identify and address potential issues early. This minimizes disruption and ensures a smooth transition.

Meticulous planning is also paramount. Key considerations include network configuration (CIDRs, NAT, DNS), load balancer selection, and your etcd deployment strategy. Think through how these components will interact across regions and plan for potential network disruptions or latency issues.

Before committing to a complex multi-region setup, consider alternative architectures. A simpler solution, such as a service mesh connecting several independent regional clusters, might be more manageable. Evaluate your specific needs and explore different architectural strategies to find the most efficient and maintainable solution. Understanding the underlying need often reveals simpler approaches.

Just as with multi-zone deployments, planning for regional outages is essential. Develop a disaster recovery plan that addresses how you’ll recover if an entire region goes down. Within a region, replicating control plane components (API server, scheduler, etcd, controller manager) across at least three zones provides high availability and limits the impact of a zone failure. The same principle of redundancy across failure domains extends to multi-region deployments.

Special Environment Considerations for Multi-Region Kubernetes

FedRAMP and GovCloud Requirements for Multi-Region

FedRAMP and GovCloud add extra requirements beyond standard multi-region deployments, needing specialized methods for networking, security, and access control.

Network Restrictions in Multi-Region Environments

Network restrictions in these environments are much stricter than in standard cloud regions. Unlike typical deployments where outbound internet access is often open, FedRAMP environments limit egress to approved endpoints. Security boundaries also keep these environments separate from other infrastructure. These are regulatory requirements, not just technical constraints, and they influence both architecture and operations.

Access Control Requirements in Multi-Region Kubernetes

Access control requirements also set GovCloud apart from standard environments. Strict personnel restrictions allow only U.S. citizens or green card holders to manage these systems, excluding offshore workers and foreign citizens. Organizations need to establish separate management structures, access policies, and operational procedures to comply with these rules. In practice, this means maintaining separate management accounts and tool instances (like separate deployment consoles or observability stacks) for GovCloud regions.

Multi-Zone Kubernetes Best Practices

While true multi-region Kubernetes requires advanced solutions, multi-zone deployments are readily achievable and offer significant benefits. Let's explore some best practices:

Topology Spread Constraints for Resilience

For workloads managed by resources such as Deployments and StatefulSets, Kubernetes spreads pods across worker nodes and, where nodes carry zone labels, across zones. This spreading is best effort, but it reduces the chance that a single zone failure takes down your entire application. You can fine-tune the distribution with topology spread constraints, which keep pods evenly distributed across zones even when zones have different node counts. This helps maintain high availability.
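As an illustration, the sketch below builds the `topologySpreadConstraints` entry for a pod spec as a Python dictionary and shows how skew is calculated. The field names are the standard Kubernetes ones; the `app: web` label and the pod counts are assumptions for the example.

```python
import json


def zone_spread_constraint(app_label: str, max_skew: int = 1) -> dict:
    """Build one topologySpreadConstraints entry that spreads pods by zone."""
    return {
        "maxSkew": max_skew,
        "topologyKey": "topology.kubernetes.io/zone",
        # DoNotSchedule makes this a hard rule; ScheduleAnyway makes it a preference.
        "whenUnsatisfiable": "DoNotSchedule",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }


def skew(pods_per_zone: dict[str, int]) -> int:
    """Difference between the fullest and the emptiest zone."""
    return max(pods_per_zone.values()) - min(pods_per_zone.values())


constraint = zone_spread_constraint("web")
print(json.dumps({"topologySpreadConstraints": [constraint]}, indent=2))

# With maxSkew 1, a layout of 3/3/2 pods is allowed, while 4/3/1 is not.
balanced = skew({"zone-a": 3, "zone-b": 3, "zone-c": 2})
unbalanced = skew({"zone-a": 4, "zone-b": 3, "zone-c": 1})
print(balanced, unbalanced)
```

The printed fragment would go under `spec.template.spec` of a Deployment whose pods carry the matching label.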

StorageClass Configuration for Zone Selection

Data locality is crucial for performance. Kubernetes adds zone labels to Persistent Volumes (PVs) that are tied to a specific zone, and the scheduler places pods that claim such a volume in the same zone. StorageClasses control how, and in which zones, new volumes are provisioned. Proper configuration keeps data access fast and latency low, which is especially important for stateful applications.
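A zone-aware StorageClass might look like the following sketch, again expressed as a Python dictionary. The `volumeBindingMode` and `allowedTopologies` fields are part of the StorageClass API; the provisioner name, class name, and zone names are placeholders, so substitute the values for your own CSI driver and cloud.

```python
import json


def zonal_storage_class(name: str, provisioner: str, zones: list[str]) -> dict:
    """Build a StorageClass that delays binding until a pod is scheduled."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Wait for a pod to be scheduled so the volume is created in the pod's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowedTopologies": [
            {
                "matchLabelExpressions": [
                    {"key": "topology.kubernetes.io/zone", "values": zones}
                ]
            }
        ],
    }


# Placeholder provisioner and zones; these are not real driver or zone names.
storage_class = zonal_storage_class(
    "zonal-ssd", "example.com/csi-driver", ["zone-a", "zone-b"]
)
print(json.dumps(storage_class, indent=2))
```

With `WaitForFirstConsumer`, the volume is provisioned only after the scheduler has chosen a node, which avoids creating a volume in a zone where the pod cannot run.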

Node Management and Recovery in Multi-Zone Clusters

You're responsible for creating and managing nodes across zones. Tools like the Cluster API can automate this, simplifying scaling and recovery from zone failures. This automation is essential for maintaining a healthy and responsive multi-zone cluster, allowing you to focus on application management rather than infrastructure maintenance. For a more streamlined approach to multi-cluster management, consider platforms like Plural.

Control Plane Replication and DNS for High Availability

The Kubernetes control plane (API server, scheduler, etcd, controller manager) should be replicated across at least three zones. This redundancy ensures high availability and fault tolerance, protecting your cluster from control plane failures. Robust DNS configuration within your cluster is also vital for reliable service discovery in a multi-zone setup, ensuring that pods can consistently locate and communicate with each other.

Multi-Cloud and Multi-Region Database Deployment Strategies

Multi-cloud and multi-region database deployments offer resilience against vendor lock-in and regional outages, but introduce complexity.

Mitigating Vendor Lock-in and Cloud Provider Outages

Distributing your database across multiple cloud providers and regions protects against outages caused by natural disasters, provider-specific issues, or even geopolitical events. This strategy enhances business continuity and disaster recovery capabilities, ensuring your data remains accessible even in challenging circumstances. This approach also reduces reliance on a single vendor, giving you more flexibility and negotiating power.

Handling Software and Configuration Faults Across Clouds

While separate regional clusters improve availability and fault tolerance, they also increase management complexity. Maintaining consistent configurations, security policies, and access controls across multiple environments is crucial for avoiding inconsistencies and security vulnerabilities. Consider tools like Plural to help manage this complexity, enabling consistent deployments and simplified operations across your multi-region infrastructure.

Specific Multi-Region Solutions

Let's look at specific solutions offered by major cloud providers:

Amazon MemoryDB: Automatic Replication and Failover

Regional Clusters within a Multi-Region MemoryDB Cluster

Amazon MemoryDB for Redis allows you to create up to five regional clusters, each residing in a different AWS region. These regional clusters operate independently but synchronize data asynchronously, providing low-latency reads within each region.

Data Synchronization Between MemoryDB Regional Clusters

MemoryDB uses asynchronous replication to synchronize data between regional clusters. This approach balances performance and consistency, allowing for fast local reads while ensuring eventual consistency across regions. This is ideal for applications that can tolerate some degree of data lag between regions.

Connection Methods and Regional Endpoints for MemoryDB

Each MemoryDB regional cluster has its own endpoint. Applications connect to the nearest regional endpoint for optimal performance. Failover is handled automatically, redirecting connections to a healthy region in case of an outage. This automatic failover minimizes downtime and ensures application continuity.

Google Kubernetes Engine (GKE) Regional Clusters

Benefits of GKE Regional Clusters

GKE regional clusters distribute your control plane and worker nodes across multiple zones within a region. This enhances resilience against single-zone outages and improves application availability. The distributed control plane also reduces the risk of a single point of failure.

Zonal, Multi-Zonal, and Regional Clusters in GKE

GKE offers various cluster types: zonal (control plane and nodes in a single zone), multi-zonal (a single control plane in one zone, with nodes in multiple zones of a region), and regional (control plane replicas and nodes distributed across multiple zones). Choosing the right type depends on your resilience and performance requirements. Zonal clusters are the simplest but least resilient, while regional clusters offer the highest availability.

Limitations and Considerations for GKE Regional Clusters

Regional clusters consume more IP addresses than zonal clusters. Be mindful of your project's IP address quotas and request increases if needed. Also, consider the increased cost associated with running a regional cluster compared to a zonal or multi-zonal cluster. Evaluate your needs and budget to determine the best fit.

Key Kubernetes Concepts for Multi-Region/Multi-Zone

Regions vs. Zones: Understanding the Difference

A region is a geographical area encompassing multiple availability zones. Zones are isolated locations within a region, designed for fault tolerance. Understanding this distinction is fundamental for designing resilient deployments. Choosing the right combination of regions and zones is crucial for balancing performance, availability, and cost.

Control Plane Components in Multi-Region/Multi-Zone

In multi-zone/multi-region setups, control plane components (API server, scheduler, controller manager, etcd) are strategically distributed for high availability. The specific distribution strategy depends on the chosen architecture and the capabilities of your chosen platform. Careful planning and configuration are essential for ensuring control plane resilience.

Persistent Volumes and StorageClasses in Multi-Region/Multi-Zone

Managing persistent storage across zones or regions requires careful consideration of data replication and consistency. StorageClasses play a key role in defining how PVs are provisioned and accessed in a distributed environment. Choosing the right storage solution and configuration is critical for data integrity and application performance.

Challenges of On-Premises Multi-Region Kubernetes

On-premises multi-region Kubernetes presents unique challenges compared to cloud-based solutions.

Automatic Failover Mechanisms in On-Premises Environments

Implementing automatic failover in on-premises environments requires careful planning and robust solutions. Unlike cloud providers that offer managed failover services, on-premises setups rely on custom solutions or integrating with third-party tools. This adds complexity and requires specialized expertise.

Load Balancing Complexity in On-Premises Multi-Region

Load balancing across on-premises regions can be complex, requiring specialized hardware or software load balancers and careful configuration to manage traffic effectively. Maintaining and troubleshooting these load balancers can also be challenging.

Limited Solutions and Documentation for On-Premises Multi-Region

Compared to cloud-based Kubernetes, fewer readily available solutions and less comprehensive documentation exist for on-premises multi-region deployments. This can make implementation more challenging and require deeper technical expertise. Organizations may need to develop custom solutions or adapt existing tools to fit their specific needs.

Latency Considerations Between On-Premises and Cloud

If integrating on-premises infrastructure with cloud regions, consider the latency between locations. High latency can impact application performance and complicate data synchronization. Optimizing network connectivity and choosing appropriate data replication strategies are essential for mitigating latency issues.

Plural's Approach to Multi-Region Kubernetes Management

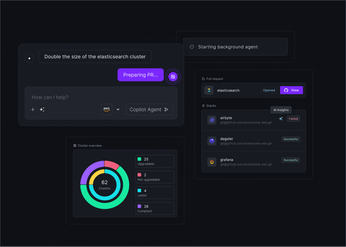

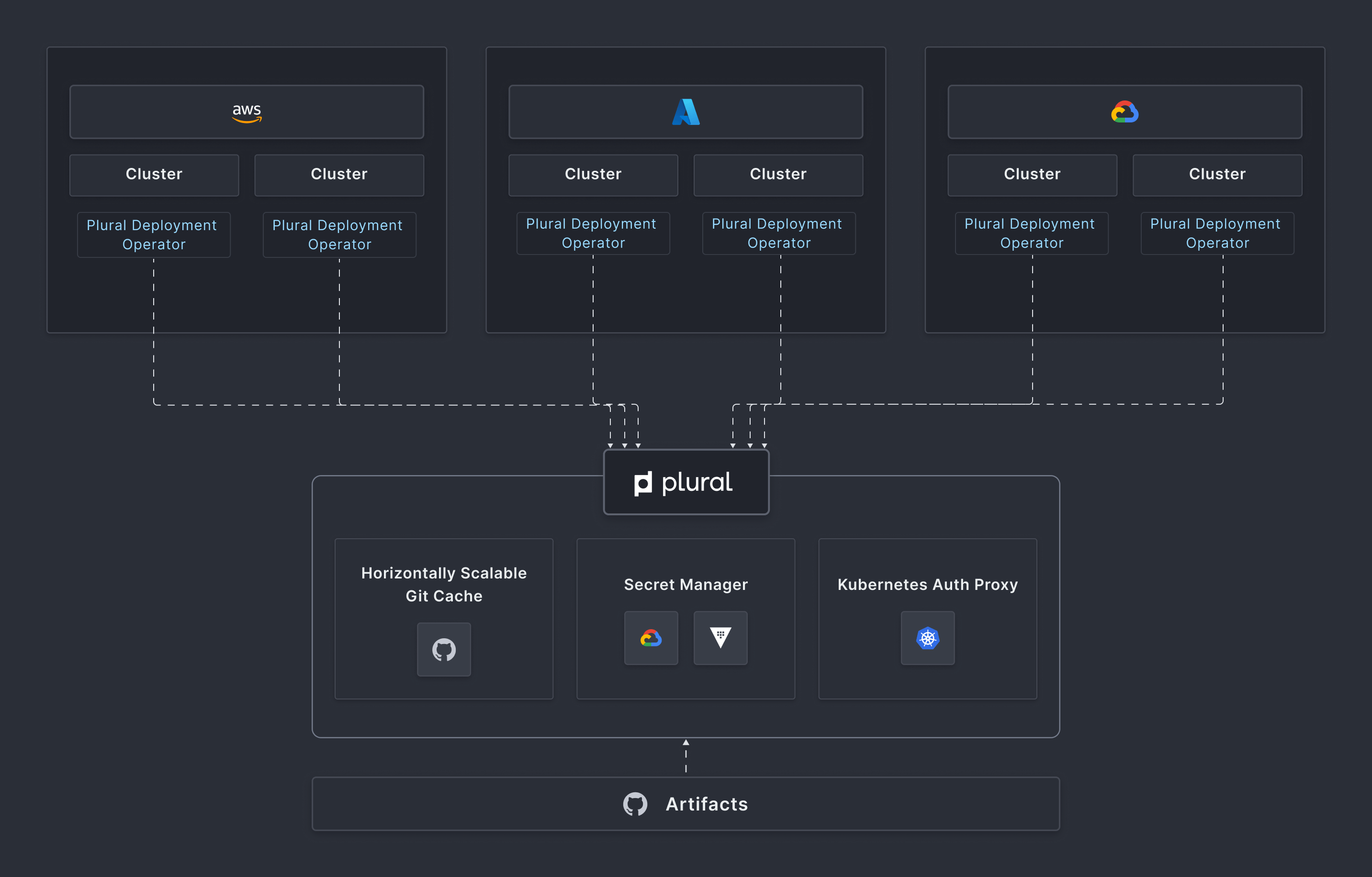

Plural's Agent-First Architecture for Multi-Region

One of the major challenges Kubernetes management platforms face is networking. Different network topologies and configurations can make it difficult to connect with clusters. However, Plural simplifies this issue with its lightweight, agent-based architecture.

Here's how it works: Plural deploys a control plane in your management cluster and a lightweight agent as a pod in each workload cluster. This agent creates secure outbound WebSocket connections to the control plane, allowing smooth two-way communication without exposing Kubernetes API servers or adding network complexity.

Once deployed, the agent:

- Establishes an authenticated connection to the Plural control plane

- Registers the cluster and its capabilities

- Receives commands and configurations from the control plane

- Executes changes locally within the cluster

- Reports status and metrics back to the control plane
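The general shape of this pull-based pattern can be shown with a small in-memory simulation. This is a conceptual sketch only: the class and method names are invented for illustration and do not represent Plural's API, wire protocol, or implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ControlPlane:
    """Stand-in for a central control plane; it never dials into a cluster."""
    pending: dict = field(default_factory=dict)
    status: dict = field(default_factory=dict)

    def register(self, cluster: str) -> None:
        self.pending.setdefault(cluster, deque())
        self.status.setdefault(cluster, [])

    def enqueue(self, cluster: str, command: str) -> None:
        self.pending.setdefault(cluster, deque()).append(command)

    def next_command(self, cluster: str) -> Optional[str]:
        queue = self.pending[cluster]
        return queue.popleft() if queue else None

    def report(self, cluster: str, message: str) -> None:
        self.status[cluster].append(message)


@dataclass
class Agent:
    """Hypothetical in-cluster agent: every call is initiated by the agent."""
    cluster: str
    control_plane: ControlPlane

    def run_once(self) -> int:
        """One cycle: register, pull pending commands, apply locally, report."""
        self.control_plane.register(self.cluster)
        applied = 0
        while (command := self.control_plane.next_command(self.cluster)) is not None:
            # A real agent would apply changes against the local API server here.
            self.control_plane.report(self.cluster, f"applied: {command}")
            applied += 1
        return applied


control_plane = ControlPlane()
agent = Agent("eu-west-1", control_plane)
control_plane.enqueue("eu-west-1", "deploy web v2")
applied = agent.run_once()
print(applied, control_plane.status["eu-west-1"])
```

Because the agent starts every exchange, the control plane needs no route to the cluster's API server; the only network requirement is that the agent can reach the control plane.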

This outbound-only connection model provides key advantages:

- Seamless network boundary traversal: Agents function across VPCs and regions without requiring VPC peering or exposing cluster endpoints.

- Simplified firewall management: Only outbound connections to Plural’s API endpoints are needed, eliminating complex ingress rules.

- Compatibility with air-gapped environments: A local control plane can be deployed to meet strict network isolation requirements.

Plural also simplifies cluster bootstrapping, allowing rapid multi-region expansion. The streamlined process includes:

- Automated agent deployment: Clusters are bootstrapped with minimal configuration through a simple initialization process.

- Self-registration: Agents automatically register with the control plane, sharing cluster details and capabilities.

- Configuration synchronization: The control plane instantly syncs configurations based on cluster roles and policies.

Unified Management Capabilities with Plural

Deployment Orchestration with Plural

As we've seen earlier, managing deployments and upgrades across multiple regions, where you must keep versions and configurations consistent, is challenging. Plural simplifies this process with:

- Centralized Control: Manage all your regional clusters from a single control plane, ensuring consistent deployments and configurations.

- GitOps-Driven Deployments: Use Git as the source of truth for your deployments, providing version control, audit trails, and easy rollback capabilities. Learn more about Plural's approach to GitOps.

- Automated Rollouts and Rollbacks: Automate deployments and upgrades across all regions with built-in safeguards and automated rollbacks in case of failures. Explore Plural's deployment features.

Observability and Monitoring with Plural

Gaining a unified view of your multi-region Kubernetes deployments is crucial for effective monitoring and troubleshooting. Plural addresses the observability challenges discussed earlier by providing:

- Aggregated Monitoring: Collect metrics and logs from all your regional clusters in a central location, eliminating the need to query disparate data sources. See how Plural simplifies monitoring.

- Unified Dashboards: Visualize the health and performance of your entire multi-region deployment through intuitive dashboards, providing real-time insights into critical metrics. Learn more about using the Plural dashboard.

- Alerting and Notifications: Configure alerts for key events and performance thresholds, ensuring timely responses to potential issues across all regions.

Security and Access Control with Plural

Maintaining consistent security policies and access controls across multiple regions is essential for protecting your applications and data. Plural simplifies multi-region Kubernetes security with:

- Centralized Policy Management: Define and enforce security policies consistently across all your regional clusters from a single control plane. Explore Plural's security features.

- Role-Based Access Control (RBAC): Manage user access to your Kubernetes resources based on roles and permissions, ensuring secure access across all regions. Learn more about configuring RBAC with Plural.

- Secure Cluster Connectivity: Leverage Plural's agent-based architecture to establish secure connections between your control plane and workload clusters, minimizing the attack surface.

By addressing the core challenges of multi-region Kubernetes, Plural empowers organizations to build and manage highly available, resilient, and secure applications at scale. Book a demo to see how Plural can simplify your multi-region Kubernetes journey.

Cross-Region Deployment Coordination with Plural

Plural’s agent-based architecture and egress-only communication simplify multi-region Kubernetes management through a centralized control plane, eliminating the need for complex networking configurations.

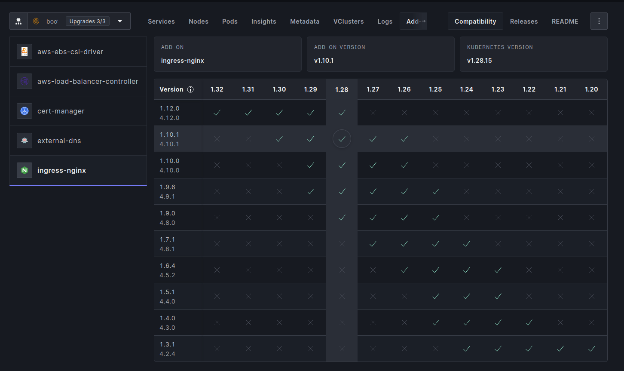

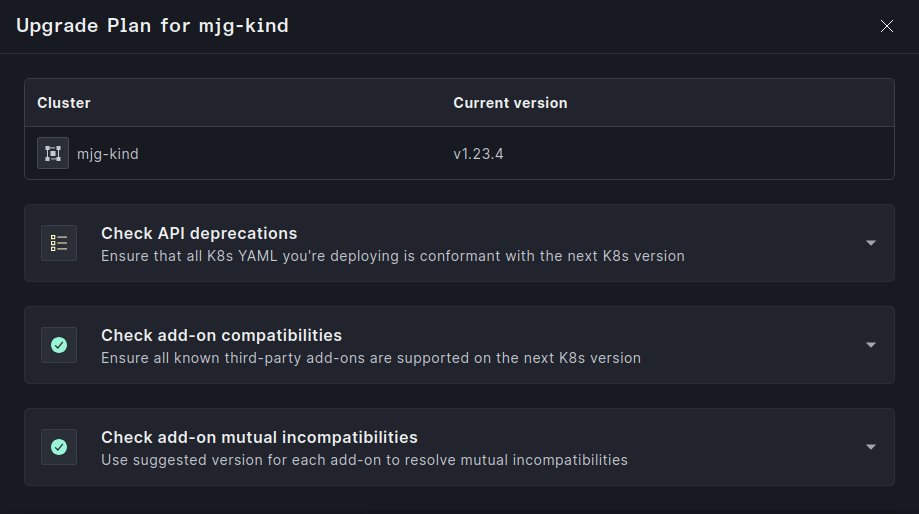

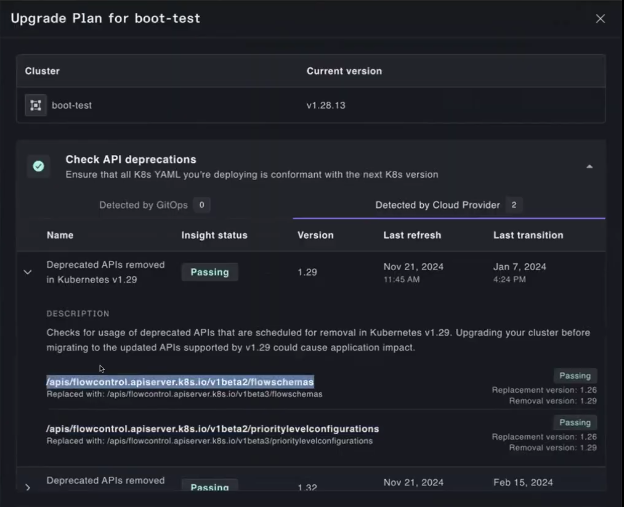

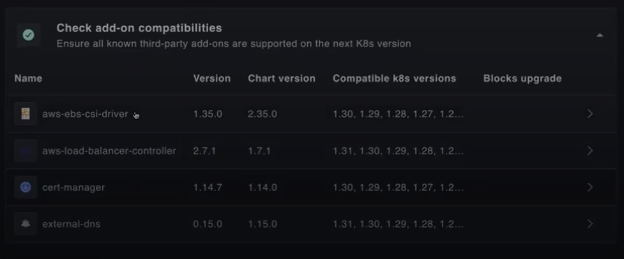

Its Continuous Deployment Engine streamlines Kubernetes lifecycle management by automating upgrades, node group rotations, and add-on updates with minimal disruption. The pre-flight checklist ensures version compatibility, while the version matrix maps controller versions to specific Kubernetes versions, enabling seamless, error-free upgrades.
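To make the idea of a version matrix concrete, here is a minimal sketch of a pre-flight check. The add-on names, version numbers, and the `preflight` function are invented for illustration and do not reflect Plural's data format or any real compatibility data.

```python
# Hypothetical version matrix: add-on -> add-on version -> Kubernetes
# minor versions that version is listed as compatible with.
VERSION_MATRIX = {
    "example-ingress": {
        "1.9": {"1.27", "1.28"},
        "1.10": {"1.28", "1.29", "1.30"},
    },
    "example-certs": {
        "1.13": {"1.27", "1.28", "1.29"},
        "1.14": {"1.28", "1.29", "1.30"},
    },
}


def preflight(installed: dict[str, str], target_k8s: str) -> list[str]:
    """Return a message for every add-on that would block the upgrade."""
    blockers = []
    for addon, version in installed.items():
        supported = VERSION_MATRIX.get(addon, {}).get(version, set())
        if target_k8s not in supported:
            blockers.append(
                f"{addon} {version} does not list Kubernetes {target_k8s}"
            )
    return blockers


installed = {"example-ingress": "1.9", "example-certs": "1.14"}
print(preflight(installed, "1.29"))
```

An empty result means every installed add-on lists the target version; any entry names a controller that should be upgraded first.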

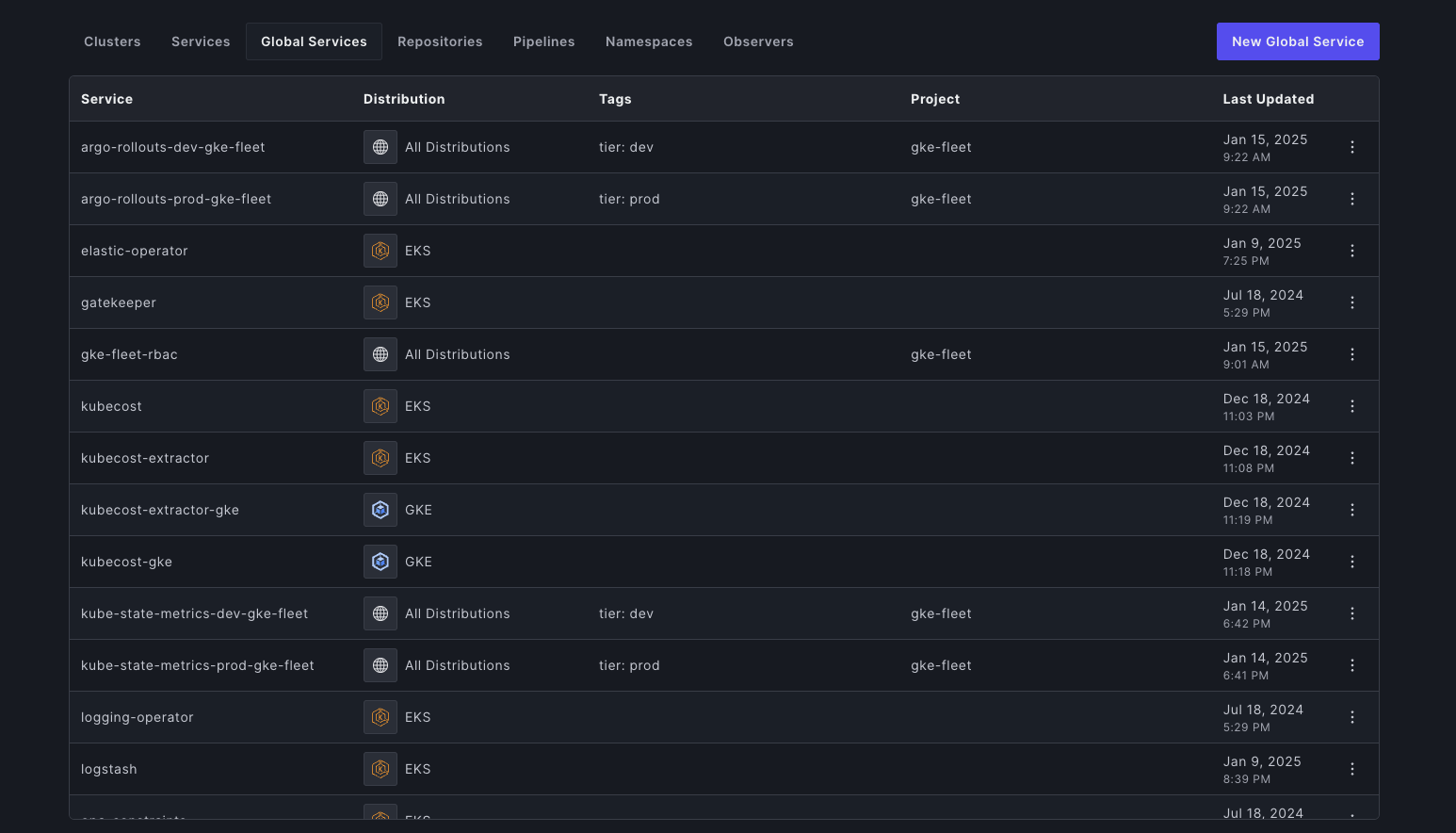

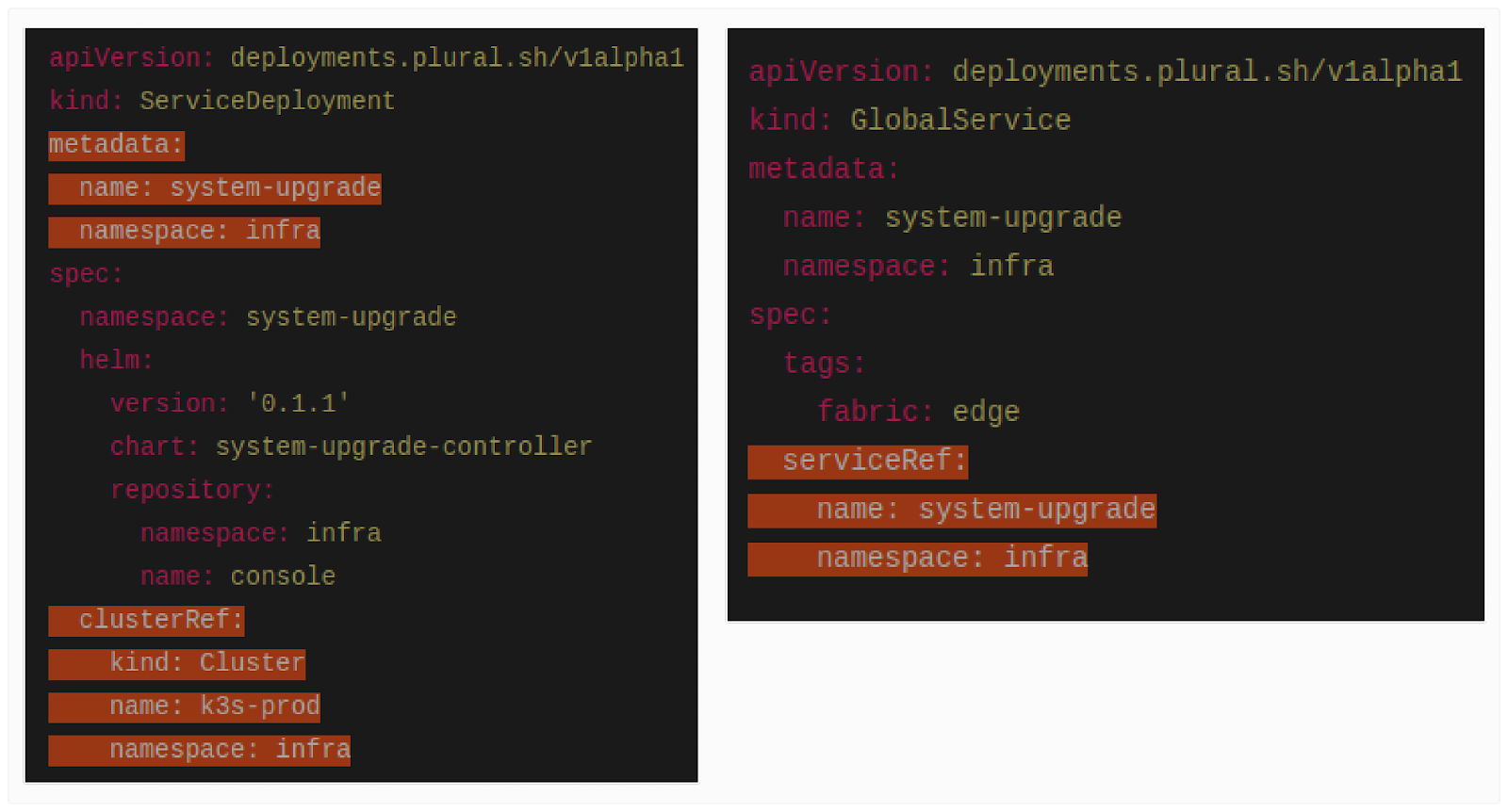

Configuration Consistency with Plural

Plural ensures consistent configurations across regions through Global Services, standardizing networking, observability agents, and CNI providers. Instead of managing these add-ons individually per cluster, users define a single Global Service resource, which automatically replicates configurations across all targeted clusters.
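One way to picture "define once, replicate to all targeted clusters" is a selector that is matched against cluster tags. The sketch below assumes tag-based targeting; the `target_clusters` function and the tag names are hypothetical and are not the Global Service schema.

```python
def target_clusters(
    clusters: dict[str, dict[str, str]], selector: dict[str, str]
) -> list[str]:
    """Return the clusters whose tags match every key/value in the selector."""
    return sorted(
        name
        for name, tags in clusters.items()
        if all(tags.get(key) == value for key, value in selector.items())
    )


# Illustrative fleet: cluster name -> tags.
clusters = {
    "eu-west-prod": {"env": "prod", "region": "eu"},
    "ap-south-prod": {"env": "prod", "region": "apac"},
    "eu-west-dev": {"env": "dev", "region": "eu"},
}

# A single definition fans out to every cluster the selector matches.
print(target_clusters(clusters, {"env": "prod"}))
```

An empty selector matches every cluster, which is the natural choice for add-ons that must run everywhere, such as an observability agent.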

Policy Enforcement at Scale with Plural

Plural enforces security and compliance policies across all regions using a centralized policy-as-code framework with OPA Gatekeeper. This ensures that security and governance policies are automatically applied across all clusters, preventing misconfigurations and enforcing compliance standards.
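Gatekeeper policies themselves are written in Rego and applied through constraint resources. The Python sketch below is only an analogy: it mirrors the logic of a typical "required labels" rule so the kind of check being enforced in every cluster is easier to see. The function name and label keys are illustrative.

```python
def missing_labels(manifest: dict, required: set[str]) -> set[str]:
    """Return the required label keys that are absent from a manifest."""
    labels = manifest.get("metadata", {}).get("labels") or {}
    return required - set(labels)


required = {"owner", "cost-center"}

compliant = {
    "kind": "Namespace",
    "metadata": {
        "name": "payments",
        "labels": {"owner": "team-a", "cost-center": "cc-42"},
    },
}
violating = {
    "kind": "Namespace",
    "metadata": {"name": "scratch", "labels": {"owner": "team-b"}},
}

print(missing_labels(compliant, required))
print(missing_labels(violating, required))
```

Because the same rule is evaluated in every region, a resource that violates it is rejected regardless of which cluster it targets.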

Access Control and Security with Plural

Managing access control in distributed environments is challenging, especially when handling sensitive data, meeting strict requirements such as FedRAMP, or operating in GovCloud environments. Plural simplifies this with the following capabilities:

Project-Based Segregation with Plural

Plural’s project-based access control model enables precise resource and responsibility segregation within a multi-tenant architecture. Each project operates independently within the control plane, with its own resources, configurations, and RBAC policies while maintaining logical isolation.
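A rough sketch of project-scoped access, assuming each cluster belongs to one project and each project carries its own role bindings. The `Project` class, the role names, and the `is_allowed` helper are hypothetical and are not Plural's RBAC model.

```python
from dataclasses import dataclass, field

# Illustrative roles and the actions each one grants.
ROLE_PERMISSIONS = {"viewer": {"read"}, "operator": {"read", "deploy"}}


@dataclass
class Project:
    name: str
    clusters: set[str] = field(default_factory=set)
    # User -> role, valid inside this project only.
    bindings: dict[str, str] = field(default_factory=dict)


def is_allowed(projects: list[Project], user: str, action: str, cluster: str) -> bool:
    """Allow an action only through a binding in the cluster's own project."""
    for project in projects:
        if cluster in project.clusters:
            role = project.bindings.get(user)
            return action in ROLE_PERMISSIONS.get(role, set())
    return False  # Unknown cluster: deny by default.


projects = [
    Project("regulated", {"gov-east"}, {"alice": "operator"}),
    Project("commercial", {"eu-west", "ap-south"}, {"alice": "viewer", "bob": "operator"}),
]

print(is_allowed(projects, "bob", "deploy", "eu-west"))
print(is_allowed(projects, "bob", "read", "gov-east"))
```

Here `bob` can deploy to commercial clusters but has no access at all to the regulated project, since bindings never cross project boundaries.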

Environment-Specific Policies with Plural

Plural enforces environment-specific security policies by categorizing clusters into development, testing, and production environments, applying appropriate security controls automatically. Additional approval steps may be required for critical changes, ensuring proper oversight and compliance with regulatory standards.

FedRAMP/GovCloud Compatibility with Plural

Plural meets FedRAMP and GovCloud requirements with outbound-only connectivity for strict egress control. Its project-based model segregates regulated and commercial environments, restricting access to authorized personnel. It also enforces audit logging, access controls, and separation of duties, ensuring compliance with FedRAMP monitoring standards.

Operational Advantages of Using Plural

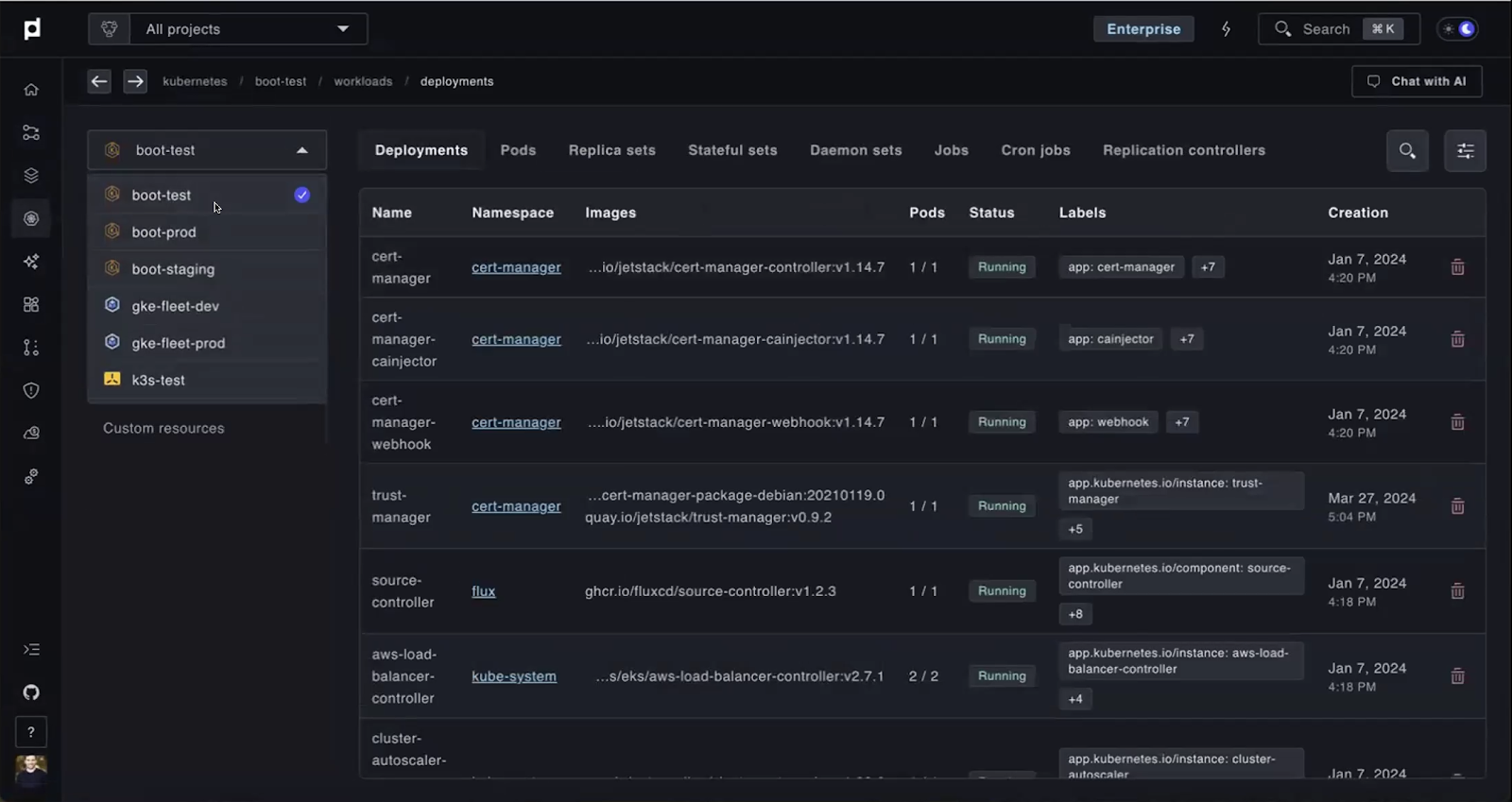

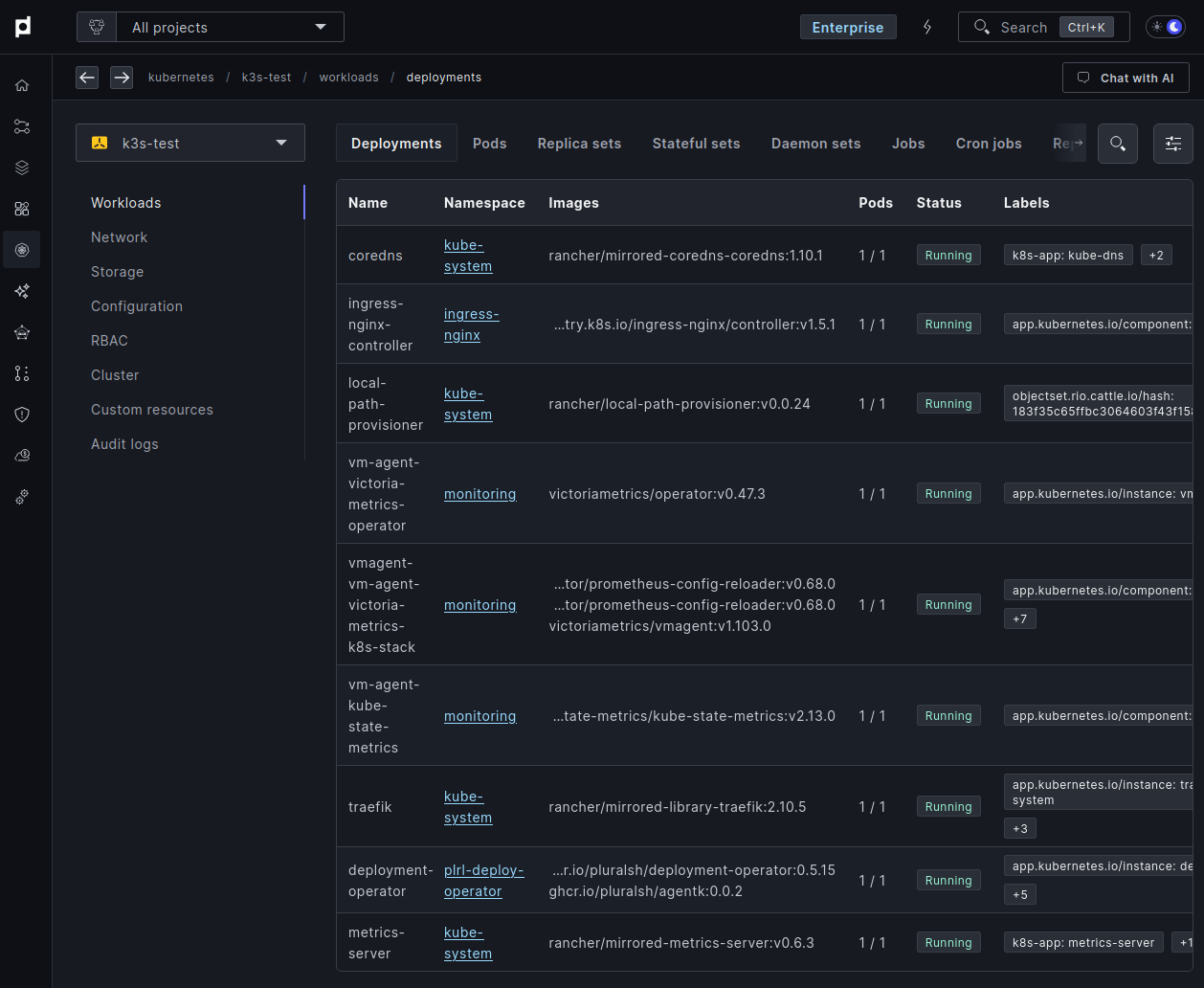

Centralized Visibility with Plural

As we've observed, fragmented observability tools and data aggregation challenges make monitoring and troubleshooting difficult in multi-region setups. Plural solves this with its built-in Multi-Cluster Dashboard, which unifies everything for a smooth debugging experience.

It provides detailed, real-time visibility into Kubernetes resources like pods, deployments, networking, and storage across all regions. Its agents efficiently collect metrics to a central platform, avoiding common cross-region pull-based issues, while centralized retention policies ensure compliance and cost efficiency. Additionally, Plural centralizes logs and events, offering intuitive search and linking tools to simplify troubleshooting and quickly identify issues in multi-region environments.

Simplified Tooling with Plural

Running separate instances of tools like ArgoCD or Prometheus in each region is another source of frustration. Plural removes much of that duplication.

Eliminating Regional Tool Instances with Plural

Plural natively supports GitOps, eliminating the need for a separate ArgoCD installation. Its centralized CD system leverages reverse tunneling through its agent-based architecture, ensuring secure and efficient deployments. The same applies to logging: Plural includes comprehensive, built-in logging, removing the need for external logging tools.

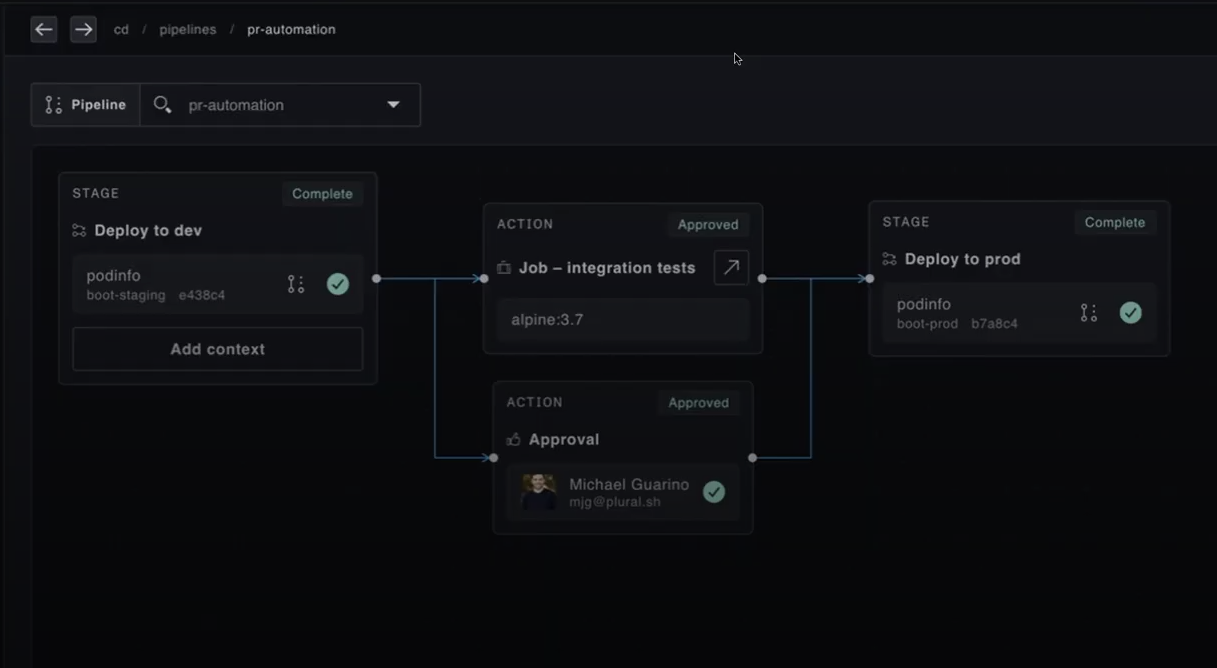

Consistent Deployment Processes with Plural

Plural streamlines cluster and service upgrades through its GitOps-powered CD pipeline. This workflow orchestrates staged upgrades across multiple clusters using Infrastructure Stack Custom Resource Definitions (CRDs). When configuration changes are pushed to Git, the pipeline first modifies CRDs for development clusters. After integration tests pass and approvals are received, the service automatically updates production cluster CRDs, ensuring consistent and validated changes across your infrastructure.
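The staged flow described above can be sketched as a loop over stages with a gate between them. This is a simplified illustration, not Plural's pipeline implementation: `staged_rollout`, the `gate` callback (standing in for integration tests plus approvals), and the cluster names are all assumptions.

```python
from typing import Callable, Sequence


def staged_rollout(
    version: str,
    stages: Sequence[Sequence[str]],
    apply: Callable[[str, str], None],
    gate: Callable[[Sequence[str]], bool],
) -> list[str]:
    """Apply `version` stage by stage and stop promoting if a gate fails."""
    updated: list[str] = []
    for index, stage in enumerate(stages):
        for cluster in stage:
            apply(cluster, version)
            updated.append(cluster)
        is_last = index == len(stages) - 1
        if not is_last and not gate(stage):
            break  # Tests failed or approval missing: later stages stay untouched.
    return updated


applied: dict[str, str] = {}
stages = [["dev-eu", "dev-ap"], ["prod-eu", "prod-ap"]]


def record(cluster: str, version: str) -> None:
    # Stand-in for updating the cluster's desired state.
    applied[cluster] = version


full = staged_rollout("v2", stages, record, gate=lambda stage: True)
halted = staged_rollout("v3", stages, record, gate=lambda stage: False)
print(full, halted, applied)
```

In the second run the gate fails after the development stage, so production clusters remain on `v2` while development clusters carry `v3`.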

Streamlined Upgrades with Plural

Plural simplifies cross-region cluster upgrades with pre-flight checks, a version matrix, and Global Services for managing add-on controllers. Using a GitOps-powered CD pipeline, it orchestrates staged upgrades through InfrastructureStack CRDs. Changes are first tested in development clusters and, once validated, rolled out to production, keeping upgrades consistent and reliable across all regions.

Managing Multi-Region Kubernetes with Plural

Managing multi-region Kubernetes is complex, but Plural simplifies it with an agent-based architecture, egress-only communication, and centralized control. Its Continuous Deployment Engine streamlines upgrades, Global Services ensure configuration consistency, and policy enforcement strengthens security and compliance, including for FedRAMP and GovCloud environments.

With centralized visibility and streamlined operations, Plural helps teams scale Kubernetes efficiently while reducing complexity.

Ready to simplify your multi-region Kubernetes strategy? Get started with Plural today.

Frequently Asked Questions

Why is multi-region Kubernetes so complex?

Standard Kubernetes relies on etcd, a consensus-based key-value store that is sensitive to the network latency inherent in geographically dispersed setups. Stretching a single cluster across distant regions slows consensus, raises the risk of lost quorum and repeated leader elections, and makes the control plane unstable. Additionally, features like service discovery and network policies are scoped to a single cluster, so spanning clusters requires complex workarounds.

What are the main benefits of using a tool like Plural for multi-region Kubernetes?

Plural simplifies multi-region Kubernetes management by providing a single control plane to manage all your clusters, regardless of their location. Its agent-based architecture, with egress-only communication, eliminates complex networking configurations and firewall rules. Plural also streamlines deployments, upgrades, and security policy enforcement across all regions, reducing operational overhead and ensuring consistency.

How does Plural's agent-based architecture work?

Plural deploys a lightweight agent in each of your workload clusters. This agent establishes a secure, outbound-only connection to the central control plane. This means the control plane doesn't need direct access to your workload clusters, simplifying networking and security. The agent receives instructions from the control plane and executes them locally, ensuring that all actions are performed within the cluster's own security context.
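The outbound-only model can be illustrated with a small in-memory sketch in which the agent always initiates the exchange and the control plane never calls into the cluster. The `ControlPlaneQueue` and `Agent` classes are hypothetical; a real agent would make authenticated network requests rather than method calls.

```python
from collections import deque
from typing import Optional


class ControlPlaneQueue:
    """Holds pending instructions per cluster until an agent asks for them."""

    def __init__(self) -> None:
        self._pending: dict[str, deque[str]] = {}

    def enqueue(self, cluster: str, instruction: str) -> None:
        self._pending.setdefault(cluster, deque()).append(instruction)

    def fetch(self, cluster: str) -> Optional[str]:
        # Called by the agent, so traffic is outbound from the cluster.
        queue = self._pending.get(cluster)
        return queue.popleft() if queue else None


class Agent:
    def __init__(self, cluster: str, control_plane: ControlPlaneQueue) -> None:
        self.cluster = cluster
        self.control_plane = control_plane
        self.applied: list[str] = []

    def poll_once(self) -> Optional[str]:
        instruction = self.control_plane.fetch(self.cluster)
        if instruction is not None:
            # Executed locally, inside the cluster's own security context.
            self.applied.append(instruction)
        return instruction


plane = ControlPlaneQueue()
agent = Agent("eu-west-prod", plane)
plane.enqueue("eu-west-prod", "apply service web@v2")
print(agent.poll_once(), agent.poll_once(), agent.applied)
```

Nothing in the control plane holds a reference to the agent, which is the property that lets workload clusters stay closed to inbound connections.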

Can Plural help with multi-region deployments in regulated environments like GovCloud?

Yes, Plural is designed to meet the strict requirements of regulated environments like GovCloud. Its outbound-only communication model adheres to egress restrictions, and its project-based access control allows for strict segregation of environments and enforcement of personnel restrictions. Plural also supports comprehensive audit logging and other compliance features.

How does Plural simplify upgrades in a multi-region setup?

Plural uses a GitOps-driven approach to orchestrate upgrades across all regions. Changes are first applied to development clusters, and after testing and approvals, they are automatically rolled out to production clusters. This staged approach, combined with pre-flight checks and version compatibility verification, ensures consistent and reliable upgrades across your entire infrastructure.
