Plural AI: removing the toil from Kubernetes infrastructure work

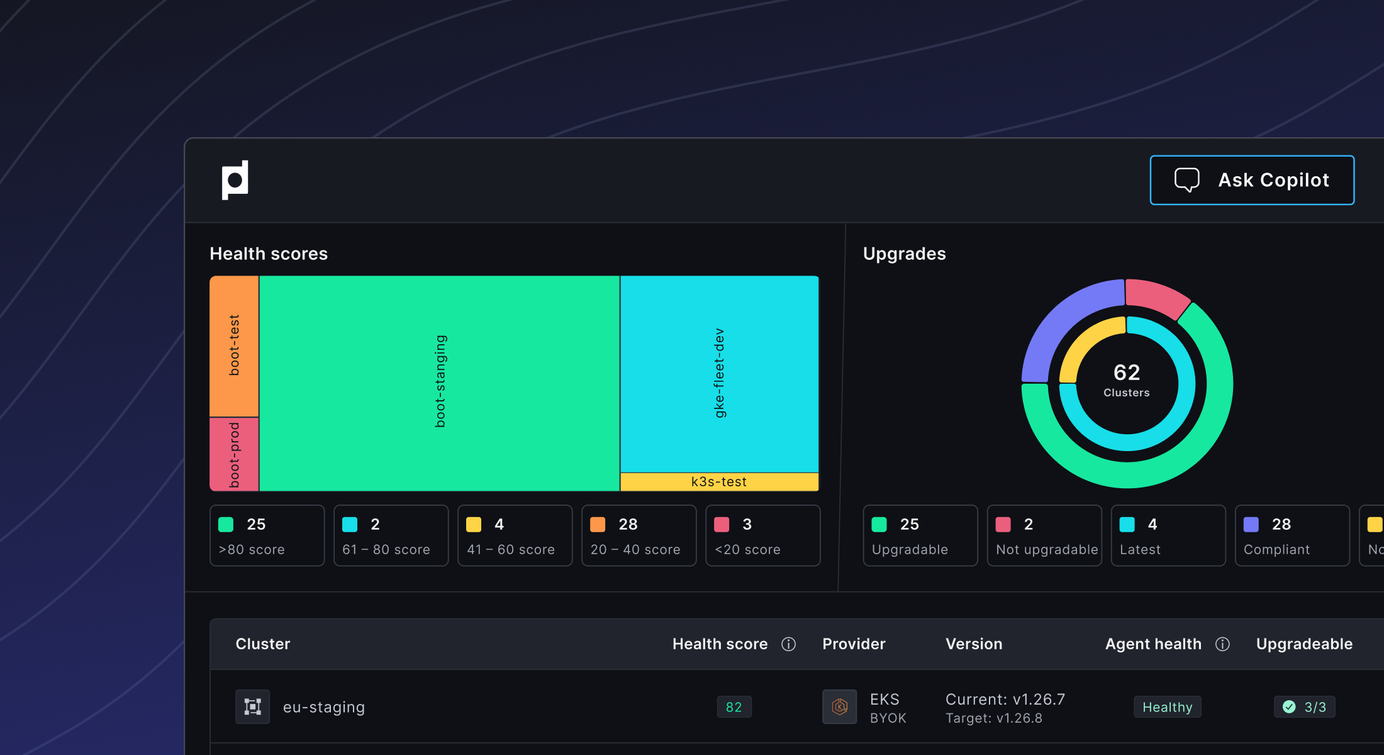

Plural AI transforms Kubernetes management with AI-driven troubleshooting, intelligent remediation, and cost optimization. It automates debugging, suggests fixes, and integrates securely with major AI providers. Plural simplifies operations, enhancing efficiency and security for modern DevOps teams.

Plural is building an enterprise Kubernetes management platform designed for modern teams. Existing Kubernetes tools tend to focus on a single aspect of Kubernetes management, which leaves you cobbling several of them together with inconsistent access policies. The beauty of Plural is that you get everything rolled into one secure, compliant place:

- Plural CD: A fleet-scale continuous deployment engine that goes beyond the capabilities of ArgoCD with enhanced multi-cluster management and deployment automation

- Plural Stacks: A Kubernetes-native approach to infrastructure as code using Custom Resource Definitions (CRDs) and GitOps principles to automate provisioning and updates

- Plural Catalog: A comprehensive application marketplace that simplifies deployment of common tools, similar to Backstage but with deeper integration into the Kubernetes ecosystem

What truly sets Plural apart, however, is Plural AI, our AI offering that brings intelligent decision-making to Kubernetes management and transforms how teams troubleshoot, remediate, and optimize their infrastructure.

Introducing Plural AI

Plural AI represents our vision for the future of Kubernetes management—where artificial intelligence augments human expertise to solve complex infrastructure challenges. This suite of AI-powered capabilities transforms how teams interact with their Kubernetes environments in three critical areas:

- Automated Troubleshooting: Quickly identify root causes of issues across clusters, pods, and services

- Intelligent Remediation: Generate appropriate fixes with context-aware understanding of your environment

- Proactive Optimization: Continuously analyze infrastructure for cost and performance improvements

Security remains paramount in our design. Plural employs a unique agent-based architecture that maintains the highest security standards while enabling AI automation. Instead of granting an external AI system direct access to your infrastructure, Plural deploys lightweight, purpose-built agents within each cluster. These agents execute tasks securely while preserving strict access controls and keeping sensitive data within your environment.

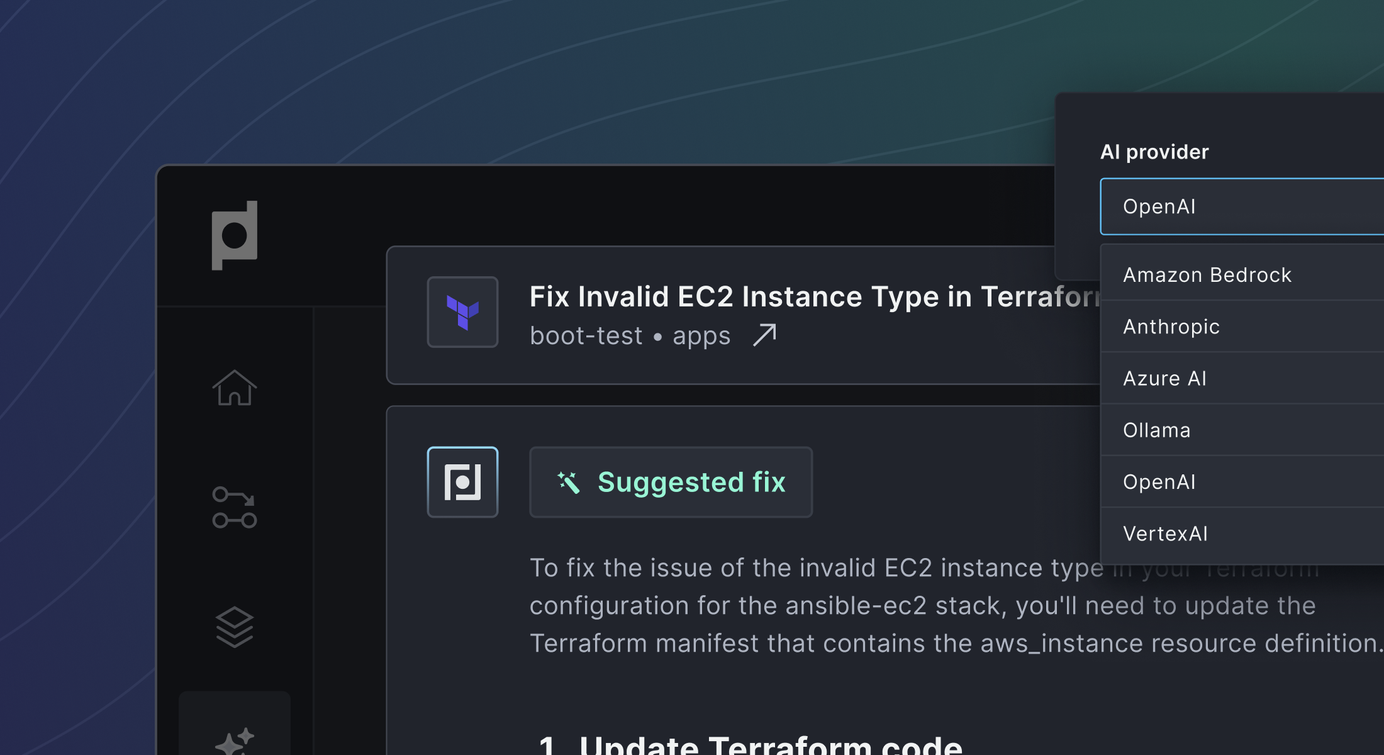

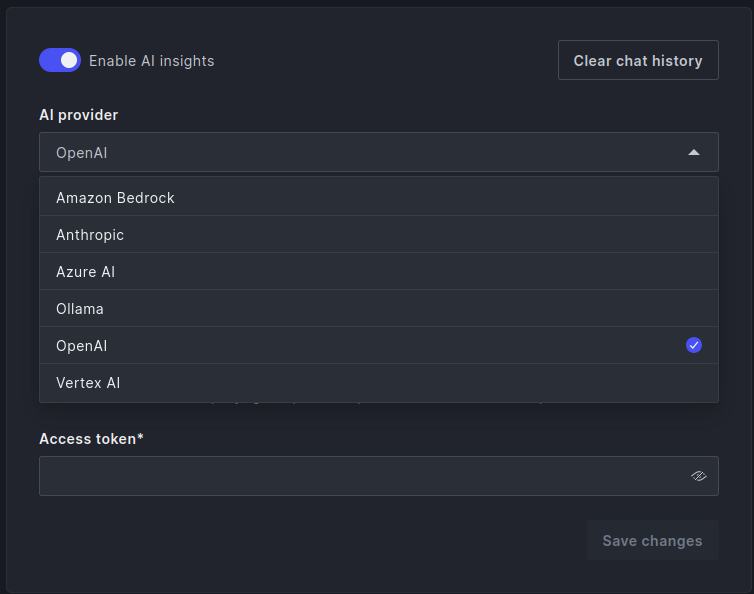

We understand that organizations have different requirements for AI providers. That's why Plural AI offers flexibility to bring your own API keys from leading providers including OpenAI, Anthropic, Amazon Bedrock, or Google Vertex AI. This approach lets you select the AI provider that aligns with your specific security policies, privacy requirements, and budget considerations.

Automatic Troubleshooting and Remediation

One of Plural's core features is automatic troubleshooting and remediation. When Kubernetes services malfunction, engineers typically follow a labor-intensive process: identifying failing pods, gathering logs, analyzing cluster events, debugging networking issues, and checking infrastructure configurations. This involves running multiple commands (kubectl logs, kubectl describe, kubectl get events) to collect data that must be manually analyzed to determine the root cause. Without automation, this process becomes inefficient and error-prone while requiring specialized Kubernetes expertise. Moreover, recurring issues force teams to repeatedly execute these same debugging workflows.
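For reference, the manual loop described above usually boils down to commands like these. The Python sketch below only illustrates that toil; it assumes kubectl is installed and configured for the target cluster, and the helper names are hypothetical, not part of Plural.

```python
from __future__ import annotations

import subprocess


def diagnostic_commands(pod: str, namespace: str) -> list[list[str]]:
    """Build the kubectl commands an engineer typically runs by hand for one failing pod."""
    return [
        ["kubectl", "logs", pod, "-n", namespace],
        ["kubectl", "describe", "pod", pod, "-n", namespace],
        # Restrict events to those whose involved object is this pod.
        ["kubectl", "get", "events", "-n", namespace,
         "--field-selector", f"involvedObject.name={pod}"],
    ]


def collect(pod: str, namespace: str) -> dict[str, str]:
    """Run each command and keep its output (or error text) for manual analysis.

    Requires kubectl on PATH; otherwise subprocess raises FileNotFoundError.
    """
    results: dict[str, str] = {}
    for cmd in diagnostic_commands(pod, namespace):
        proc = subprocess.run(cmd, capture_output=True, text=True)
        results[" ".join(cmd)] = proc.stdout if proc.returncode == 0 else proc.stderr
    return results
```

Every one of these outputs still has to be read and correlated by a person, which is the step Plural automates.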

Plural eliminates this manual burden through intelligent automation. The system automatically collects all relevant information—including logs, events, and configuration data—via the Kubernetes API and a lightweight cluster agent. It analyzes the source code and resource definitions to generate comprehensive insights that include a clear summary, precise root cause identification, supporting evidence, and actionable troubleshooting steps. Plural then takes it a step further by recommending specific fixes and offering the option to create a pull request that applies these changes directly to your infrastructure.
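The shape of such an insight can be pictured as a small record. This Python dataclass is a hypothetical illustration of the four parts named above (summary, root cause, evidence, and troubleshooting steps). It is not Plural's schema, and the Markdown rendering is just one plausible way to turn it into a PR description.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Insight:
    """Hypothetical container for one AI-generated diagnosis."""
    summary: str
    root_cause: str
    evidence: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the insight as Markdown, e.g. for a pull request body."""
        parts = [f"## Summary\n{self.summary}", f"## Root cause\n{self.root_cause}"]
        if self.evidence:
            parts.append("## Evidence\n" + "\n".join(f"- {e}" for e in self.evidence))
        if self.steps:
            numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(self.steps, 1))
            parts.append("## Next steps\n" + numbered)
        return "\n\n".join(parts)
```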

Let’s see Plural AI in action:

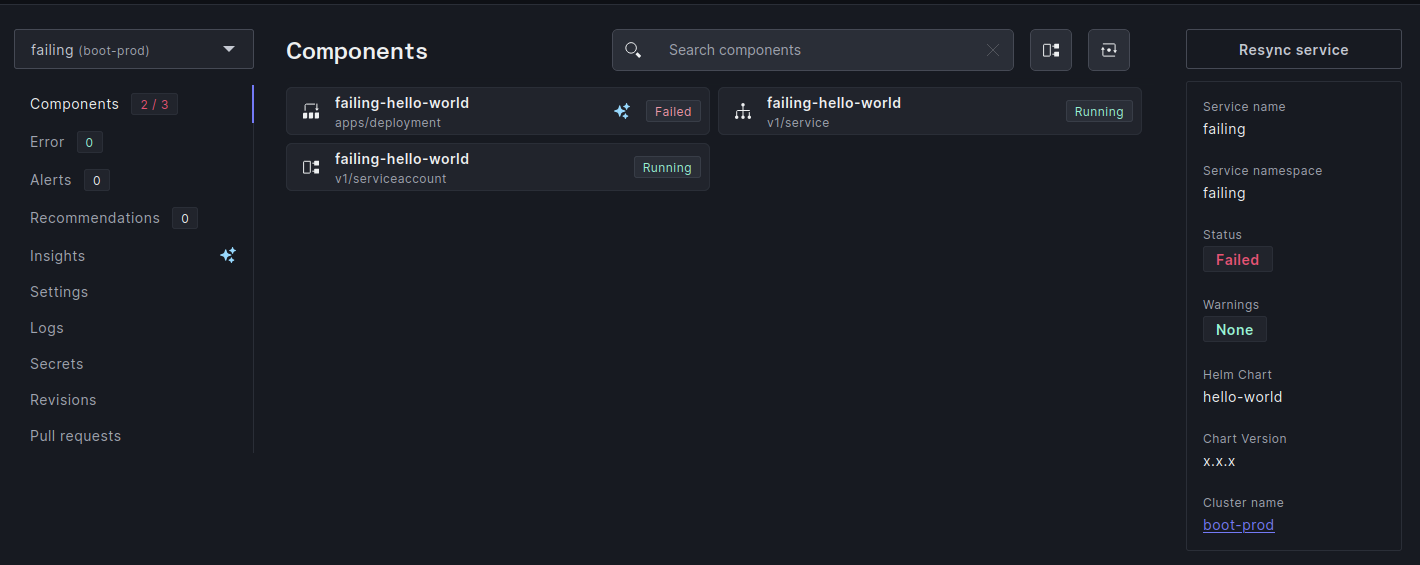

Example 1: Failing service

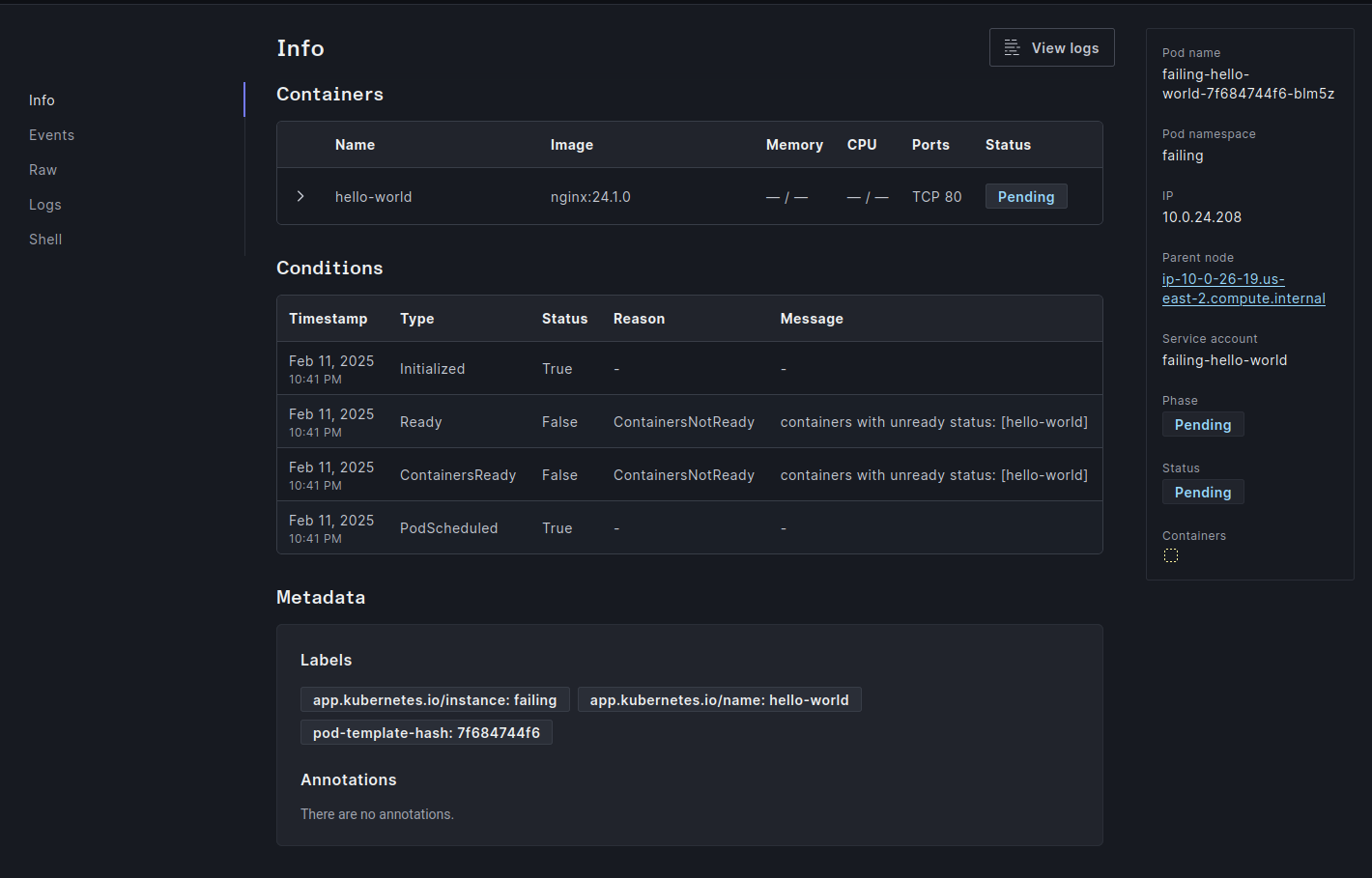

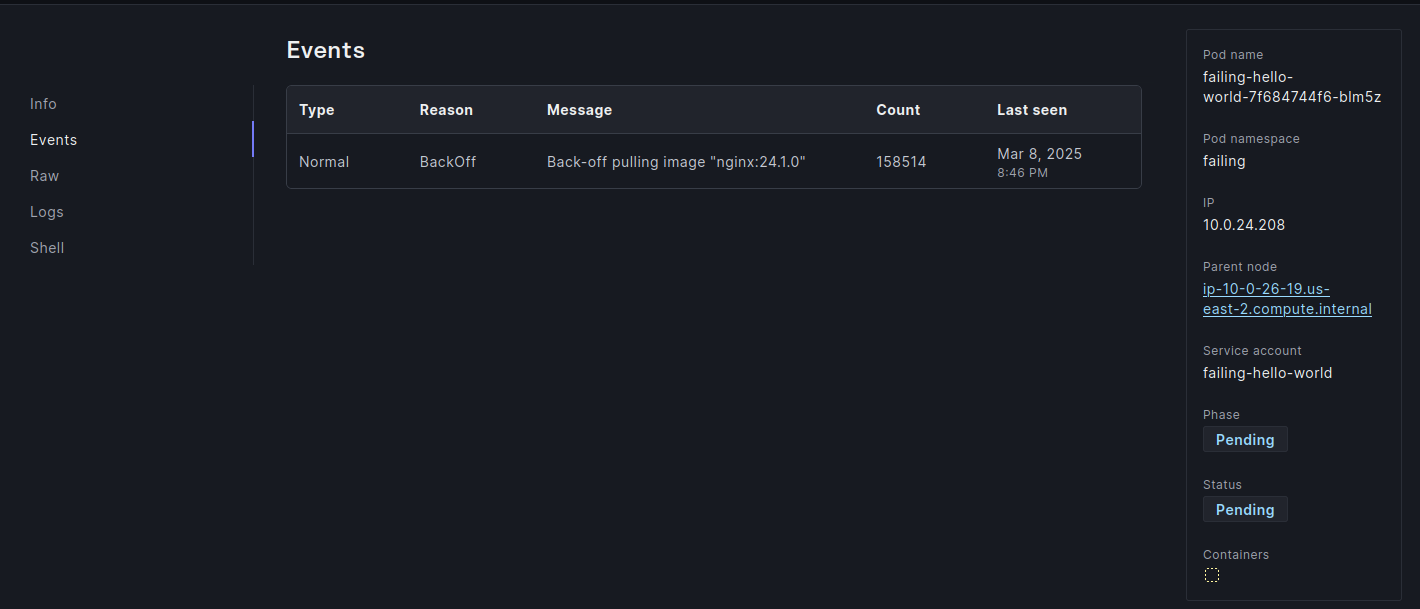

A deployment named failing-hello-world is stuck in a failing state, and its pod failing-hello-world-7f684744f6-blm5z has been Pending for some time.

Plural AI Insights automatically detects and analyzes the failure by gathering Kubernetes events, pod logs, and deployment configurations. In this particular case, it identifies four key signals:

- Pod Status, indicating waiting: {"reason": "ImagePullBackOff"} with the message: Back-off pulling image "nginx:24.1.0"

- Event Logs, showing a high occurrence (158404 attempts) of the error message: Back-off pulling image "nginx:24.1.0"

- Configuration Issues, where the deployment uses IfNotPresent as the image pull policy, which can cause problems if the image does not exist locally

- Registry Issues, where the image version nginx:24.1.0 is either incorrect, missing, or requires authentication credentials
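The first signal comes straight from the pod's status. As a rough illustration, not Plural's implementation, this Python sketch walks a pod document shaped like the output of kubectl get pod -o json (after json.loads) and reports every container stuck in a waiting state. The sample is trimmed and hypothetical except for the reason and message quoted above; the container name is assumed.

```python
from __future__ import annotations


def waiting_containers(pod: dict) -> list[tuple[str, str, str]]:
    """Return (container, reason, message) for every container in a waiting state."""
    found = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        waiting = status.get("state", {}).get("waiting")
        if waiting:
            found.append((status["name"], waiting.get("reason", ""), waiting.get("message", "")))
    return found


# Trimmed, hypothetical pod document; only the fields this sketch reads.
sample = {
    "metadata": {"name": "failing-hello-world-7f684744f6-blm5z"},
    "status": {
        "phase": "Pending",
        "containerStatuses": [{
            "name": "nginx",  # assumed container name
            "image": "nginx:24.1.0",
            "state": {"waiting": {
                "reason": "ImagePullBackOff",
                "message": 'Back-off pulling image "nginx:24.1.0"',
            }},
        }],
    },
}

for name, reason, message in waiting_containers(sample):
    print(f"{name}: {reason} - {message}")
# prints: nginx: ImagePullBackOff - Back-off pulling image "nginx:24.1.0"
```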

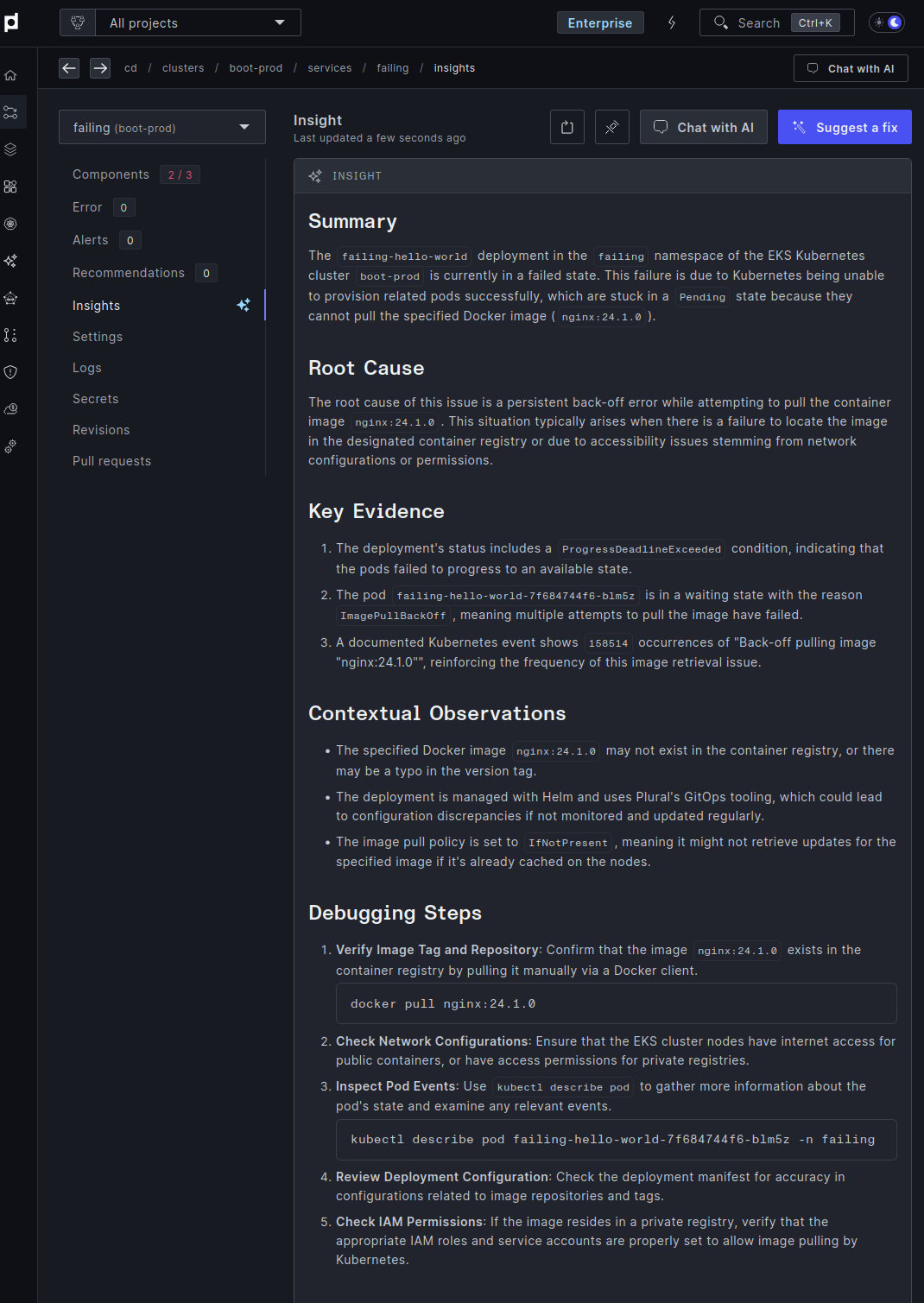

Insights provide detailed information about the identified problem, including a summary, root cause, evidence, and debugging steps, as shown in the screenshot.

Plural AI also suggests fixes and lets you create a PR to apply them.

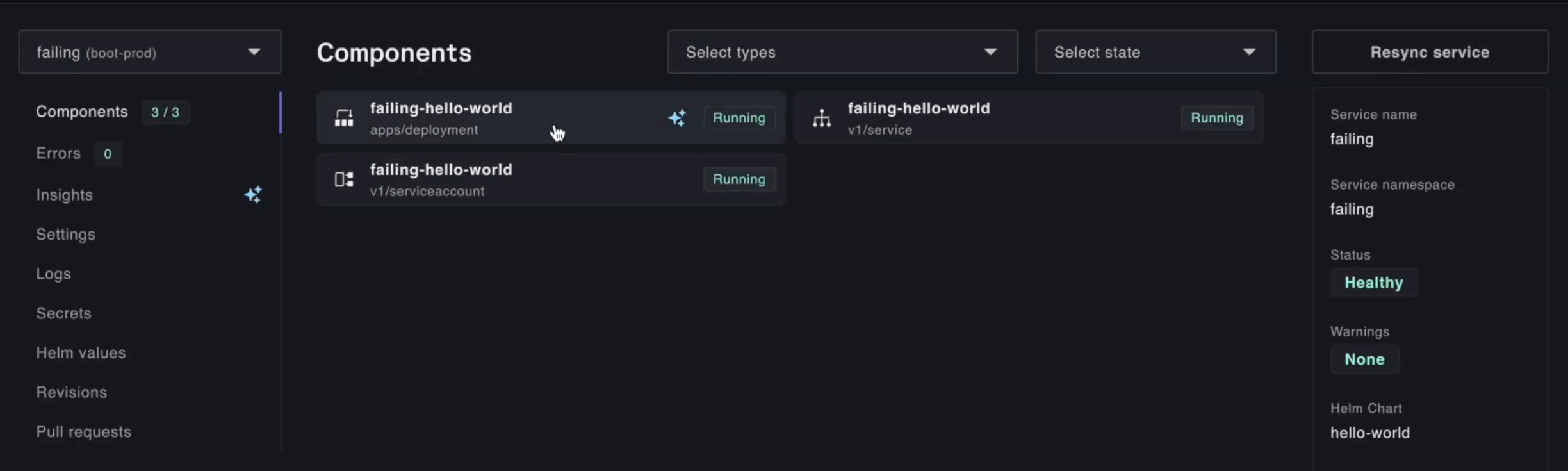

After the PR is merged, Plural CD triggers its deployment automation and applies the changes to the cluster. The failing-hello-world deployment is now running.

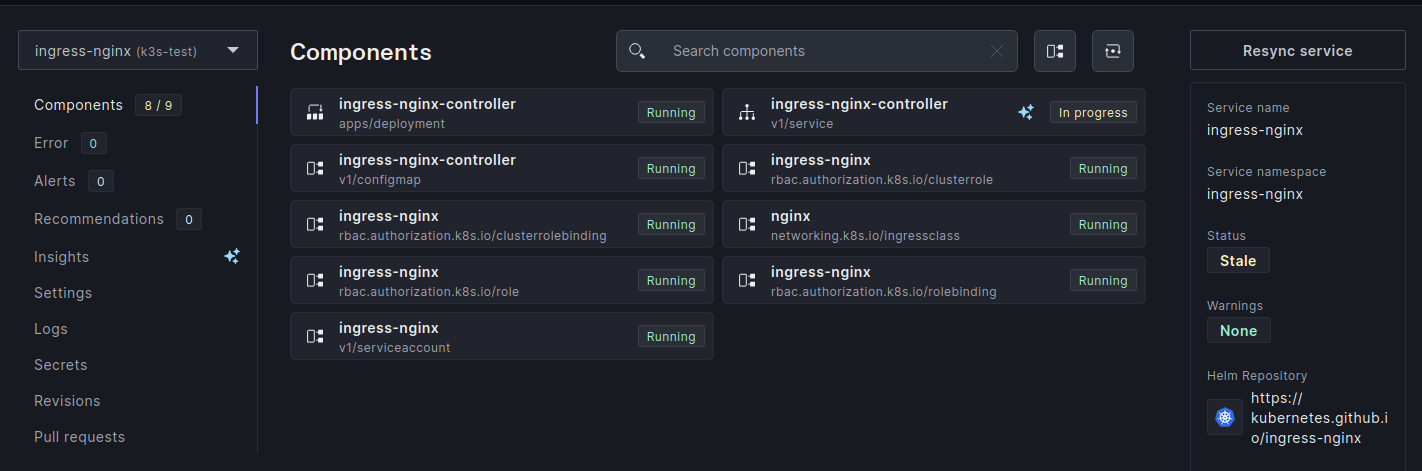

Example 2: Debugging Terraform

Another great use case is debugging Terraform.

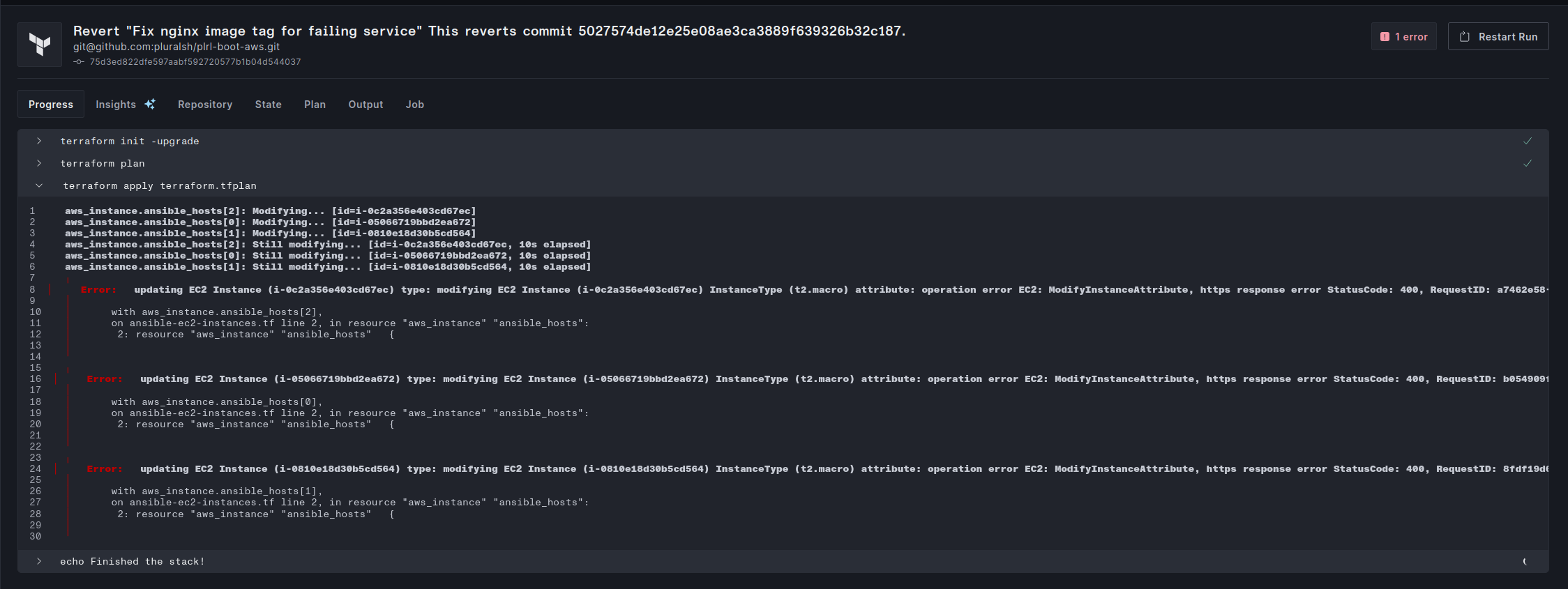

Let’s say that a Terraform stack fails during terraform apply because of an invalid EC2 instance type (t2.macro). AWS returns a 400 error, stopping infrastructure updates.

Terraform logs do contain the critical error message "InvalidParameterValue: The following supplied instance types do not exist: [t2.macro]" and indicate that the problem stems from ansible-ec2-instances.tf, where t2.macro is mistakenly used instead of a valid instance type. Even so, engineers typically struggle to debug these verbose, poorly formatted logs.

As a result, debugging Terraform often takes a lot of time and specialized knowledge. Luckily, LLMs are great at parsing through wordy logs.
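For contrast, a hand-written parser for this one error looks like the Python sketch below. It is not how Plural AI works (Plural relies on an LLM, which generalizes beyond a single pattern); it simply extracts the rejected instance type from the error text quoted above and proposes the closest known name with difflib. The KNOWN_TYPES list is a hypothetical subset, not an authoritative AWS catalog.

```python
from __future__ import annotations

import difflib
import re
from typing import Optional

# Matches the AWS error quoted above, e.g. "...do not exist: [t2.macro]".
INVALID_TYPES = re.compile(r"instance types do not exist: \[([^\]]+)\]")

# Hypothetical, deliberately tiny allow-list; a real check would query AWS.
KNOWN_TYPES = ["t2.micro", "t2.small", "t2.medium", "t3.micro", "t3.small"]


def invalid_instance_types(log: str) -> list[str]:
    """Extract every instance type AWS rejected from raw Terraform output."""
    types: list[str] = []
    for match in INVALID_TYPES.finditer(log):
        types.extend(t.strip() for t in match.group(1).split(","))
    return types


def suggest(bad_type: str) -> Optional[str]:
    """Return the most similar known instance type, or None if nothing is close."""
    matches = difflib.get_close_matches(bad_type, KNOWN_TYPES, n=1)
    return matches[0] if matches else None


log = "InvalidParameterValue: The following supplied instance types do not exist: [t2.macro]"
for bad in invalid_instance_types(log):
    print(bad, "->", suggest(bad))
```

A regex like this breaks as soon as the provider rewords its message, which is exactly where an LLM reading the whole log earns its keep.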

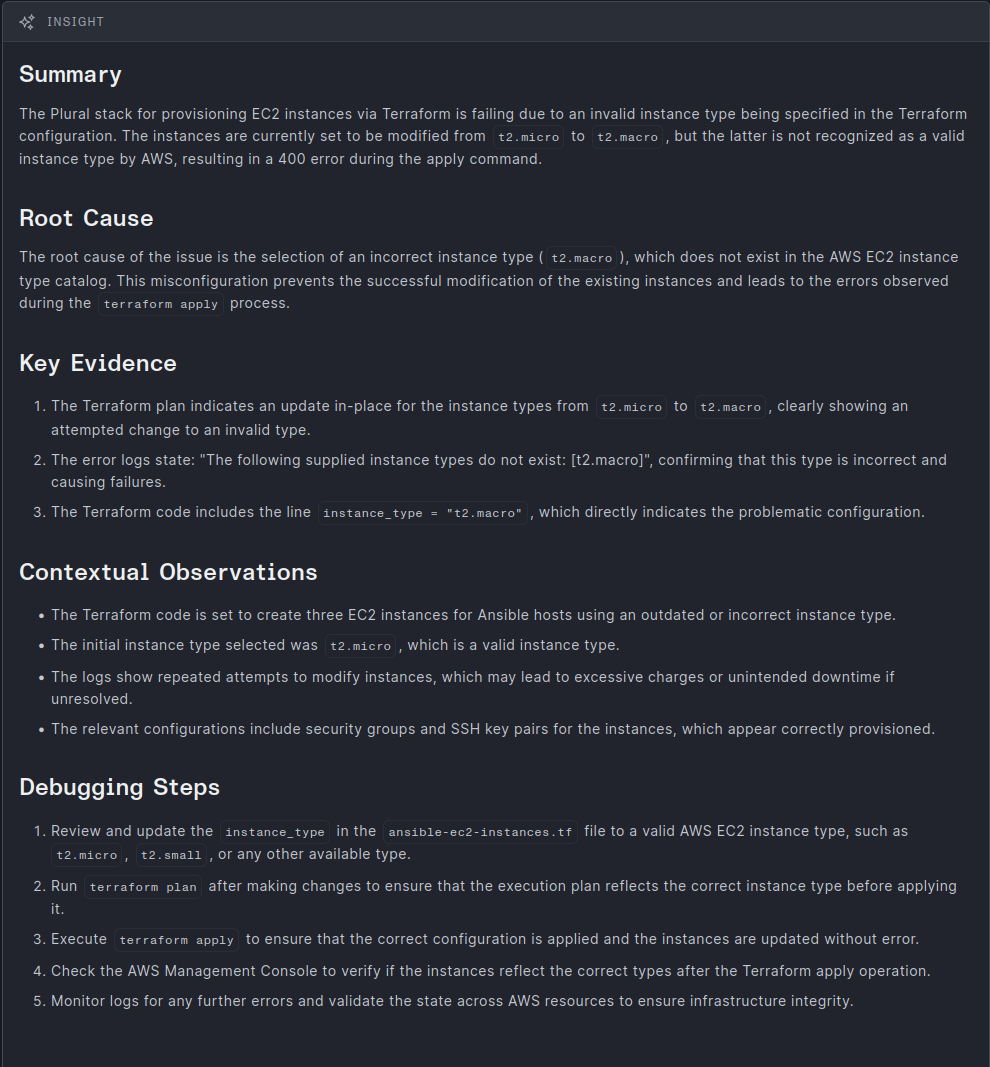

Plural AI detects the issue by analyzing the Terraform logs and identifying the incorrect parameter in ansible-ec2-instances.tf. Insights offers detailed information about the identified problem, including a summary, root cause, evidence, and debugging steps, as shown in the screenshot.

Plural AI locates the correct Git repository, suggests the necessary changes, and automatically creates a PR for us.

Plural Stacks has an integrated PR automation suite. When a PR is created against the tracked Git repository, Plural Stacks triggers a run for that PR and executes terraform plan to confirm the changes are ready to merge.

Plural also posts the resulting plan as a comment on the PR, explained in simple, plain English.
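The PR-triggered flow can be pictured with a small Python sketch. It is hypothetical: the folder layout, the function names, and the rule of planning only when a PR touches the stack's folder are assumptions, not Plural's documented behavior. The flags used (-chdir, -input=false, -no-color, -out) are standard Terraform CLI options.

```python
from __future__ import annotations

from pathlib import PurePosixPath


def touches_stack(changed_files: list[str], stack_folder: str) -> bool:
    """True if any file changed in the PR lives under the stack's tracked folder."""
    root = PurePosixPath(stack_folder)
    # is_relative_to compares path components, so "terraform/aws-old" is not under "terraform/aws".
    return any(PurePosixPath(f).is_relative_to(root) for f in changed_files)


def plan_command(stack_folder: str) -> list[str]:
    """Non-interactive plan with plain-text output, suitable for quoting in a PR comment."""
    return ["terraform", f"-chdir={stack_folder}", "plan",
            "-input=false", "-no-color", "-out=tfplan"]
```

Saving the plan with -out lets a later apply use exactly what reviewers saw in the PR.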

Example 3: Failing Ingress

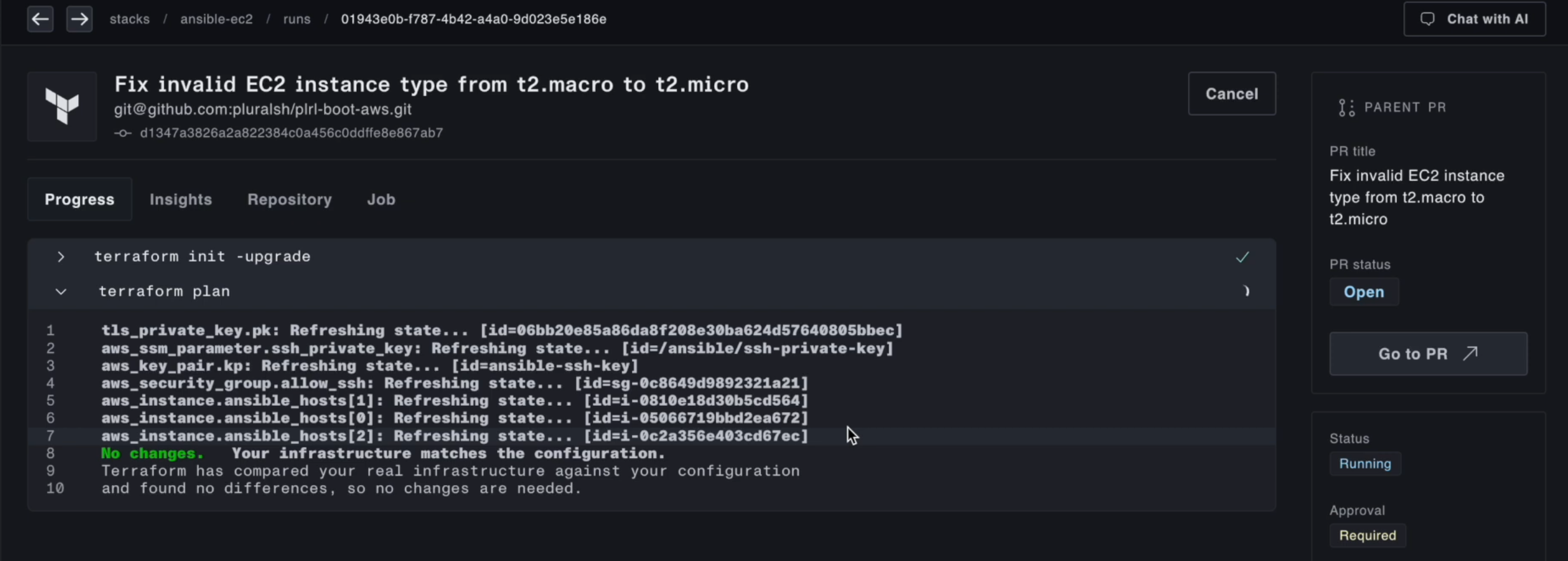

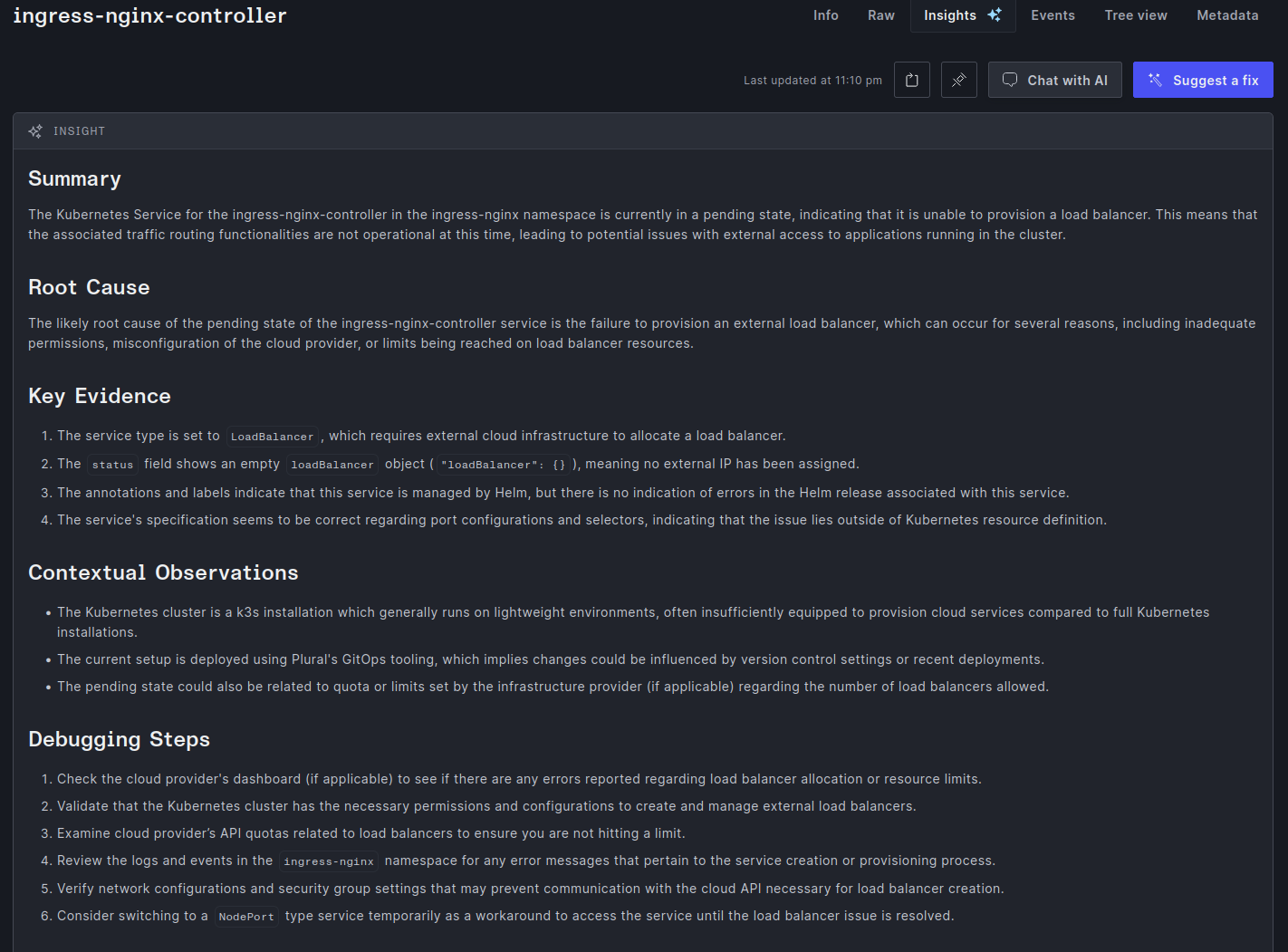

Let’s examine a more complex example. In this case, the ingress-nginx-controller is stuck in a progressing state and never moves forward.

On closer investigation, we find that this ingress service runs on a k3s cluster that needs an external load balancer, and none is configured. Identifying this, however, requires understanding Kubernetes networking and its ecosystem.

In this case, Plural AI automatically reviews all the configurations and identifies the root cause: failure to provision an external load balancer.
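The telltale sign of this failure is a Service of type LoadBalancer whose status.loadBalancer has no ingress entries (kubectl shows its EXTERNAL-IP as pending). The Python sketch below checks for that in the parsed output of kubectl get services -A -o json; the sample data and the ingress-nginx namespace are hypothetical.

```python
from __future__ import annotations


def pending_load_balancers(services: dict) -> list[str]:
    """Return namespace/name of LoadBalancer Services that have no external address yet."""
    pending = []
    for svc in services.get("items", []):
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue  # ClusterIP and NodePort Services never get an external LB address
        ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress")
        if not ingress:
            meta = svc["metadata"]
            pending.append(f'{meta.get("namespace", "default")}/{meta["name"]}')
    return pending


# Hypothetical, trimmed ServiceList: nothing has provisioned an external load balancer.
services = {"items": [
    {"metadata": {"name": "ingress-nginx-controller", "namespace": "ingress-nginx"},
     "spec": {"type": "LoadBalancer"}, "status": {"loadBalancer": {}}},
    {"metadata": {"name": "ingress-nginx-controller-admission", "namespace": "ingress-nginx"},
     "spec": {"type": "ClusterIP"}, "status": {"loadBalancer": {}}},
]}

print(pending_load_balancers(services))
```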

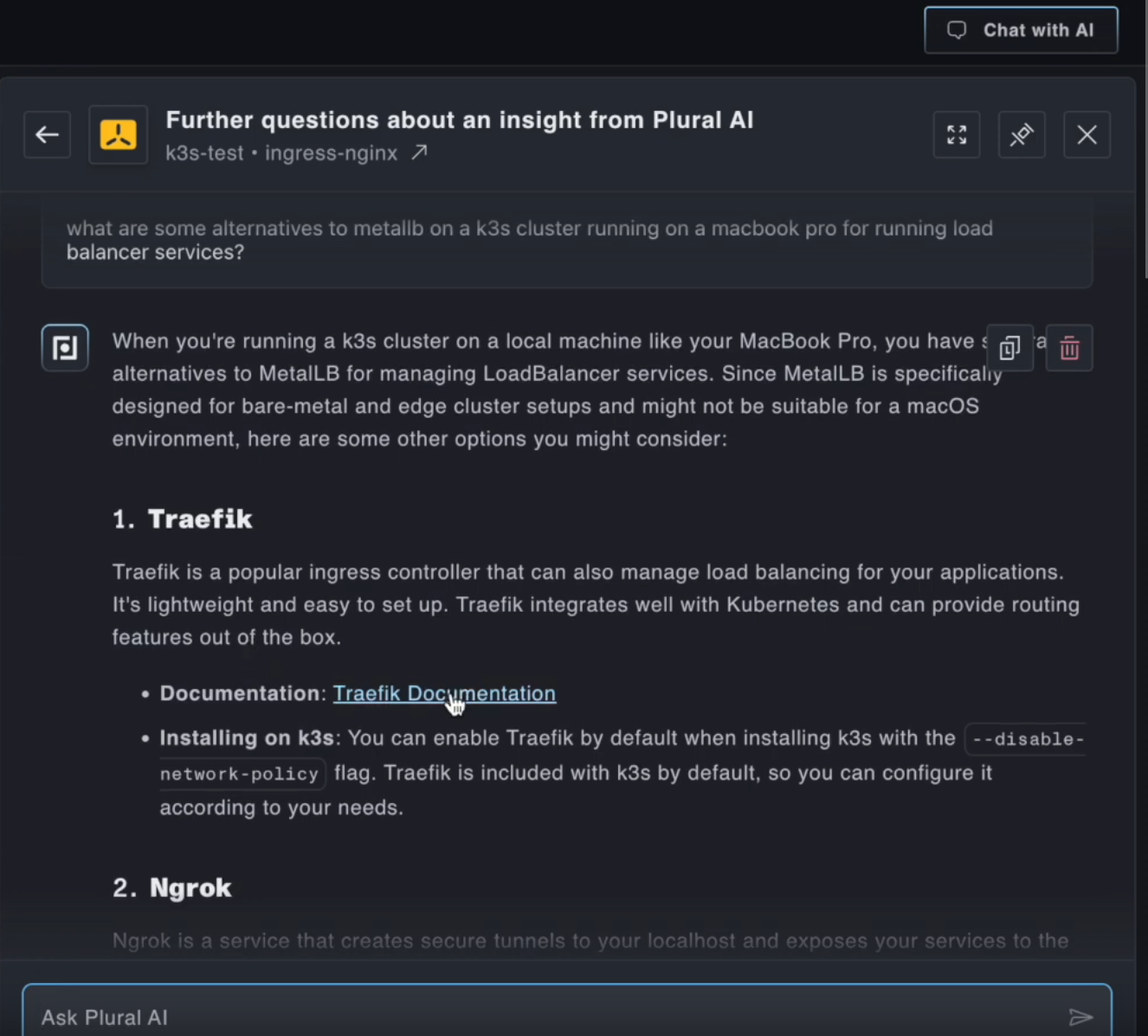

With complex issues like these, we often need to follow an open-ended process instead of a quick-fix PR. Sometimes, we must research and ask follow-up questions. In such situations, Plural AI offers a full chat interface. We can discuss the issue with Plural AI, understand it better, and find the best solutions.

Below is a screenshot of a chat with Plural AI discussing an alternative load balancer to MetalLB.

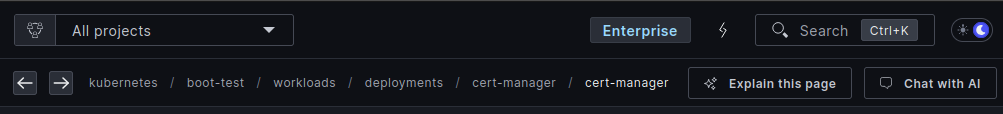

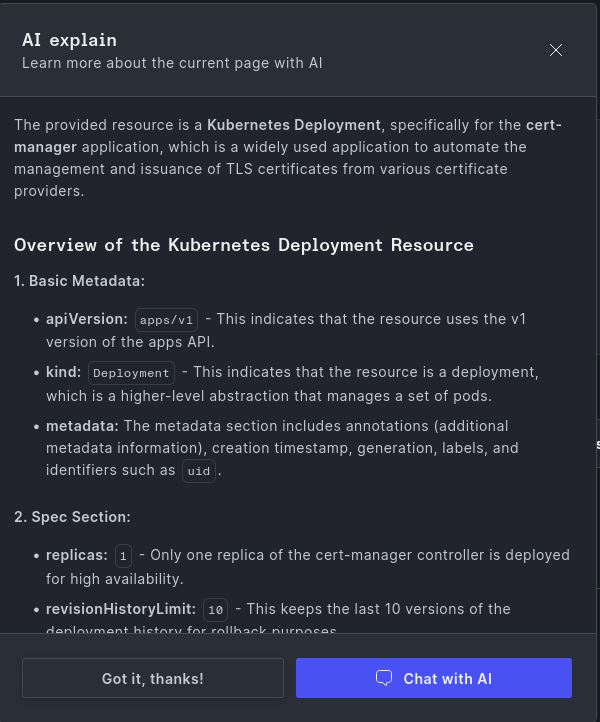

Explain with AI

SRE and platform engineering teams often dedicate substantial time to helping application developers navigate Kubernetes dashboards. To ease this workload, Plural AI offers an Explain this page feature.

This AI-driven feature simplifies Kubernetes dashboards by analyzing the information shown and using an LLM to provide clear, contextual explanations of Kubernetes objects and configurations.

For example, on a cert-manager dashboard, Plural AI identifies cert-manager, retrieves the relevant documentation, and explains its role. By clicking Explain this page, users get an overview of the cert-manager deployments, related resources, and key insights generated automatically by AI.
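Under the hood, a feature like this has to turn what is on screen into context for the model. The Python sketch below is a hypothetical illustration of that step; the PageResource type, the prompt wording, and the function name are assumptions, not Plural's implementation, and no real LLM API is called.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PageResource:
    """One Kubernetes object visible on the current dashboard page (hypothetical shape)."""
    kind: str
    name: str
    namespace: str
    status: str


def explain_prompt(page_title: str, resources: list[PageResource], docs_excerpt: str = "") -> str:
    """Assemble on-screen context and optional documentation into a single LLM prompt."""
    lines = [f"Explain this Kubernetes dashboard page to an application developer: {page_title}",
             "", "Resources shown:"]
    lines += [f"- {r.kind} {r.namespace}/{r.name} (status: {r.status})" for r in resources]
    if docs_excerpt:
        lines += ["", "Relevant documentation:", docs_excerpt]
    lines += ["", "Describe what each resource does and flag anything that looks unhealthy."]
    return "\n".join(lines)
```

Grounding the prompt in the exact objects on the page is what keeps the explanation specific rather than generic.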

Managing infrastructure also involves answering many internal questions. Plural AI makes this more manageable with a complete chat feature, which shifts internal support to LLMs. This feature lets you quickly get clear explanations of complex configurations, reducing the burden on your team and allowing them to focus on more strategic tasks.

You can also combine the full chat feature with Explain this page.

Plural AI integrates with Plural's permission structure for secure access, and Plural’s agent-based architecture further enhances security with egress-only communication.
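Egress-only means the in-cluster agent always initiates the connection, so no inbound port has to be opened to the cluster. The Python sketch below illustrates that pull-based pattern under stated assumptions: fetch_task, execute, and report are hypothetical stand-ins for outbound HTTPS calls to a management plane, and Plural's actual agent protocol is not described here.

```python
from __future__ import annotations

import time
from typing import Callable, Optional

Task = dict


def run_agent(fetch_task: Callable[[], Optional[Task]],
              execute: Callable[[Task], str],
              report: Callable[[Task, str], None],
              max_polls: int,
              interval_s: float = 0.0) -> int:
    """Pull-based agent loop; returns the number of tasks executed.

    The agent only makes outbound calls: it asks for work, runs it locally
    inside the cluster, then pushes the result back out.
    """
    done = 0
    for _ in range(max_polls):
        task = fetch_task()            # outbound: "any work for me?"
        if task is not None:
            report(task, execute(task))  # outbound: upload the result
            done += 1
        time.sleep(interval_s)
    return done
```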

You can learn more about Plural AI in this demo and in the Plural docs.

Future Roadmap

Plural continuously enhances its AI capabilities to provide smarter, more proactive support for application and infrastructure teams. Here’s what’s coming next:

- App Code-Level Troubleshooting: AI-driven debugging will extend beyond infrastructure to application code, integrating with observability tools like Prometheus and logging solutions like Elasticsearch. This will provide automated insights for app-level issues, similar to how AI currently diagnoses infrastructure problems.

- Cost Optimization & Automated Fixes: AI-powered cost recommendations will leverage data from Kubecost and other cost-tracking tools. Integrated with code modification capabilities, Plural will automatically generate PRs for cost-saving changes, such as scaling optimizations and resource right-sizing.

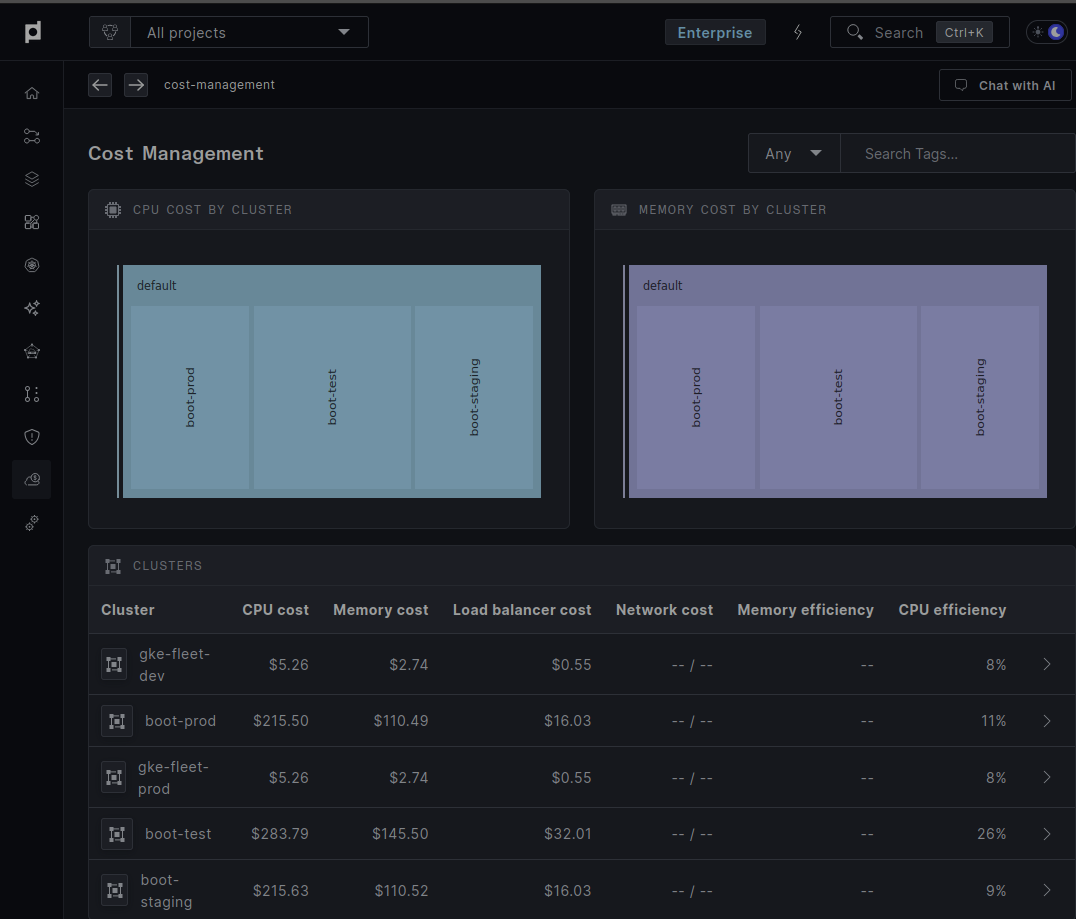

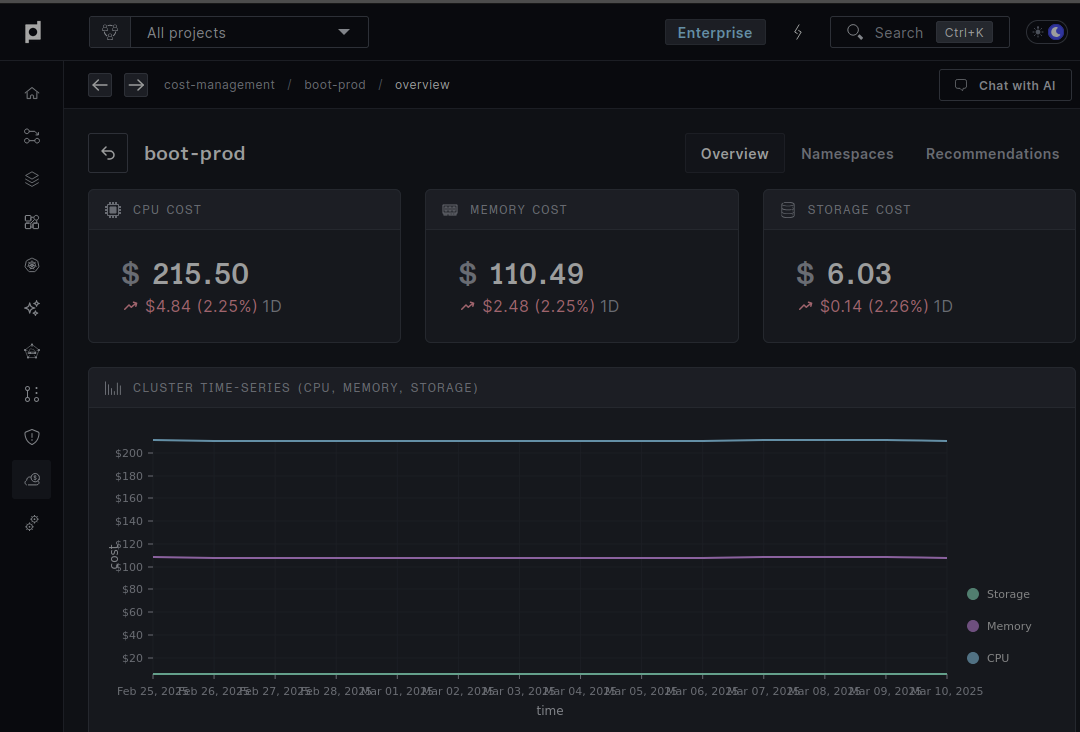

These screenshots show the cost-optimization dashboard.

The image below shows how the cost-management feature calculates your resource costs and presents them in a clear graphical view.

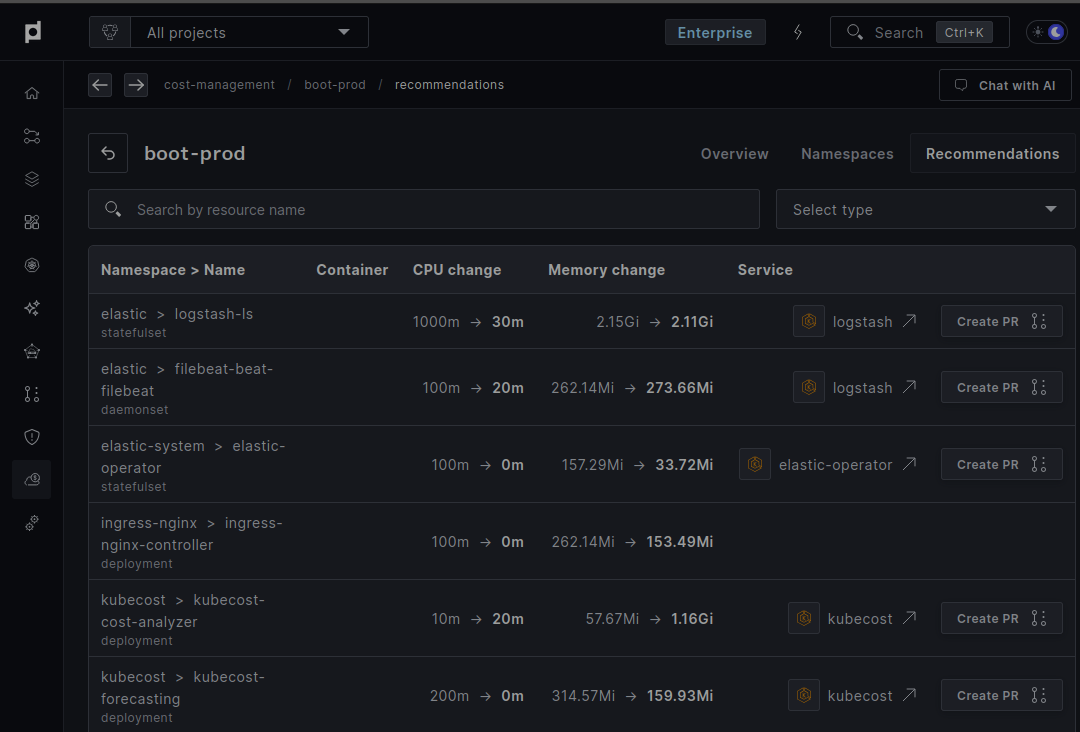
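Conceptually, a cost view like this multiplies what each workload requests by a unit price. The Python sketch below shows that arithmetic under stated assumptions: the per-CPU-hour and per-GB-hour prices are hypothetical placeholders (not figures from Plural or Kubecost), 730 hours approximates one month, and it prices requests rather than actual usage, which is exactly the gap right-sizing targets.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical unit prices; real tools derive these from cloud billing data.
CPU_CORE_HOUR_USD = 0.031
MEMORY_GB_HOUR_USD = 0.004


@dataclass
class Workload:
    name: str
    replicas: int
    cpu_request_cores: float
    memory_request_gb: float


def monthly_cost(w: Workload, hours: float = 730.0) -> float:
    """Cost of the resources a workload requests (not what it actually uses)."""
    per_replica_hour = (w.cpu_request_cores * CPU_CORE_HOUR_USD
                        + w.memory_request_gb * MEMORY_GB_HOUR_USD)
    return round(per_replica_hour * w.replicas * hours, 2)
```

For example, three replicas requesting 1 core and 2 GB each come to roughly 3 × (0.031 + 0.008) × 730 ≈ $85 per month at these placeholder prices.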

Based on resource utilization and other logs, Plural AI recommends cost-saving changes and can open a PR for them with one click.
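The post does not spell out how these recommendations are computed. As an assumed, commonly used heuristic (not necessarily Plural's), the Python sketch below sets a new CPU request to the 95th-percentile observed usage plus 20% headroom, and skips changes under 10% so trivial tweaks do not turn into PRs. The function names and thresholds are hypothetical.

```python
from __future__ import annotations

import math
from typing import Optional


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def recommend_request(samples: list[float], current_request: float,
                      pct: float = 95.0, headroom: float = 0.2,
                      min_change: float = 0.1) -> Optional[float]:
    """Suggest a new request (p95 usage plus headroom), or None if the change is too small."""
    target = percentile(samples, pct) * (1 + headroom)
    if abs(target - current_request) / current_request < min_change:
        return None  # close enough to the current request; not worth a PR
    return round(target, 3)


# Hypothetical CPU usage samples (cores) for a pod that requests a full core.
usage = [0.10, 0.12, 0.15, 0.11, 0.20, 0.13, 0.14, 0.12, 0.18, 0.16]
print(recommend_request(usage, current_request=1.0))
```

Using a high percentile rather than the mean keeps short bursts from being throttled, while the headroom absorbs growth between reviews.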

These new features will reduce manual effort, improve efficiency, and drive cost-effective scaling.

Conclusion

Large Language Models excel at analyzing vast amounts of unstructured data and explaining complex technical concepts in accessible terms. Plural AI strategically harnesses these capabilities while providing a crucial advantage: comprehensive real-time telemetry and configuration data from your infrastructure.

Unlike generic AI solutions, Plural AI continuously collects detailed metrics, logs, and configuration information from your Kubernetes clusters and Terraform deployments, feeding this rich contextual data directly to its language models. This infrastructure-aware approach enables Plural AI to deliver highly specific, accurate diagnostics and solutions rather than general advice.

By combining advanced language processing with precise, real-time knowledge of your actual environment, Plural AI transforms Kubernetes and Terraform maintenance, operations, and troubleshooting from specialized tasks into accessible workflows that any developer can navigate confidently, without becoming a platform engineering expert.

Ready to take your Kubernetes journey to the next level? Request a Demo
