Upgrading Multi-Cluster Kubernetes Setups with Plural



Kubernetes has become the de facto standard for container orchestration, simplifying application management at scale. However, managing Kubernetes clusters themselves remains complex and demanding.

In particular, with new Kubernetes versions released every four months, keeping clusters up to date is a recurring task. Upgrades are crucial for the following reasons:

- Security: Staying on supported versions is essential to protecting workloads from known vulnerabilities. For example, CVE-2021-25741 was a kubelet flaw that let a container use subPath volume mounts to reach files outside its volume, including on the host filesystem. It was patched in v1.22.2 and v1.21.5, with backports to older supported branches. Clusters that have not been upgraded to a patched release remain exposed.

- Compliance: For many organizations, especially those handling sensitive data or government workloads, Kubernetes version compliance isn't optional; it's a regulatory requirement. Standards like FedRAMP mandate staying within recent Kubernetes versions, typically requiring clusters to run either the latest version (n) or one to two versions behind (n-1 or n-2). Organizations therefore can't simply stay on older, stable versions. They need reliable processes to upgrade their clusters continuously while minimizing disruption to production workloads.

- New Features and Enhancements: Each Kubernetes release introduces performance improvements, feature updates, and new APIs, enabling teams to optimize their infrastructure and workflows.

- Compatibility: Keeping clusters on supported versions ensures smooth operation with third-party tools, cloud providers, and other critical components of the Kubernetes ecosystem.

While upgrading a single cluster may seem manageable, the complexity grows with every cluster you add. This post covers the challenges of multi-cluster Kubernetes upgrades, shares best practices, and shows how Plural can streamline the process.

Challenges in Multi-Cluster Kubernetes Upgrades

Multi-cluster upgrades demand meticulous planning and execution. Administrators need to review release notes, validate compatibility across Kubernetes components, and address breaking API changes—all while minimizing downtime and mitigating risks. The larger the scale, the more time-consuming and error-prone the process becomes. Some of the challenges include:

Managing API Deprecation

Kubernetes evolves rapidly, often deprecating older APIs. For example, the migration of the Ingress resource from networking.k8s.io/v1beta1 to networking.k8s.io/v1 caused significant disruptions when v1beta1 was removed in v1.22, leading to failed ingress definitions and downtime for external-facing applications in many clusters.

Imagine managing 30 clusters where each environment uses varying versions of ingress manifests. Manually auditing and updating hundreds of these manifests across all clusters can take weeks, delaying critical upgrades.
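The following minimal Python sketch shows the kind of check that can replace manual review. It assumes the manifests for each cluster have already been loaded into dictionaries. A YAML parser would normally do that step, but it is left out here to keep the sketch dependency-free. The removal table covers only a few well-known upstream removals. `REMOVED_APIS`, `find_removed_apis`, and the sample fleet are illustrative names and are not part of any existing tool.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple

# Illustrative subset of upstream removals:
# (apiVersion, kind) -> ((major, minor) that stopped serving it, replacement apiVersion or None)
REMOVED_APIS = {
    ("extensions/v1beta1", "Ingress"): ((1, 22), "networking.k8s.io/v1"),
    ("networking.k8s.io/v1beta1", "Ingress"): ((1, 22), "networking.k8s.io/v1"),
    ("apiextensions.k8s.io/v1beta1", "CustomResourceDefinition"): ((1, 22), "apiextensions.k8s.io/v1"),
    ("batch/v1beta1", "CronJob"): ((1, 25), "batch/v1"),
    # PodSecurityPolicy has no successor API; Pod Security Admission replaces it.
    ("policy/v1beta1", "PodSecurityPolicy"): ((1, 25), None),
}


def parse_minor(version: str) -> Tuple[int, int]:
    """Turn 'v1.22' or '1.22.4' into (1, 22)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


@dataclass
class Finding:
    cluster: str
    name: str
    api_version: str
    kind: str
    removed_in: str
    replacement: Optional[str]


def find_removed_apis(cluster: str, manifests: Iterable[dict], target: str) -> List[Finding]:
    """List manifests whose apiVersion/kind is no longer served at the target version."""
    target_minor = parse_minor(target)
    findings = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        if key not in REMOVED_APIS:
            continue
        removed_in, replacement = REMOVED_APIS[key]
        if target_minor >= removed_in:
            findings.append(Finding(
                cluster=cluster,
                name=manifest.get("metadata", {}).get("name", "<unnamed>"),
                api_version=key[0],
                kind=key[1],
                removed_in=f"v{removed_in[0]}.{removed_in[1]}",
                replacement=replacement,
            ))
    return findings


if __name__ == "__main__":
    # Hypothetical fleet: two clusters with slightly different ingress manifests.
    fleet = {
        "prod-eu-1": [{"apiVersion": "networking.k8s.io/v1beta1", "kind": "Ingress", "metadata": {"name": "web"}}],
        "prod-us-1": [{"apiVersion": "networking.k8s.io/v1", "kind": "Ingress", "metadata": {"name": "web"}}],
    }
    for cluster, manifests in fleet.items():
        for f in find_removed_apis(cluster, manifests, "v1.22"):
            print(f"{f.cluster}: {f.kind}/{f.name} uses {f.api_version}, removed in {f.removed_in}; "
                  f"migrate to {f.replacement or 'a supported alternative'}")
```

Running this across every cluster produces a single report instead of weeks of reading YAML. The removal table still has to be kept current from the upstream deprecation guide.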

Add-on/Controller Compatibility

One of the most significant challenges in multi-cluster upgrades is ensuring compatibility for add-ons and controllers. These components, often called shadow tech, are installed once and seldom revisited, yet they play a critical role as cluster-level services.

Why Is It a Big Deal?

Add-ons like ingress controllers, cert-manager, and monitoring tools are the backbone of a Kubernetes cluster. If they fail during an upgrade, the impact can ripple across all workloads in the cluster, potentially causing widespread downtime.

Challenges Engineers Face When Managing Add-ons

- Shadow Tech Risks: Add-ons are frequently treated as set-and-forget components. Once installed, they’re rarely reviewed until something goes wrong. This means compatibility issues often surface only during an upgrade.

- Repetition Across Clusters: The upgrade procedure must be repeated for every cluster in a multi-cluster setup. If you manage 30 clusters, this means 30 rounds of:

  - Checking what's installed.

  - Verifying compatibility with the new Kubernetes version.

  - Upgrading and testing each add-on.

- Manual Tracking: There’s no built-in mechanism in Kubernetes to track installed versions of add-ons. Engineers must manually document add-ons, refer to documentation, and ensure compatibility. For example:

  - Upgrading Kubernetes to v1.22 requires ingress controllers using extensions/v1beta1 to switch to networking.k8s.io/v1.

  - Cert-manager versions below v1.0 break with Kubernetes v1.22 due to API deprecations.

- Cluster-Level Impact: Since add-ons operate at the cluster level, their failure can break the entire cluster. This is especially problematic in production environments, where downtime is costly.
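Kubernetes does not track add-on versions for you, so even a simple fleet-wide inventory helps. The sketch below assumes you have already collected a mapping of add-on name to installed version for each cluster, for example from Helm release metadata. It then groups clusters by version and flags add-ons that have drifted. The function names and sample versions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

Inventory = Dict[str, Dict[str, List[str]]]


def addon_inventory(clusters: Dict[str, Dict[str, str]]) -> Inventory:
    """Group clusters by add-on and installed version.

    `clusters` maps cluster name -> {add-on name: installed version}.
    The result maps add-on -> version -> sorted list of clusters running it.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for cluster, addons in clusters.items():
        for addon, version in addons.items():
            grouped[addon][version].append(cluster)
    # Convert to plain dicts with sorted cluster lists for stable, readable output.
    return {
        addon: {version: sorted(names) for version, names in versions.items()}
        for addon, versions in grouped.items()
    }


def drifted_addons(inventory: Inventory) -> List[str]:
    """Add-ons installed at more than one version across the fleet."""
    return sorted(addon for addon, versions in inventory.items() if len(versions) > 1)


if __name__ == "__main__":
    # Hypothetical versions, for illustration only.
    fleet = {
        "eks-prod": {"cert-manager": "v1.12.0", "ingress-nginx": "4.7.1"},
        "gke-prod": {"cert-manager": "v1.13.0", "ingress-nginx": "4.7.1"},
    }
    inv = addon_inventory(fleet)
    print(inv["cert-manager"])  # {'v1.12.0': ['eks-prod'], 'v1.13.0': ['gke-prod']}
    print(drifted_addons(inv))  # ['cert-manager']
```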

Different Configurations/Cloud Providers

When managing multiple Kubernetes clusters, each cluster may have different configurations or be hosted on various cloud providers. These variances add complexity to the upgrade process, as the same version of Kubernetes might behave differently depending on the underlying infrastructure.

For example, consider a scenario with clusters on AWS and on-prem infrastructure. Each environment may have different networking, storage, or authentication requirements that could affect the upgrade.

- AWS: You might use Amazon EKS with managed node groups that have specific default configurations (e.g., VPC settings, IAM roles) and specific AMI (Amazon Machine Image) versions.

- On-prem: An on-prem Kubernetes cluster, by contrast, could have custom networking settings or specific storage configurations (e.g., NFS or Ceph) that require special handling during an upgrade.

A simple change to the Kubernetes version on one provider might translate differently to another, making it crucial to manage these variations carefully.

Dev/Prod Environments

Many organizations run multiple environments, such as development (dev), staging, and production (prod), and these often run different versions of Kubernetes. While the goal is to keep them as similar as possible, discrepancies between environments are common.

For example, a development environment might run Kubernetes v1.21 while the production environment runs v1.18. This difference can lead to several issues during upgrades:

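- API Compatibility: Kubernetes APIs can be introduced, deprecated, or removed between versions. A manifest that works in dev may use an API version that prod does not serve yet (for example, networking.k8s.io/v1 Ingress is only available from v1.19). Prod may also rely on API versions that newer releases have deprecated. Either case can lead to unexpected errors during upgrades or after deployment.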

- Resource Limits: Development clusters might be configured with lower resource limits (CPU/memory) than production environments, causing discrepancies in behavior after upgrading. A workload that works fine in development might crash after the upgrade due to resource allocation changes.
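A small script can catch the API problem before it causes errors. The sketch below assumes that control planes are upgraded one minor version at a time. Under that rule, prod on v1.18 must pass through v1.19 and v1.20 to reach dev's v1.21. The sketch also checks whether a given apiVersion/kind pair is served at a particular version. The lifetime table is a small illustrative subset, and `upgrade_path` and `is_served` are hypothetical helpers.

```python
from typing import Dict, List, Optional, Tuple

Minor = Tuple[int, int]

# Illustrative subset: (apiVersion, kind) -> (first version serving it, version that removed it or None)
API_LIFETIMES: Dict[Tuple[str, str], Tuple[Minor, Optional[Minor]]] = {
    ("networking.k8s.io/v1beta1", "Ingress"): ((1, 14), (1, 22)),
    ("networking.k8s.io/v1", "Ingress"): ((1, 19), None),
    ("batch/v1beta1", "CronJob"): ((1, 8), (1, 25)),
    ("batch/v1", "CronJob"): ((1, 21), None),
}


def parse_minor(version: str) -> Minor:
    """Turn 'v1.21' or '1.21.3' into (1, 21)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def upgrade_path(current: str, target: str) -> List[str]:
    """Minor versions to step through, one at a time, from current to target."""
    cur_major, cur_minor = parse_minor(current)
    tgt_major, tgt_minor = parse_minor(target)
    if cur_major != tgt_major or tgt_minor < cur_minor:
        raise ValueError("only forward upgrades within one major version are handled")
    return [f"v{cur_major}.{m}" for m in range(cur_minor + 1, tgt_minor + 1)]


def is_served(api_version: str, kind: str, version: str) -> bool:
    """True if the API server at `version` serves this apiVersion/kind."""
    introduced, removed = API_LIFETIMES[(api_version, kind)]
    v = parse_minor(version)
    return introduced <= v and (removed is None or v < removed)


if __name__ == "__main__":
    print(upgrade_path("v1.18", "v1.21"))  # ['v1.19', 'v1.20', 'v1.21']
    # An Ingress written against dev (v1.21) is not served by prod (v1.18).
    print(is_served("networking.k8s.io/v1", "Ingress", "v1.21"))  # True
    print(is_served("networking.k8s.io/v1", "Ingress", "v1.18"))  # False
```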

Maintain Uptime During Upgrades

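Upgrading nodes without downtime takes careful planning. The control plane is upgraded first. The kubelet on a node must never be newer than the API server, but it may lag behind by a limited number of minor versions, so nodes can be rolled gradually afterward. It’s also common practice to keep the node’s OS or VM image one or two versions behind the latest during upgrades to ensure stability.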

Before a node is upgraded, it is typically cordoned so that no new pods are scheduled onto it. It is then drained, which evicts its pods so they are rescheduled onto other healthy nodes. If you skip this step, the pods go down with the node during the upgrade, causing application disruption and service downtime.

In a multi-cluster environment, this task becomes much more complex. With dozens of clusters and hundreds of nodes, managing the rotation of nodes and ensuring workloads are properly redistributed can quickly become a daunting challenge.
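Kubernetes uses PodDisruptionBudgets to express how many replicas must stay up. `kubectl drain` evicts pods through the Eviction API, which refuses any eviction that would violate a budget. The sketch below models that check in plain Python to show why node rotation has to be sequenced. It assumes a simple map from each node to the app labels of its running pods, plus a minAvailable value per app. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Placement = Dict[str, List[str]]  # node name -> app label of each pod running there


def can_drain(node: str, placement: Placement, min_available: Dict[str, int]) -> bool:
    """Would evicting every pod on `node` keep each app at or above its minAvailable?"""
    total = Counter(app for pods in placement.values() for app in pods)
    on_node = Counter(placement.get(node, []))
    return all(total[app] - count >= min_available.get(app, 0) for app, count in on_node.items())


def classify_nodes(placement: Placement, min_available: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Split nodes into those that can be drained right now and those that would break a budget.

    Each "safe" node is safe on its own; drain them one at a time and let evicted
    pods become ready elsewhere before re-running the check.
    """
    safe, blocked = [], []
    for node in sorted(placement):
        (safe if can_drain(node, placement, min_available) else blocked).append(node)
    return safe, blocked


if __name__ == "__main__":
    placement = {"node-a": ["web", "web"], "node-b": ["web", "api"], "node-c": ["api"]}
    budgets = {"web": 2, "api": 1}
    print(classify_nodes(placement, budgets))  # (['node-b', 'node-c'], ['node-a'])
```

In this example, node-a is blocked because draining it would leave only one web pod against a budget of two. You would need to add capacity, or drain the other nodes first and let the pods reschedule, before rotating node-a.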

Managing Cluster Upgrades the Old-Fashioned Way

Many teams rely on tribal knowledge passed between a few engineers or sift through outdated documentation to navigate Kubernetes upgrades. For instance, identifying deprecated APIs often involves manually reviewing YAML files across multiple clusters.

While these methods may suffice for smaller setups, they don't scale to larger, multi-cluster environments. What’s needed is a simpler, more reliable way to handle Kubernetes upgrades: one that minimizes manual effort and keeps up with the demands of modern infrastructure.

Streamline Upgrades with Plural

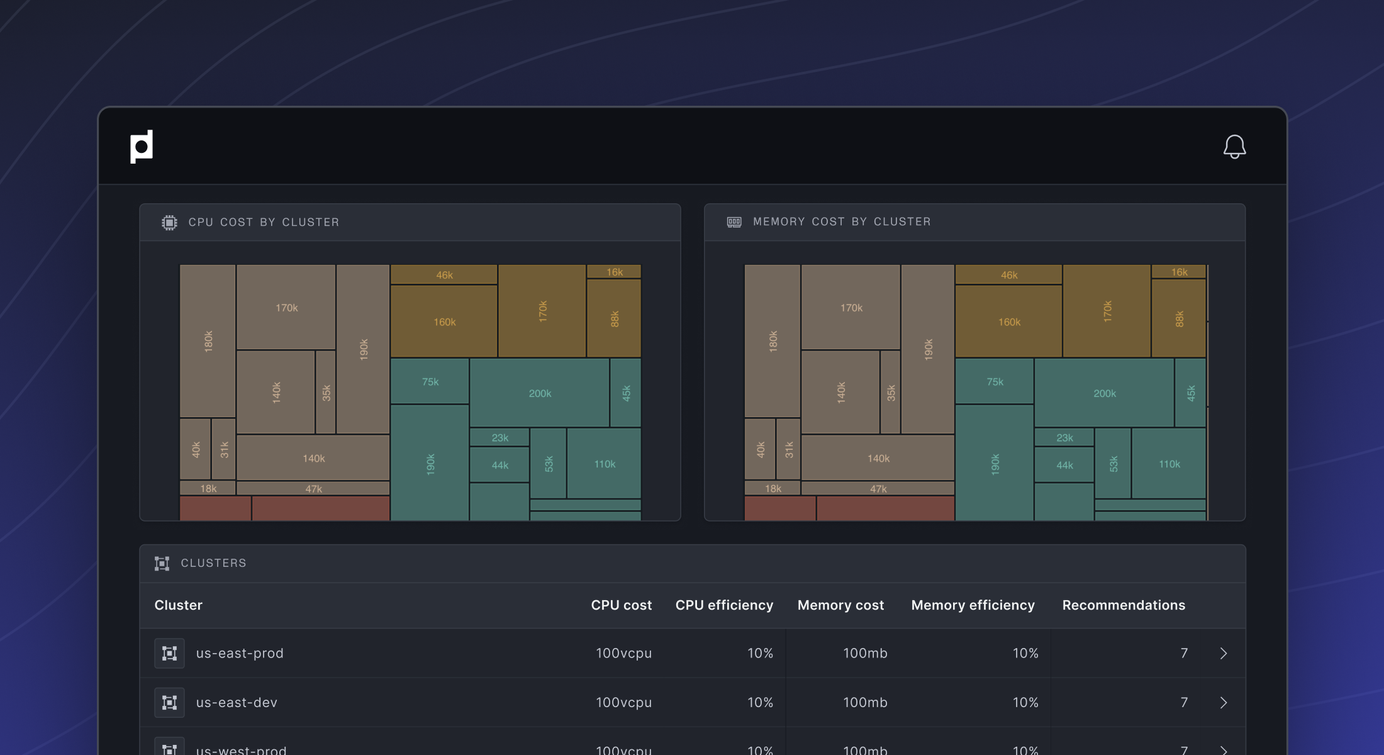

Plural is the Kubernetes management platform for modern teams.

Plural’s architecture consists of two components. The first is a control plane installed on the Kubernetes management cluster. The second is a deployment agent installed on each workload cluster, where it runs as a pod. The diagram below shows the Plural architecture; you can find more about it in Plural’s docs.

How does Plural help with upgrades?

Plural simplifies cluster upgrades with several features.

Pre-Flight Check

Plural’s Pre-Flight Check feature verifies that the API and controller versions in use are compatible with the target Kubernetes version. As a result, incompatibilities surface before the upgrade instead of breaking the cluster afterward.

This pre-flight check consists of three steps:

- Check API deprecation: Plural identifies APIs slated for removal using two methods:

  - GitOps Integration: Plural integrates with your GitOps workflows to flag deprecated resources directly in your manifests. For instance, if your manifests include a PodSecurityPolicy, which is removed in Kubernetes v1.25, Plural highlights it and suggests what to migrate to.

  - Cloud Provider Exports: Plural scrapes exports from cloud platforms like AWS to detect processes that might still rely on deprecated APIs.

- Check add-on compatibility: Controller updates often lag behind Kubernetes releases, leading to compatibility issues. Plural tackles this by scraping release notes and documentation to map add-on versions to the Kubernetes versions they support. Below is the version matrix Plural generates by scraping the documentation for each controller. The matrix indicates which controller versions are compatible with specific Kubernetes versions, and any incompatible version is flagged as blocking.

- Check add-on mutual incompatibility (under development): This feature will detect compatibility issues between the controllers themselves. It will help prevent API conflicts and ensure that all cluster-level services interact correctly after an upgrade.

By automating these steps, Plural eliminates much of the manual effort involved in upgrade planning, presenting all the compatibility information in one place for easy reference.
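At its core, the add-on compatibility step is a lookup against a version matrix. The sketch below performs that lookup against a hypothetical matrix; the add-on names and version ranges are placeholders, not real compatibility data. For a target Kubernetes version, it reports each installed add-on as ok, blocking, or unknown. It can also return the lowest listed add-on version that would unblock the upgrade. This is an illustration of the idea, not Plural's implementation.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical matrix: add-on -> rows of (add-on version, oldest supported k8s, newest supported k8s),
# listed from oldest to newest add-on version.
COMPAT_MATRIX: Dict[str, List[Tuple[str, str, str]]] = {
    "example-ingress": [("2.0.0", "v1.19", "v1.24"), ("3.0.0", "v1.22", "v1.28")],
    "example-certs": [("1.4.0", "v1.16", "v1.21"), ("1.5.0", "v1.16", "v1.22")],
}


def parse_minor(version: str) -> Tuple[int, int]:
    """Turn 'v1.22' or '1.22.4' into (1, 22)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def supports(lo: str, hi: str, target: str) -> bool:
    return parse_minor(lo) <= parse_minor(target) <= parse_minor(hi)


def check_addons(installed: Dict[str, str], target: str) -> Dict[str, str]:
    """Map each installed add-on to 'ok', 'blocking', or 'unknown' for the target version."""
    status = {}
    for addon, version in installed.items():
        row = next((r for r in COMPAT_MATRIX.get(addon, []) if r[0] == version), None)
        if row is None:
            status[addon] = "unknown"  # not in the matrix: needs a manual check
        else:
            status[addon] = "ok" if supports(row[1], row[2], target) else "blocking"
    return status


def suggest_upgrade(addon: str, target: str) -> Optional[str]:
    """Lowest listed add-on version that supports the target, if any."""
    for version, lo, hi in COMPAT_MATRIX.get(addon, []):
        if supports(lo, hi, target):
            return version
    return None


if __name__ == "__main__":
    installed = {"example-ingress": "2.0.0", "example-certs": "1.4.0"}
    print(check_addons(installed, "v1.22"))  # {'example-ingress': 'ok', 'example-certs': 'blocking'}
    print(suggest_upgrade("example-certs", "v1.22"))  # 1.5.0
```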

Upgrading the Cluster

Once the pre-flight check shows what needs to be upgraded, it’s time to perform the upgrade.

With Plural Stacks, you define a Git repository that holds your Terraform or Ansible files, along with the cluster it targets, and Plural subscribes to that repository.

As new changes are committed, Plural automatically runs a Terraform or Ansible workflow on that commit, as shown in the image below.

Once the terraform init -upgrade command finishes, the terraform plan command runs and generates an upgrade plan. This plan shows the changes that will be made to the infrastructure. Plural lets you approve those changes manually or automatically.

Once the plan is approved, Plural runs the terraform apply command and upgrades the cluster.
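The Stack run described above follows the standard Terraform init, plan, and apply cycle. The Python sketch below reproduces that cycle for a single commit. Approval is modeled as a callback, so it can be a human decision or an automatic yes. The Terraform flags used are real CLI flags (`-upgrade`, `-input=false`, `-out`, `-detailed-exitcode`). The `run_stack` function and its status strings are hypothetical and do not reflect how Plural implements Stacks.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Run a command in the current working directory and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def run_stack(approve: Callable[[], bool], runner: Runner = run) -> str:
    """Sketch of an init -> plan -> approve -> apply flow for one commit."""
    # -upgrade lets init pick up newer provider and module versions allowed by constraints.
    if runner(["terraform", "init", "-upgrade", "-input=false"]) != 0:
        return "init failed"
    # With -detailed-exitcode, plan exits 0 for no changes, 1 for errors, 2 for pending changes.
    code = runner(["terraform", "plan", "-input=false", "-out=tfplan", "-detailed-exitcode"])
    if code == 0:
        return "no changes"
    if code != 2:
        return "plan failed"
    if not approve():
        return "awaiting approval"
    # Applying the saved plan file applies exactly what was reviewed, without a prompt.
    if runner(["terraform", "apply", "-input=false", "tfplan"]) != 0:
        return "apply failed"
    return "applied"
```

For automatic approval, you would call `run_stack(approve=lambda: True)` from a checkout of the repository. For manual approval, pass a function that waits for a reviewer instead. The `runner` parameter also makes it easy to test the flow without invoking Terraform.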

After an upgrade, if deprecated APIs or controller resources are not updated to a compatible version, the cluster dashboard marks the upgrade status as Blocking for the affected controller or resource.

Global Services

Another Plural feature that helps with multi-cluster upgrades is Global Services. In a multi-cluster Kubernetes environment, it is best practice to configure clusters as similarly as possible, which makes them easier to maintain. In some cases, this is not possible. For example, say you have two clusters: one on GKE and one on AWS. The AWS cluster will likely have the aws-ebs-csi-driver controller installed to manage EBS-backed storage classes and volumes, and that controller has no place on the GKE cluster.

However, some add-ons or controllers are needed on every cluster, irrespective of the underlying infrastructure. For example, cert-manager manages certificates across the cluster's components.

If you have 30 clusters, each of them will have cert-manager installed. To upgrade cert-manager, you must update the Terraform or GitOps configuration 30 times, once for each cluster.

However, in Plural, you can define this add-on as a Global Service. Then, using tags, you can specify which clusters it should be installed on. The upshot is that you only have to make changes to the config once, and the add-on will be upgraded in all the clusters it is installed on.
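A few lines of Python can illustrate the idea behind tag-based Global Services. A single service definition and a tag selector fan out to every matching cluster, so a version bump happens in exactly one place. The data model is an assumption made for this sketch and is not Plural's API. The same is true of the matching rule, under which a cluster must carry every tag in the selector.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    tags: Dict[str, str] = field(default_factory=dict)


@dataclass
class GlobalService:
    name: str
    version: str
    # Assumed selector semantics: a cluster matches if it carries every one of these tags.
    tags: Dict[str, str] = field(default_factory=dict)


def matches(cluster: Cluster, service: GlobalService) -> bool:
    return all(cluster.tags.get(key) == value for key, value in service.tags.items())


def render(service: GlobalService, clusters: List[Cluster]) -> Dict[str, str]:
    """Expand one service definition into a cluster name -> version deployment map."""
    return {c.name: service.version for c in clusters if matches(c, service)}


if __name__ == "__main__":
    fleet = [
        Cluster("eks-prod", {"env": "prod"}),
        Cluster("gke-prod", {"env": "prod"}),
        Cluster("eks-dev", {"env": "dev"}),
    ]
    # Hypothetical version numbers, for illustration only.
    cert_manager = GlobalService("cert-manager", "v1.13.0", {"env": "prod"})
    print(render(cert_manager, fleet))  # {'eks-prod': 'v1.13.0', 'gke-prod': 'v1.13.0'}
    cert_manager.version = "v1.14.0"     # one edit...
    print(render(cert_manager, fleet))  # ...and every matching cluster picks it up
```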

The image below shows cert-manager defined as a global service, with tags selecting the clusters it will be installed on. The second image shows the cert-manager service deployment definition, including its version and other specs.

CD Workflow

Finally, Plural streamlines cluster and service upgrades through its GitOps-powered CD pipeline. This workflow orchestrates staged upgrades across multiple clusters using InfrastructureStack custom resources. When configuration changes are pushed to Git, the pipeline first updates these resources for development clusters. After integration tests pass and approvals are received, it automatically updates the production clusters' resources, ensuring consistent and validated changes across your infrastructure.
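A staged rollout can be modeled as an ordered list of stages, each with gates that must pass before the change moves on. The sketch below is a generic illustration under that assumption. `Stage`, `promote`, and the `apply` callback are hypothetical names. The sketch does not model InfrastructureStack resources or Plural's pipeline API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Stage:
    name: str
    clusters: List[str]
    # Checks that must all pass after this stage is applied, e.g. integration tests or an approval.
    gates: List[Callable[[], bool]] = field(default_factory=list)


def promote(change: str, stages: List[Stage], apply: Callable[[str, str], None]) -> List[str]:
    """Apply `change` stage by stage, halting when a stage's gates fail.

    Returns the names of the stages the change was applied to.
    """
    applied = []
    for stage in stages:
        for cluster in stage.clusters:
            apply(cluster, change)
        applied.append(stage.name)
        if not all(gate() for gate in stage.gates):
            break  # later stages (e.g. prod) never see an unvalidated change
    return applied


if __name__ == "__main__":
    log = []
    dev = Stage("dev", ["dev-1", "dev-2"], gates=[lambda: True, lambda: True])  # tests pass, approved
    prod = Stage("prod", ["prod-1", "prod-2"])
    print(promote("bump k8s version", [dev, prod], lambda c, ch: log.append(c)))  # ['dev', 'prod']
    print(log)  # ['dev-1', 'dev-2', 'prod-1', 'prod-2']
```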

Conclusion

Historically, handling API deprecations, add-on compatibility, and varying configurations across environments has made multi-cluster Kubernetes upgrades a significant undertaking.

Plural makes it easier. By leveraging features such as pre-flight checks, global services, and continuous delivery workflows, Plural reduces manual effort, ensuring that clusters remain secure, efficient, and aligned with the latest advancements in the Kubernetes ecosystem.

Need to upgrade and want to try out Plural? Schedule a demo to experience the power of Plural in action.
